What is LLM and Knowledge Graph Integration?

In today’s AI landscape, there are two key technologies that are transforming machine understanding, reasoning, and natural language processing: Large Language Models (LLMs) and Knowledge Graphs (KGs).

LLMs, like OpenAI’s GPT series or Meta’s Llama series, have shown incredible potential in generating human-like text, answering complex questions, and creating content across diverse fields.

Meanwhile, KGs organize and integrate information in a structured way, allowing machines to understand and infer the relationships between real-world entities. They encode entities (such as people, places, and things) and the relationships between them, which makes them well suited to tasks such as question answering and information retrieval.

Emerging research has demonstrated that the synergy between LLMs and KGs can help us create AI systems that are more contextually aware and accurate. In this article, we explore different methods for integrating the two, showing how this can help you harness the strengths of both.

Knowledge Graph and LLM Integration Approaches

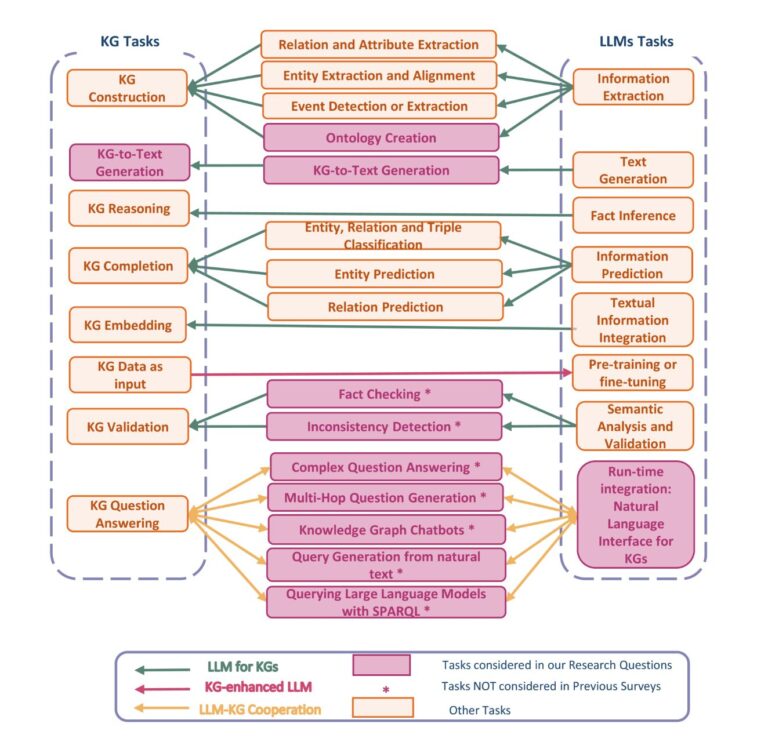

You can think of the interaction between LLMs and KGs in three primary ways.

First, there are Knowledge-Augmented Language Models, where KGs can be used to enhance and inform the capabilities of LLMs. Second, you have LLMs-for-KGs, where LLMs are used to strengthen and improve the functionality of KGs. Finally, there are Hybrid Models, where LLMs and KGs work together to achieve more advanced and complex results.

Let’s look at all three.

1. Knowledge-Augmented Language Models (KG-Enhanced LLMs)

A direct way to integrate KGs with LLMs is through Knowledge-Augmented Language Models (KALMs). In this approach, you augment your LLM with structured knowledge from a KG, enabling the model to ground its predictions in reliable data. For example, KALMs can improve tasks like Named Entity Recognition (NER) by using the structured information in a KG to identify and classify entities in text more accurately. This method combines the generative power of LLMs with the precision of KGs.
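Real KALMs fuse KG knowledge into the model itself, but the basic idea of using a KG to recognize and type entities can be illustrated with a simple lookup. The sketch below is a simplification, not a KALM: it assumes a tiny hypothetical in-memory KG (`KG_ENTITIES`) that maps entity names to node labels, and it tags each KG entity found in a sentence with its type. Those tags could then be passed to an LLM as extra grounding context.

```python
import re

# Hypothetical miniature KG: entity name -> entity type (node label)
KG_ENTITIES = {
    "Drug X": "Drug",
    "Disease Y": "Disease",
    "Alice Johnson": "Person",
    "Harvard University": "Institution",
}


def tag_entities(text: str, kg: dict[str, str]) -> list[tuple[str, str, int]]:
    """Return (entity, type, start offset) for every KG entity mentioned in text."""
    tags = []
    for name, label in kg.items():
        # Whole-word match so that "Drug X" does not match inside "Drug XY"
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            tags.append((name, label, match.start()))
    # Report entities in the order they appear in the text
    return sorted(tags, key=lambda tag: tag[2])


# Usage: tag_entities("Dr. Alice Johnson tested Drug X on Disease Y.", KG_ENTITIES)
```

For the example sentence, this returns the person, the drug, and the disease with their labels, which is exactly the kind of typed-entity information a KG contributes to NER.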

2. LLMs for KGs

Another approach is to use LLMs to simplify the creation of KGs. LLMs can assist in designing the knowledge graph ontology, and you can also use them to automate the extraction of entities and relationships. Additionally, LLMs help with KG completion by predicting missing components based on existing patterns, as seen with models like KG-BERT. They can also help keep your KG accurate and consistent by validating and fact-checking its contents against the source corpora.
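As a small illustration of the validation step, the sketch below checks LLM-extracted triples against an ontology before they are written to the graph. The `Triple` shape and the `ALLOWED` schema are hypothetical; a real system would derive the allowed patterns from its own ontology.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    subject_label: str
    relation: str
    obj: str
    obj_label: str


# Hypothetical ontology: which relationship types may connect which node labels
ALLOWED = {
    ("Drug", "TREATS", "Disease"),
    ("Person", "ASSOCIATED_WITH", "Institution"),
    ("Drug", "INTERACTS_WITH", "Drug"),
}


def split_by_schema(triples):
    """Separate triples that fit the ontology from those that need review."""
    valid, rejected = [], []
    for t in triples:
        pattern = (t.subject_label, t.relation, t.obj_label)
        (valid if pattern in ALLOWED else rejected).append(t)
    return valid, rejected
```

Given a correct triple and one with subject and object swapped, the swapped one is rejected, because the schema only allows `TREATS` to point from a `Drug` to a `Disease`.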

3. Hybrid Models (LLM-KG Cooperation)

Hybrid models represent a more complex integration, where KGs and LLMs collaborate throughout the process of understanding and generating responses. In these models, KGs are integrated into the LLM’s reasoning process.

One such approach is where the output generated by an LLM is post-processed using a Knowledge Graph. This ensures that the responses provided by the model align with the structured data in the graph. In this scenario, the KG serves as a validation layer, correcting any inconsistencies or inaccuracies that may arise from the LLM’s generation process.
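A minimal sketch of such a validation layer, assuming the factual claims in the LLM’s answer have already been extracted as (subject, relation, object) triples, for example by a second extraction prompt. The in-memory `KG` set stands in for real graph queries and is hypothetical.

```python
# Hypothetical KG contents stored as (subject, relation, object) triples
KG = {
    ("Drug X", "INTERACTS_WITH", "Drug Y"),
    ("Drug X", "INTERACTS_WITH", "Drug Z"),
    ("Drug X", "TREATS", "Disease Y"),
}


def validate_claims(claims, kg):
    """Label each claimed triple as supported or unsupported by the KG."""
    return {claim: ("supported" if claim in kg else "unsupported") for claim in claims}


# Usage: validate_claims([("Drug X", "INTERACTS_WITH", "Drug Q")], KG)
```

Because a KG is rarely complete, an unsupported claim is not necessarily false; flagging it for review or asking the LLM to revise its answer is usually safer than deleting it outright.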

Alternatively, you can build the AI workflow such that the LLM prompt is created by querying the KG for relevant information. This information is then used to generate a response, which is finally cross-checked against the KG for accuracy.
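The query, generate, cross-check workflow can be sketched as follows. `INTERACTIONS` is a hypothetical stand-in for a KG query, `llm` is any function that takes a prompt string and returns text (a stub replaces the real model here), and the cross-check is a deliberately naive string match that reports KG facts the answer failed to mention.

```python
from typing import Callable

# Hypothetical KG contents: drug -> drugs it interacts with
INTERACTIONS = {"Drug X": ["Drug Y", "Drug Z"]}


def answer_with_kg(drug: str, question: str, llm: Callable[[str], str]):
    # 1. Query the KG for relevant facts
    facts = INTERACTIONS.get(drug, [])
    # 2. Build the prompt from those facts and call the LLM
    context = "\n".join(f"- {drug} interacts with {other}" for other in facts)
    prompt = f"Known facts:\n{context}\n\nQuestion: {question}"
    response = llm(prompt)
    # 3. Cross-check: list KG facts that the response does not mention
    missing = [other for other in facts if other not in response]
    return response, missing


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call."""
    return "Drug X interacts with Drug Y, so monitor the patient closely."
```

With the stub, the cross-check reports that the answer omitted `Drug Z`, which a real system could use to re-prompt the model.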

Benefits of Knowledge Graph and LLM Integration

There are numerous benefits to integrating LLMs with Knowledge Graphs. Here are a few.

1. Enhanced Data Management

Integrating KGs with LLMs allows you to manage data more effectively. KGs provide a structured format for organizing information, which LLMs can then access and use to generate informed responses. KGs can also be visualized, which makes it easier to spot inconsistencies in your data. And because the graph model is flexible, you can add new entity and relationship types as your domain grows without redesigning a rigid schema.

2. Contextual Understanding

By combining the structured knowledge of KGs with the language processing abilities of LLMs, your AI systems can achieve deeper contextual understanding. This integration lets your models use the relationships between different pieces of information, and because an answer can be traced back to the specific entities and relationships it drew on, it also helps you build explainable AI systems.
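To make an answer explainable, the system can return the chain of relationships that connects the entities involved. The sketch below runs a breadth-first search over a small hypothetical adjacency list (`GRAPH`, including the made-up `Enzyme E` node) and returns the shortest path as a list of triples that can be shown to the user as a justification.

```python
from collections import deque

# Hypothetical KG as an adjacency list: node -> [(relation, neighbor), ...]
GRAPH = {
    "Drug X": [("INTERACTS_WITH", "Drug Y")],
    "Drug Y": [("METABOLIZED_BY", "Enzyme E")],
    "Enzyme E": [],
}


def explain_path(graph, start, goal):
    """Breadth-first search for the shortest relationship path from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path  # list of (subject, relation, object) hops
        for relation, neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, path + [(node, relation, neighbor)]))
    return None  # no connection found
```

Each hop in the returned path is a fact the user can inspect, which is what distinguishes a KG-backed explanation from an unsupported assertion.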

3. Collaborative Knowledge Building

The KG-LLM integration also helps create systems where KGs and LLMs continuously improve upon each other. As the LLM processes new information, your pipeline can update the KG with new relationships or facts which, in turn, can be used to improve the LLM’s responses. This adaptive process helps your AI systems continually improve and stay up to date.

4. Dynamic Learning

By leveraging the structured knowledge that KGs provide, you can build LLM-powered AI systems in fields such as healthcare or finance, where data is dynamic and constantly evolving. Keeping your KG continuously updated with the latest information ensures that LLMs have access to accurate and relevant context. This enhances their ability to generate precise and contextually appropriate responses.

5. Improved Decision-Making

One of the most significant benefits of integrating KGs with LLMs is better decision-making. By grounding its decisions in structured, reliable knowledge, your AI system can make more informed and accurate choices, reducing the likelihood of errors and hallucinations. GraphRAG systems are an example: they are increasingly used to augment LLM responses with factual, grounded data that was not part of the model’s training data.

Challenges in LLM and Knowledge Graph Integration

1. Alignment and Consistency

One of the main challenges in integrating KGs with LLMs is ensuring alignment and consistency between the two. Because KGs are rigidly structured while LLMs are flexible and generative, aligning an LLM’s outputs with the structure and rules of a KG can be difficult. To make the two systems work together, you will need an orchestration layer (a mediator) that prompts the LLM and issues KG queries whenever the LLM needs additional context.
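One way to build that mediator is a loop in which the LLM either answers or requests a KG lookup. The sketch below assumes a hypothetical convention in which the LLM replies `LOOKUP: <entity>` when it needs more context; `KG_FACTS` and `fake_llm` are stand-ins for a real graph query and a real model call. The `max_lookups` budget keeps a confused model from looping forever.

```python
from typing import Callable

# Hypothetical KG lookup table: entity -> facts
KG_FACTS = {"Drug X": ["Drug X interacts with Drug Y"]}
LOOKUP_PREFIX = "LOOKUP:"  # assumed convention the LLM is instructed to follow


def orchestrate(question: str, llm: Callable[[str], str], max_lookups: int = 3) -> str:
    """Prompt the LLM; when it requests a KG lookup, run it and re-prompt with the facts."""
    context: list[str] = []
    lookups = 0
    while True:
        prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
        reply = llm(prompt).strip()
        if not reply.startswith(LOOKUP_PREFIX):
            return reply  # the LLM answered directly
        if lookups == max_lookups:
            return "Unable to answer within the lookup budget."
        lookups += 1
        entity = reply[len(LOOKUP_PREFIX):].strip()
        context.extend(KG_FACTS.get(entity, [f"No KG facts found for {entity}"]))


def fake_llm(prompt: str) -> str:
    """Stub model: asks for a lookup until the needed fact is in its context."""
    if "Drug X interacts with Drug Y" not in prompt:
        return "LOOKUP: Drug X"
    return "Drug X interacts with Drug Y, so review that combination."
```

In this design the LLM never queries the graph itself; the mediator decides which lookups to run, which makes it easy to log, limit, and audit KG access.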

2. Real-Time Querying

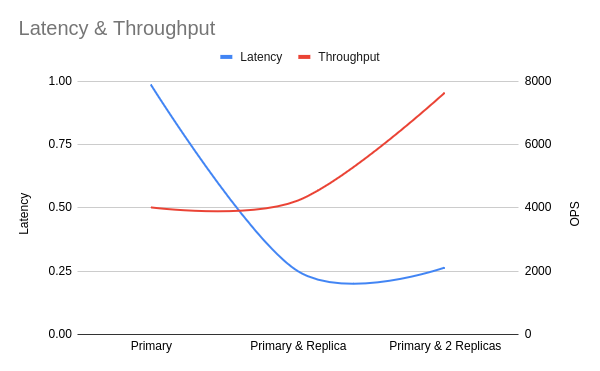

Another challenge is real-time querying. While KGs can provide highly accurate, structured information, querying them in real time can be computationally expensive and slow. This is a significant hurdle if you want to integrate KGs with LLMs in real-time applications such as chatbots or virtual assistants. To overcome it, use a graph database that offers low-latency queries and scales with your workload.

3. Scalability

As your data grows, so does the complexity of managing and integrating KGs with LLMs. Scalability is a significant challenge, because both the KG and the LLM need to be updated and maintained as new information becomes available. This means architecting your infrastructure for scale and choosing a graph database that can handle growing query and write volumes.
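On the write side, one common way to keep ingestion scalable is to send rows in batches instead of one query per fact. The sketch below pairs a parameterized Cypher `UNWIND` query with a small batching helper; the `INTERACTS_WITH` schema, the batch size, and the commented-out `graph.query(...)` call are assumptions to adapt to your own data and client library.

```python
from itertools import islice

# Parameterized Cypher: one round trip writes a whole batch of rows
BATCH_QUERY = """
UNWIND $rows AS row
MERGE (a:Drug {name: row.source})
MERGE (b:Drug {name: row.target})
MERGE (a)-[:INTERACTS_WITH]->(b)
"""


def batches(rows, size):
    """Yield successive lists of at most `size` rows."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


# Usage sketch (the client call is an assumption; adapt it to your driver):
# rows = [{"source": "Drug X", "target": "Drug Y"}, ...]
# for chunk in batches(rows, 1000):
#     graph.query(BATCH_QUERY, {"rows": chunk})
```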

4. Handling Hallucinations and Inaccuracies

While KGs can help mitigate the issue of hallucinations in LLMs, eliminating them remains a challenge. Ensuring that your LLM only generates factually accurate information requires ongoing validation and refinement of both the model and the KG. Additionally, addressing inaccuracies within the KG itself can further complicate the integration process. Wherever possible, you should use KG visualization tools to inspect and improve the KG data.

| Category | Benefits | Challenges |
|---|---|---|
| Enhanced Data Management | KGs offer structured data organization; visualization helps identify inconsistencies; high flexibility and simplicity. | Ensuring consistency between structured KGs and flexible LLMs. |
| Contextual Understanding | Deeper contextual understanding by combining KG relationships with LLMs' processing abilities; builds explainable AI systems. | Aligning LLM outputs with KG structure and rules can be challenging. |
| Collaborative Knowledge Building | Continuous improvement as KGs and LLMs update each other; adaptive process ensures AI systems stay current. | Handling updates and maintaining alignment as new data is introduced can be complex. |
| Dynamic Learning | KGs provide up-to-date context for LLMs; enhances AI responses in dynamic fields like healthcare or finance. | Managing real-time querying is computationally expensive and time-consuming. |
| Improved Decision-Making | AI decisions are grounded in reliable, structured knowledge; reduces errors and hallucinations, improving outcomes. | Ensuring accuracy requires ongoing validation and refinement of both the LLM and the KG. |
| Scalability | Allows for managing growing data complexities with structured knowledge. | Scaling infrastructure to handle growing data and maintaining updated LLM and KG can be challenging. |
| Handling Hallucinations | KGs mitigate LLM hallucinations. | Eliminating inaccuracies within LLM and KG data remains a challenge; visualization tools may be needed to improve data quality. |

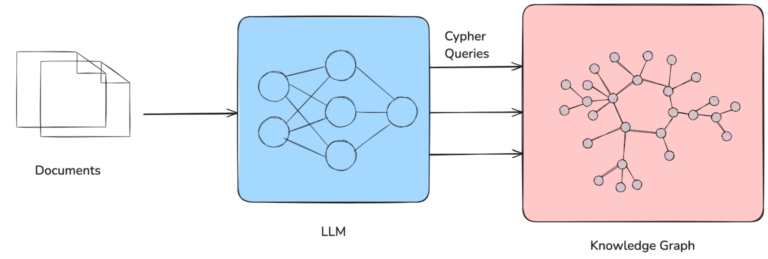

How LLMs Help in the Knowledge Graph Creation Process

LLMs are increasingly playing a vital role in the Knowledge Graph creation process. LLMs like the GPT-4o series, Cohere models, Gemini models, or variants of Llama 3.1 and Mistral all demonstrate powerful capabilities in detecting entities and relationships from natural language text, which can be used for creating KGs.

By selecting the right LLM, you can automate the extraction of entities and relationships from your data, helping keep the graph you create comprehensive, accurate, and up to date. This is particularly valuable in domains where data evolves constantly, making manual updates to the knowledge graph cumbersome.

- Entity Resolution: LLMs can significantly enhance the entity resolution process in KG creation by accurately identifying and linking entities across different data sources. This helps you create a more consistent and reliable KG which, in turn, improves the performance of your AI systems.

- Tagging of Unstructured Data: By leveraging the language comprehension abilities of LLMs, you can efficiently tag unstructured data with relevant entities and relationships. This makes it easier to integrate unstructured data into your KG, expanding its scope and utility.

- Entity and Class Extraction: LLMs can efficiently extract entities and classes from datasets. This step is crucial for creating a comprehensive KG. By automating this process, you can reduce the time and effort required to build and maintain your KG.

- Ontology Alignment: LLMs can assist in aligning different ontologies within your KG, ensuring consistency and logic in the relationships between entities. This alignment is essential for maintaining the integrity and accuracy of your KG as it grows.
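Before asking an LLM to adjudicate difficult matches, a cheap deterministic pass can group obvious duplicates. The sketch below normalizes entity mentions (lowercasing, stripping punctuation, expanding a hypothetical `ALIASES` table) and groups mentions that share a key. It only catches surface variants; cases such as an acronym versus a full name are where an LLM adds value.

```python
import re
from collections import defaultdict

# Hypothetical alias table: abbreviations to expand, titles to drop (mapped to "")
ALIASES = {"univ": "university", "dr": ""}


def normalize(name: str) -> str:
    """Lowercase, strip punctuation, and expand or drop known aliases."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    expanded = [ALIASES.get(t, t) for t in tokens]
    return " ".join(t for t in expanded if t)


def group_mentions(mentions):
    """Group raw mentions that normalize to the same key (candidate duplicates)."""
    groups = defaultdict(list)
    for mention in mentions:
        groups[normalize(mention)].append(mention)
    return dict(groups)
```

For example, "Harvard Univ." and "Harvard University" land in the same group, as do "Dr. Alice Johnson" and "Alice Johnson", so each group can be merged into a single node.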

Enhancing Knowledge Graph Creation with LLMs

To streamline KG creation, you can use an LLM such as GPT-4o. For example, you can prompt it as follows:

“Extract key entities and relationships from the following text and generate corresponding Cypher queries:

In a study, Drug X was tested on Disease Y by Dr. Alice Johnson from Harvard University during Phase II of the trial, showing significant improvement in patient outcomes.”

The LLM might return Cypher along these lines:

```cypher
MERGE (d:Drug {name: "Drug X"})
MERGE (disease:Disease {name: "Disease Y"})
MERGE (person:Person {name: "Dr. Alice Johnson"})
MERGE (institution:Institution {name: "Harvard University"})
MERGE (phase:TrialPhase {name: "Phase II"})
MERGE (outcome:Outcome {description: "Significant improvement"})
MERGE (d)-[:TREATS]->(disease)
// The drug was studied by the researcher, so the edge points from drug to person
MERGE (d)-[:STUDIED_BY]->(person)
MERGE (d)-[:PART_OF]->(phase)
MERGE (person)-[:ASSOCIATED_WITH]->(institution)
MERGE (d)-[:RESULTED_IN]->(outcome)
```

As new data comes in, you can prompt the LLM to extract new entities and relationships and update the KG.

For example, if the new input is:

“A follow-up Phase III study of Drug X led by Dr. Bob Smith at Stanford University confirms earlier findings.”

The generated Cypher might be:

```cypher
MERGE (d:Drug {name: "Drug X"})
MERGE (person:Person {name: "Dr. Bob Smith"})
MERGE (institution:Institution {name: "Stanford University"})
MERGE (phase:TrialPhase {name: "Phase III"})
MERGE (d)-[:PART_OF]->(phase)
MERGE (d)-[:STUDIED_BY]->(person)
MERGE (person)-[:ASSOCIATED_WITH]->(institution)
```

Using LLMs this way can significantly reduce the time it takes to create a KG. You can also keep the KG updated automatically by running the LLM-generated queries as new data arrives, ideally after validating them, since LLM output can contain errors.
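Running LLM-written Cypher verbatim means trusting the model with your database. A more defensive variant, sketched below under the assumption that you prompt the LLM to return JSON triples instead of Cypher (the JSON field names are hypothetical), builds parameterized `MERGE` statements yourself. Because Cypher cannot parameterize labels or relationship types, those are checked against an allowlist taken from the examples above.

```python
import json

# Labels and relationship types cannot be Cypher parameters, so allowlist them
ALLOWED_LABELS = {"Drug", "Disease", "Person", "Institution", "TrialPhase"}
ALLOWED_RELS = {"TREATS", "STUDIED_BY", "PART_OF", "ASSOCIATED_WITH", "RESULTED_IN"}


def to_cypher(llm_json: str) -> list[tuple[str, dict]]:
    """Turn LLM-extracted JSON triples into (query, params) pairs."""
    statements = []
    for t in json.loads(llm_json):
        src, rel, dst = t["source_label"], t["relation"], t["target_label"]
        if src not in ALLOWED_LABELS or dst not in ALLOWED_LABELS or rel not in ALLOWED_RELS:
            raise ValueError(f"Unexpected label or relationship in {t}")
        query = (
            f"MERGE (a:{src} {{name: $source}}) "
            f"MERGE (b:{dst} {{name: $target}}) "
            f"MERGE (a)-[:{rel}]->(b)"
        )
        # Entity names travel as parameters, never spliced into the query text
        statements.append((query, {"source": t["source"], "target": t["target"]}))
    return statements
```

Each `(query, params)` pair can then be passed to your graph client’s parameterized query method.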

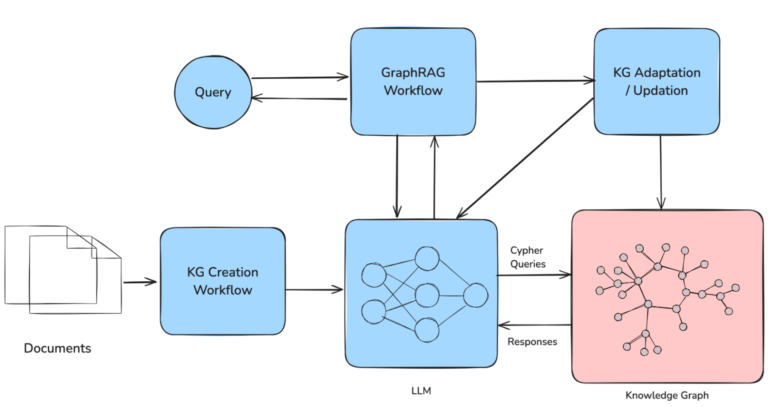

Improving LLM Performance with Knowledge Graphs

Alternatively, integrating Knowledge Graphs (KGs) into Large Language Models (LLMs) can significantly enhance their performance. In this method, before generating a response with an LLM, you can query a KG to retrieve relevant context.

For instance, if you’re building a healthcare chatbot that needs to answer a question about drug interactions, you can first query the KG with a Cypher MATCH query:

```cypher
// Find drugs that interact with Drug X. The pattern is undirected because
// an interaction may have been stored in either direction.
MATCH (drug:Drug {name: "Drug X"})-[:INTERACTS_WITH]-(interaction:Drug)
RETURN DISTINCT interaction.name AS InteractingDrugs
```

Once you have the relevant data from the KG, incorporate it into the LLM prompt. This approach helps the model generate more accurate and contextually relevant responses:

```python
from openai import OpenAI

client = OpenAI()  # reads the OPENAI_API_KEY environment variable

# Assume you retrieved the following data from the KG
interacting_drugs = ["Drug Y", "Drug Z"]

# Create a prompt using KG data
prompt = f"""A patient is taking Drug X. What should be considered in terms of drug interactions?
Note that Drug X interacts with {', '.join(interacting_drugs)}."""

# Call the LLM with the enhanced prompt (openai>=1.0 client API)
response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt},
    ],
)

print(response.choices[0].message.content)
```

Finally, as the LLM processes more queries and surfaces new information, you can update the KG to reflect it. For instance, once a new drug interaction suggested by the LLM has been verified, you can insert this relationship into the KG:

```cypher
// Update the KG with the new interaction
MERGE (drug:Drug {name: "Drug X"})
MERGE (new_interaction:Drug {name: "Suggested Drug"})
MERGE (drug)-[:INTERACTS_WITH]->(new_interaction)
```

This loop lets the KG grow over time, and because the LLM draws its context from the KG, your AI system’s responses stay up to date along with it.

How LLMs Retrieve Information from Knowledge Graphs and Their Accuracy

1. Retrieval Methods

LLMs can retrieve information from KGs using a variety of methods, including direct queries, embeddings, and graph traversal algorithms. These methods allow the LLM to access the structured knowledge in the KG and use it to inform its outputs.
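Embedding search and graph traversal are often combined: find the nodes most similar to the question, then expand to their neighbors. The sketch below shows that pattern with hypothetical three-dimensional `EMBEDDINGS` and an `EDGES` adjacency list; in practice the vectors would come from an embedding model and the expansion from a graph query.

```python
import math

# Hypothetical toy embeddings for KG nodes
EMBEDDINGS = {
    "Drug X": [0.9, 0.1, 0.0],
    "Drug Y": [0.8, 0.2, 0.1],
    "Harvard University": [0.0, 0.1, 0.9],
}
# Adjacency list used for the traversal step
EDGES = {"Drug X": ["Drug Y"], "Drug Y": [], "Harvard University": []}


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def retrieve(query_vec, k=1):
    """Take the k nodes most similar to the query, then add their direct neighbors."""
    ranked = sorted(EMBEDDINGS, key=lambda n: cosine(query_vec, EMBEDDINGS[n]), reverse=True)
    seeds = ranked[:k]
    neighbors = [nb for s in seeds for nb in EDGES.get(s, [])]
    return list(dict.fromkeys(seeds + neighbors))  # de-duplicate, keep order
```

The similarity step finds an entry point even when the question does not name an entity exactly, and the traversal step pulls in related facts that embeddings alone might rank too low.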

2. Accuracy Factors

The accuracy of the information retrieved by LLMs from KGs depends on several factors, including the quality of the KG, the retrieval method used, and the context in which the information is applied. By optimizing these factors, you can improve the accuracy of your LLM’s outputs.

3. Accuracy of Retrieved Information

Ensuring the accuracy of the information retrieved by LLMs from KGs is critical to maintaining the reliability of your AI system. Regular validation and refinement of both the LLM and the KG are necessary to ensure that your AI system provides accurate and contextually relevant information.
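One way to make that validation measurable is to keep a small hand-labeled set of questions, each with the facts that should be retrieved for it, and to track the precision and recall of the retrieved facts over time. The sketch below computes both for a single question; the triples are illustrative.

```python
def precision_recall(retrieved: set, relevant: set) -> tuple[float, float]:
    """Precision: share of retrieved facts that are relevant.
    Recall: share of relevant facts that were retrieved."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Illustrative example: one correct fact, one spurious, one missed
retrieved = {("Drug X", "INTERACTS_WITH", "Drug Y"), ("Drug X", "INTERACTS_WITH", "Drug Q")}
relevant = {("Drug X", "INTERACTS_WITH", "Drug Y"), ("Drug X", "INTERACTS_WITH", "Drug Z")}
scores = precision_recall(retrieved, relevant)
```

Low precision points at noisy retrieval or bad KG data; low recall points at missing KG facts or a retrieval method that is too narrow.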

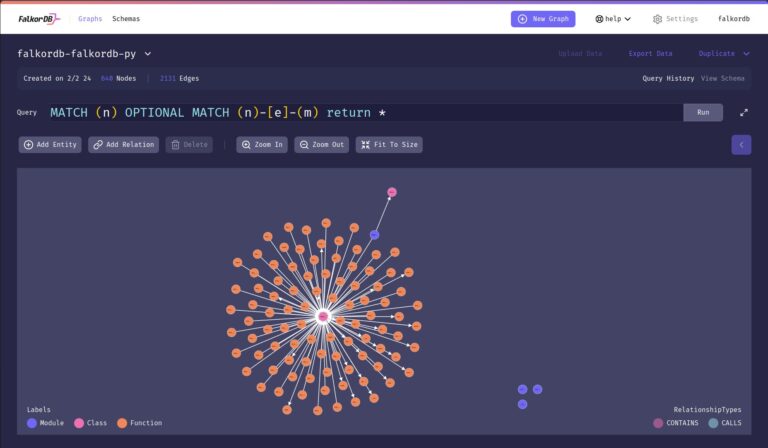

How FalkorDB Solutions Can Help

When you’re looking to integrate Knowledge Graphs (KGs) with Large Language Models (LLMs) for advanced AI applications, FalkorDB offers a cutting-edge and scalable solution designed to meet the demands of high-performance, real-time knowledge retrieval. It also offers graph visualization, which simplifies the process of building and managing graph data.

FalkorDB stands out because of its unique ability to efficiently represent and query structured data, capturing complex relationships between entities such as people, places, events, and more. This is particularly beneficial when you’re dealing with large datasets that require precise and contextually relevant answers. The database’s ease of integration with LLMs allows for dynamic interactions where LLMs can retrieve and use data from the KG in real time, enhancing the overall performance of your AI systems.

Moreover, FalkorDB’s GraphRAG (Graph Retrieval-Augmented Generation) capabilities help the LLM retrieve the most relevant information and generate outputs that are contextually enriched and grounded in facts. FalkorDB also supports both KG queries and vector search, which is valuable for building applications in domains where accuracy and precision are paramount.

Conclusion

The integration of Large Language Models (LLMs) with Knowledge Graphs (KGs) represents a powerful advancement in artificial intelligence, enabling systems to combine the natural language processing strengths of LLMs with the structured, relational data stored in KGs. This synergy allows for enhanced contextual understanding, dynamic learning, and improved decision-making capabilities, making your AI applications more robust and accurate.

However, integrating these technologies isn’t without its challenges. Issues such as alignment and consistency, real-time querying, scalability, and the handling of hallucinations need to be carefully managed. To address these challenges and maximize the benefits of LLM and KG integration, tools like FalkorDB offer a compelling solution.

FalkorDB is specifically designed to handle the high-performance demands of integrating KGs with LLMs. Its low-latency querying capabilities and advanced GraphRAG (Graph Retrieval-Augmented Generation) features ensure that your AI systems can deliver fast, accurate, and contextually enriched responses. Whether you’re managing complex data environments in legal, security, or corporate domains, FalkorDB provides the infrastructure necessary to build reliable, high-performance AI applications.

By leveraging FalkorDB, you can effectively harness the full potential of LLMs and KGs, enabling your AI systems to operate with greater precision, speed, and reliability. To get started, refer to the FalkorDB docs, sign up for the FalkorDB cloud, or follow this tutorial.