Retrieval-Augmented Generation (RAG) has become a mainstream approach for working with large language models (LLMs). At its core, RAG retrieves knowledge from external sources and passes it to a language model to generate answers. However, with basic RAG, also known as Naive RAG, you may struggle to get accurate results for complex queries, and you may face slow response times and higher costs when dealing with large datasets.

To address these challenges, researchers have developed several advanced RAG techniques. This article provides an overview of these advanced methods to help you achieve better results when Naive RAG falls short.

Understanding Retrieval-Augmented Generation (RAG)

Every RAG application can be broken down into two phases: retrieval and generation. First, RAG retrieves relevant documents or knowledge snippets from external sources, such as knowledge graphs or vector stores, using search and indexing techniques. This retrieved data is then fed into a language model, which generates contextually rich and accurate responses by synthesizing the retrieved information with its pre-trained knowledge.

RAG systems have evolved as the requirements have become more complex. You can now classify a RAG system into one of the following categories.

Naive RAG

This is the most basic form of RAG, where the system directly uses the retrieved data as input for the generation model without applying advanced techniques to refine the information. It also doesn’t incorporate any enhancements during the generation step.

Modular RAG

This architecture separates the retrieval and generation components into distinct, modular parts. It gives you the flexibility to swap out retrieval or generation models without rewriting the rest of the pipeline.

Advanced RAG

In advanced RAG, complex techniques like re-ranking, auto-merging, and advanced filtering are used to improve either the retrieval step or the generation step. The goal is to ensure that the most relevant information is retrieved in the shortest time possible.

Advanced RAG techniques improve upon the efficiency, accuracy, and relevance of information retrieval and subsequent content generation. By applying these methods, you can tackle complex queries, handle diverse data sources, and create more contextually aware AI systems.

Let’s explore some of these techniques in detail.

Advanced RAG Techniques

In this section, we’ll categorize advanced RAG techniques into four areas: Pre-Retrieval and Data-Indexing Techniques, Retrieval Techniques, Post-Retrieval Techniques, and Generation Techniques.

Pre-Retrieval and Data-Indexing Techniques

Pre-retrieval techniques focus on improving the quality of the data in your knowledge graph or vector store before it is searched and retrieved. You can use them wherever the information needs cleaning, formatting, or organizing.

Clean, well-formatted data improves the quality of the data retrieved which, in turn, influences the final response generated by the LLM. Noisy data, on the other hand, can significantly degrade the quality of the retrieval process, leading to irrelevant or inaccurate responses from the LLM.

Below are some of the ways you can pre-process your data.

#1 - Increase Information Density Using LLMs

When working with raw data, you often encounter extraneous information or irrelevant content that can introduce noise into the retrieval process. For example, consider a large dataset of customer support interactions. It would include lengthy transcripts that mix useful insights with off-topic or irrelevant content. To achieve higher-quality retrieval, you would want to increase the information density before the data ingestion step.

One approach to achieve this is by leveraging LLMs. LLMs can extract useful information from raw data, summarize overly verbose text, or isolate key facts, thereby increasing the information density. You can then convert this denser, cleaner data into a knowledge graph using Cypher queries or embeddings for more effective retrieval.

#2 - Deduplicate Information in Your Data Index Using LLMs

Data duplication in your dataset can affect retrieval accuracy and response quality, but you can address this with a targeted technique.

One approach is to use clustering algorithms like K-means, which group together chunks of data with the same semantic meaning. These clusters can then be merged into single chunks using LLMs, effectively eliminating duplicate information.

Consider the example of a corporate document repository that contains multiple policy documents related to customers. The same information might be present in the following way:

Document 1: “Employees must ensure all customer data is stored securely. Customer data should not be shared without consent.”

Document 2: “All customer data must be encrypted. Consent is required before sharing.”

Document 3: “Ensure customer data is securely stored. Do not share customer data without obtaining consent from the right stakeholders.”

The deduplicated text will be:

Consolidated Text: “Customer data must be securely encrypted and stored, and sharing of customer data is prohibited without explicit consent.”

Researchers have employed similar techniques to produce high-quality pre-training data for LLMs.
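The grouping-and-merging idea can be sketched in a few lines. This is a minimal illustration rather than a production pipeline: it groups near-duplicate chunks by word overlap (Jaccard similarity) as a lightweight stand-in for clustering embeddings with K-means, and the `merge` function is a hypothetical placeholder for the LLM merge step. The threshold of 0.5 is an assumption chosen for the example.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set used to compare chunks."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two chunks have in common."""
    return len(a & b) / len(a | b) if a | b else 0.0


def group_duplicates(chunks: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedily place each chunk in the first group whose first member it resembles."""
    groups: list[list[str]] = []
    for chunk in chunks:
        for group in groups:
            if jaccard(tokens(chunk), tokens(group[0])) >= threshold:
                group.append(chunk)
                break
        else:
            groups.append([chunk])
    return groups


def merge(group: list[str]) -> str:
    """Placeholder for the LLM merge step: keep the longest chunk in the group."""
    return max(group, key=len)


chunks = [
    "Customer data must be stored securely.",
    "All customer data must be stored securely.",
    "Vacation requests need manager approval.",
]
deduplicated = [merge(group) for group in group_duplicates(chunks)]
print(deduplicated)
```

In a real system, you would replace `jaccard` with a similarity computed on embeddings and `merge` with an LLM prompt that consolidates each group into one chunk.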

#3 - Improve Retrieval Symmetry With a Hypothetical Question Index

Hypothetical Question Indexing uses a language model to generate one or more questions for each data chunk stored in the database. These questions can later be used to inform the retrieval step.

During retrieval, the user query is semantically matched against all the questions generated by the model. The questions most similar to the user query are retrieved, and the chunk linked to the most similar question is passed on to the LLM to generate a response.

The key to this method is allowing the LLM to pre-generate the questions and store them along with the document chunks.
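As a rough sketch of how such an index might look, the example below stores questions alongside the IDs of the chunks they belong to and matches the user query against the questions, not the chunks. The questions are written by hand here (in practice an LLM would generate them at indexing time), and the word-count `embed` function is a toy stand-in for a real embedding model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (word counts); a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# chunk_id -> chunk text
chunks = {
    "c1": "Refunds are issued within 14 days of receiving the returned item.",
    "c2": "Standard shipping takes 3 to 5 business days.",
}

# (hypothetical question, chunk_id) pairs, pre-generated and stored with the chunks.
question_index = [
    ("How long does a refund take?", "c1"),
    ("When will I get my money back?", "c1"),
    ("How long does shipping take?", "c2"),
]


def retrieve(query: str) -> str:
    """Match the query against stored questions and return the linked chunk."""
    query_vector = embed(query)
    _, chunk_id = max(question_index, key=lambda item: cosine_similarity(query_vector, embed(item[0])))
    return chunks[chunk_id]


print(retrieve("When do I get my money back?"))
```

Note that the query shares almost no words with chunk `c1` itself; it is the stored question that bridges the gap between how users ask and how documents are written.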

How Simple Chunking Works

Simple chunking is a method used in text processing to break down large pieces of text into smaller, more manageable portions. This technique involves dividing documents into fixed-sized segments, with some portions of these segments overlapping. The main purpose of this overlap is to ensure that each segment keeps some context from the preceding one.

Implementation Details

To implement simple chunking, you determine a specific size for each segment—say, 35 characters. Additionally, you decide on an overlap size that maintains context between segments. This method is straightforward and can be applied easily, making it suitable for fairly simple documents.

However, it might not fully leverage the structure of more intricate texts, as it relies purely on fixed sizes rather than the inherent organization of the document.
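A minimal sketch of fixed-size chunking with overlap, using the 35-character segment size mentioned above. The overlap of 10 characters and the function name `chunk_text` are assumptions made for illustration.

```python
def chunk_text(text: str, size: int = 35, overlap: int = 10) -> list[str]:
    """Split text into fixed-size character windows that share `overlap` characters."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


text = "Customer data must be stored securely and never shared without consent."
chunks = chunk_text(text)
for chunk in chunks:
    print(repr(chunk))
```

Each chunk begins with the last 10 characters of the previous one, which is how some context carries over. The printed chunks also show the main weakness: the boundaries fall in the middle of words and phrases.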

Pros and Cons of Simple Chunking

Pros:

- Simplicity: This method is easy to set up and requires minimal computational resources.

- Consistent Context: The overlapping parts help maintain some continuity between segments, which is beneficial for straightforward types of text.

Cons:

- Loss of Semantic Integrity: The fixed-size approach might cut off logical units of text, leading to segments that don’t make sense on their own.

- Decreased Performance in Complex Texts: Because it doesn’t consider document structure or natural breaks, it may not work efficiently with more complex documents, impacting retrieval and understanding.

How Semantic Chunking Works

Semantic chunking is a method of dividing text into segments based on their inherent meaning rather than adhering to predetermined lengths. This ensures that each segment, or “chunk,” encapsulates a complete and meaningful portion of information.

How is Semantic Chunking Implemented?

Identifying Similar Sentences: Begin by transforming sentences into embeddings, which are numerical vector representations that capture their meanings.

Calculating Semantic Similarity: Use cosine distance to measure how similar these sentence embeddings are to each other. When the cosine distance falls below a specified threshold, the sentences are considered semantically similar.

Forming Meaningful Chunks: Group semantically similar sentences together. This results in chunks of varying lengths, each one maintaining a coherent meaning based on content.
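The three steps above can be sketched as follows. This is an illustrative simplification: the `embed` function uses word counts as a toy stand-in for a sentence-embedding model, only consecutive sentences are compared, and the distance threshold of 0.8 is an assumption that would need tuning for real embeddings.

```python
import math
import re
from collections import Counter


def embed(sentence: str) -> Counter:
    """Toy embedding (word counts); a real system would use a sentence-embedding model."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine_distance(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return 1.0 - (dot / norm if norm else 0.0)


def semantic_chunks(sentences: list[str], threshold: float = 0.8) -> list[list[str]]:
    """Start a new chunk whenever a sentence is too distant from the previous one."""
    chunks: list[list[str]] = []
    for sentence in sentences:
        if chunks and cosine_distance(embed(chunks[-1][-1]), embed(sentence)) < threshold:
            chunks[-1].append(sentence)  # close in meaning: extend the current chunk
        else:
            chunks.append([sentence])  # topic shift: open a new chunk
    return chunks


sentences = [
    "Customer data must be encrypted.",
    "Customer data must not be shared without consent.",
    "The office closes at six.",
    "The office opens at nine.",
]
for chunk in semantic_chunks(sentences):
    print(chunk)
```

The result is two chunks of different character lengths, one about customer data and one about office hours, rather than segments cut at a fixed size.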

Benefits and Challenges

Benefits: This approach creates chunks that are contextually relevant and coherent, greatly enhancing retrieval accuracy and meaning preservation.

Challenges: Semantic chunking typically requires performing forward passes through advanced models such as BERT, making it more computationally demanding compared to simpler chunking methods.

Retrieval Techniques

These techniques involve optimizing the process of retrieving relevant information from the underlying data store. This includes implementing indexing strategies to efficiently organize and store data, utilizing ranking algorithms to prioritize results based on relevance, and applying filtering mechanisms to refine search outputs.

#1 - Optimize Search Queries Using LLMs

This technique restructures the user’s query into a format that is more understandable by the LLM and usable by retrievers. Here, you first process the user query through a fine-tuned language model to optimize and structure it. This process removes any irrelevant context and adds necessary metadata, ensuring the query is tailored to the underlying data store.

GraphRAG applications already use this technique, which contributes to their effectiveness. In these systems, the LLM translates the user query into Cypher queries, which are then run against the knowledge graph to retrieve relevant information.

#2 - Apply Hierarchical Index Retrieval

You can also use hierarchical indexing to enhance the precision of RAG applications. In this approach, data is organized into a hierarchical structure, with information categorized and sub-categorized based on relevance and relationships.

The retrieval process begins with broader chunks or parent nodes, followed by a more focused search within smaller chunks or child nodes linked to the selected parent nodes. Hierarchical indexing not only improves retrieval efficiency but also minimizes the inclusion of irrelevant data in the final output.
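A minimal sketch of the two-stage lookup, assuming a hypothetical index in which parent nodes are section summaries and child nodes are the detailed chunks. The `overlap` score is a toy stand-in for vector similarity.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def overlap(query: str, text: str) -> int:
    """Toy relevance score: number of shared words. Stand-in for vector similarity."""
    return len(words(query) & words(text))


# Parent nodes hold summaries; child nodes hold the detailed chunks.
index = {
    "Billing policy: invoices, refunds and payment methods": [
        "Refunds are processed within 14 days.",
        "Invoices are sent on the first day of each month.",
    ],
    "Security policy: passwords, encryption and access": [
        "Passwords must be rotated every 90 days.",
        "Customer data is encrypted at rest.",
    ],
}


def hierarchical_search(query: str) -> str:
    """Pick the best parent first, then search only that parent's children."""
    parent = max(index, key=lambda summary: overlap(query, summary))
    return max(index[parent], key=lambda chunk: overlap(query, chunk))


print(hierarchical_search("How are refunds processed under the billing policy?"))
```

Because the second stage only looks at the children of the selected parent, chunks from unrelated sections never compete for a place in the final context.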

#3 - Fix Query-Document Asymmetry With Hypothetical Document Embeddings (HyDE)

The HyDE technique is the opposite of the Hypothetical Question Indexing method described earlier. You can use this technique to perform more accurate data retrieval from the database.

In this technique, a hypothetical answer is generated by a language model based on the query. The search is then performed in the database using the generated answer to fetch the best matching data in the store.

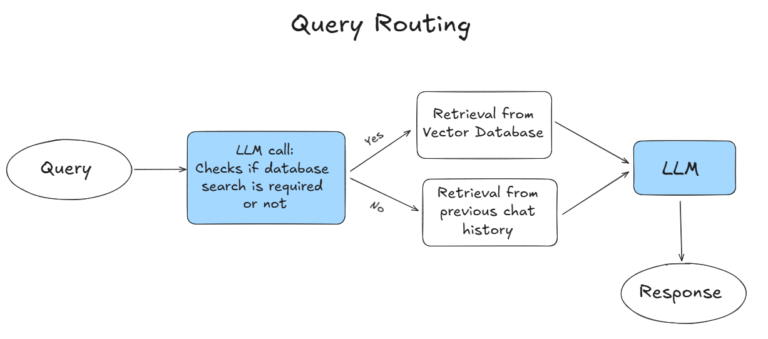
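The following sketch contrasts a direct search with a HyDE-style search. It rests on two assumptions: `generate_hypothetical_answer` is a hypothetical stand-in that returns a canned answer where a real system would call an LLM, and `embed` is a toy word-count embedding.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (word counts); a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(text: str, documents: list[str]) -> str:
    """Return the document most similar to `text`."""
    vector = embed(text)
    return max(documents, key=lambda doc: cosine_similarity(vector, embed(doc)))


def generate_hypothetical_answer(query: str) -> str:
    """Hypothetical stand-in for the LLM call that drafts a plausible answer."""
    return "Refunds are usually processed within a few days of the return."


def hyde_search(query: str, documents: list[str]) -> str:
    """Search with the hypothetical answer instead of the raw query."""
    return search(generate_hypothetical_answer(query), documents)


documents = [
    "Refunds are processed within 14 days of the return.",
    "You get loyalty points back when you shop with us.",
]
query = "When do I get my money back?"
print("direct:", search(query, documents))
print("HyDE:  ", hyde_search(query, documents))
```

In this toy setup, the raw query matches the loyalty-points document because of incidental shared words, while the hypothetical answer is phrased like the refund document and retrieves it. The hypothetical answer does not need to be factually correct; it only needs to resemble the documents you want to find.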

#4 - Implement Query Routing or a RAG Decider Pattern

Query routing involves directing different types of queries to the most appropriate retrieval or generation module within your system. This approach ensures that each query is handled by the most suitable algorithm or data source, optimizing accuracy and efficiency.

For example, a query router can decide whether to retrieve information from a knowledge graph or a vector store. It can also determine if retrieval is necessary or if the relevant data is already present in the LLM’s context. The router might also navigate through an index hierarchy that includes both summaries and document chunk vectors for multi-document storage.

This selection process is guided by an LLM, which formats the outcome to route the query correctly. In more complex scenarios, the routing may extend to sub-chains or additional agents, as seen in the Multi-Documents Agent model.
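A router can be sketched as a classification step followed by a dispatch table. In the example below, `classify` is a hypothetical keyword-based stand-in for the LLM's routing decision, and the three handler functions are placeholders for real retrieval modules.

```python
import re
from typing import Callable


def classify(query: str) -> str:
    """Hypothetical stand-in for the LLM routing decision."""
    words = re.findall(r"[a-z]+", query.lower())
    if words and words[0] in {"hi", "hello", "thanks"}:
        return "no_retrieval"  # small talk: nothing to look up
    if {"connected", "related", "relationship"} & set(words):
        return "knowledge_graph"  # relationship questions suit graph traversal
    return "vector_store"  # default: semantic search over chunks


def search_graph(query: str) -> str:
    return f"graph results for: {query}"


def search_vectors(query: str) -> str:
    return f"vector results for: {query}"


def answer_directly(query: str) -> str:
    return "answered from the LLM's existing context"


ROUTES: dict[str, Callable[[str], str]] = {
    "knowledge_graph": search_graph,
    "vector_store": search_vectors,
    "no_retrieval": answer_directly,
}


def route(query: str) -> str:
    """Send the query to the handler chosen by the classifier."""
    return ROUTES[classify(query)](query)


print(route("How is Alice connected to Bob?"))
print(route("Summarize the refund policy"))
```

Keeping the routes in a dictionary means you can add a new data source by registering one more handler, without touching the classification logic.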

#5 - Self-Query Retrieval

Self-query retrieval is a technique in which the LLM rewrites the initial user query into a structured query to retrieve more precise information. For example, this method can extract metadata from the user’s query and use it to filter the data before searching, which yields more accurate results.

To implement this technique, you first define metadata fields for the documents in your vector store or knowledge graph. Next, you initialize a self-query retriever that connects your LLM with the store. With each user query, the system executes structured searches against the vector store or knowledge graph, facilitating both semantic (or graph-driven) and metadata-based retrieval. This process enhances the accuracy and relevance of the results obtained during the retrieval step. Finally, you can prompt the LLM with the retrieved data to generate informed and contextually appropriate responses.
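The flow described above might look like the following sketch. The metadata fields (`year`, `type`) and the documents are invented for illustration, and `build_structured_query` is a hypothetical regex-based stand-in for the LLM that would normally produce the search text and filters.

```python
import re

# Documents with metadata fields defined up front.
documents = [
    {"text": "Quarterly revenue grew 12 percent.", "year": 2023, "type": "report"},
    {"text": "Quarterly revenue grew 8 percent.", "year": 2022, "type": "report"},
    {"text": "Revenue recognition policy update.", "year": 2023, "type": "policy"},
]

YEAR_PATTERN = r"\b(?:19|20)\d{2}\b"


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def build_structured_query(query: str) -> dict:
    """Hypothetical stand-in for the LLM that turns a query into search text plus filters."""
    filters: dict = {}
    year = re.search(YEAR_PATTERN, query)
    if year:
        filters["year"] = int(year.group())
    for doc_type in ("report", "policy"):
        if doc_type in query.lower():
            filters["type"] = doc_type
    search_text = re.sub(YEAR_PATTERN, "", query).strip()
    return {"search_text": search_text, "filters": filters}


def self_query_search(query: str) -> list[dict]:
    """Apply the metadata filters first, then rank what is left by word overlap."""
    structured = build_structured_query(query)
    matches = [d for d in documents if all(d[k] == v for k, v in structured["filters"].items())]
    query_words = words(structured["search_text"])
    return sorted(matches, key=lambda d: len(query_words & words(d["text"])), reverse=True)


print(build_structured_query("revenue growth in the 2023 report"))
print(self_query_search("revenue growth in the 2023 report"))
```

Without the filter step, the 2022 report would score just as well as the 2023 one on text similarity alone; the extracted metadata is what separates them.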

#6 - Hybrid Search

Hybrid search combines traditional keyword-based search with semantic search. In this technique, you create both a keyword index and a vector-embeddings index. At query time, the system runs both a keyword search and a semantic search and merges the results. The final results are ranked using a reranker model that scores their relevance to the user query.

This approach increases relevance, improves coverage, and offers flexibility for various query types. Hybrid search is especially useful in domains with strict vocabularies. For example, in the healthcare domain, doctors often use abbreviations like “COPD” for Chronic Obstructive Pulmonary Disease or “HTN” for Hypertension. In such cases, a semantic search might miss results with abbreviations, which keyword search can help capture.
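One simple way to merge the two result lists is reciprocal rank fusion (RRF). Note that this is a swapped-in technique: it is a model-free alternative to the reranker model described above, not the same thing. The two input rankings are hard-coded assumptions standing in for the output of a keyword index and a vector index, and `k=60` is a conventional default.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists: each document earns 1 / (k + rank) per list it appears in."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Assumed outputs of the two searches for the query "COPD treatment".
keyword_results = ["copd_note", "htn_note"]  # exact match on the abbreviation
semantic_results = ["lung_disease_article", "copd_note"]  # matched on meaning

fused = reciprocal_rank_fusion([keyword_results, semantic_results])
print(fused)
```

The document found by both searches rises to the top, while documents found by only one search are still kept, which is the coverage benefit hybrid search is after.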

#7 - Graph Search

Graph search leverages knowledge graphs to enhance information retrieval. By representing your data as a graph, where nodes represent entities and edges denote relationships, you can uncover complex connections that keyword search or semantic search methods might miss. When you input a query, the graph search algorithm navigates these relationships, allowing you to retrieve not just direct matches but also contextually relevant information based on the interconnectedness of the data.

This method is particularly useful for applications involving complex datasets where understanding relationships is key to gaining insights. To use graph search, you first convert your data into a knowledge graph, for example with the help of LLMs, and then use Cypher queries to retrieve data based on user queries.
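To illustrate what navigating relationships means, here is a small in-memory sketch of a multi-hop traversal. The triples and entity names are invented for the example; in a graph database the same traversal would be expressed as a Cypher pattern rather than hand-written Python.

```python
from collections import deque

# (subject, relation, object) triples; in practice these could be extracted by an LLM.
triples = [
    ("Alice", "WORKS_AT", "Acme"),
    ("Acme", "SUPPLIES", "Globex"),
    ("Bob", "WORKS_AT", "Globex"),
]


def neighbors(entity: str):
    """Yield (relation, other_entity) for every edge touching `entity`."""
    for subject, relation, obj in triples:
        if subject == entity:
            yield relation, obj
        elif obj == entity:
            yield relation, subject


def related_entities(start: str, max_hops: int = 2) -> dict[str, int]:
    """Breadth-first traversal returning {entity: hops} for everything within max_hops."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if seen[current] == max_hops:
            continue  # do not expand beyond the hop limit
        for _, other in neighbors(current):
            if other not in seen:
                seen[other] = seen[current] + 1
                queue.append(other)
    del seen[start]
    return seen


# Roughly what a variable-length Cypher pattern such as
#   MATCH (a {name: 'Alice'})-[*1..2]-(b) RETURN b
# would return for this data.
print(related_entities("Alice"))
```

A keyword or vector search for "Alice" would not surface Globex, because no single chunk mentions both; the connection only appears when you follow the edges.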

#8 - Fine-tuning Embedding Models

Fine-tuning embedding models is crucial for enhancing retrieval performance by tailoring models more precisely to your specific dataset. Here’s why:

Getting more from your model

- Initial Foundation: Pre-trained models offer a solid foundation based on large amounts of generic data. However, their general training might not capture the nuances of specialized domains.

- Targeted Optimization: By fine-tuning, you’re effectively narrowing the model’s focus to better reflect the unique aspects of your data, leading to more precise retrieval results.

Customized Understanding

- Selecting Specialized Models: It’s important to choose models that align with your domain. For instance, in the medical domain, opting for models trained on comprehensive data sources like PubMed can provide a relevant starting point.

- Enhanced Contextual Recognition: Fine-tuning with examples from your own dataset—both similar and dissimilar pairs—helps the model grasp domain-specific relationships. This makes it more adept at understanding the context and semantics of your queries.

Greater Retrieval Accuracy

- Refining Through Data: By carefully curating data and using it to train the model, you enable it to differentiate more effectively between relevant and irrelevant information. This process sharpens the model’s ability to retrieve the most pertinent results.

- Better Performance Metrics: The outcome of fine-tuning is a marked improvement in retrieval accuracy and efficiency, facilitating better user experiences and decision-making.

Fine-tuning enhances a generally effective model into a powerful, domain-specific tool, increasing retrieval performance overall.

Post-Retrieval Techniques

Post-retrieval techniques refine the search results after they have been retrieved from the data store and before they reach the LLM. The techniques outlined below help improve the LLM prompt by reranking results, verifying the retrieved context, or improving the query.

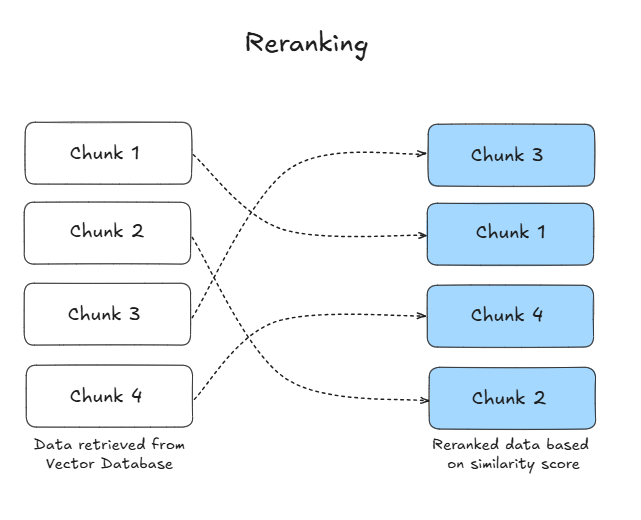

#1 - Prioritize Search Results With Reranking

Reranking is one of the most commonly used techniques in Retrieval-Augmented Generation (RAG) and is applied after the data is retrieved from the database. In this method, the retrieved data is re-ranked using a reranker model, which sorts the documents based on their relevance to the query.

Reranker models, such as Cohere’s Rerank 3, are specialized AI models that assess and prioritize the relevance of these retrieved documents in relation to a user’s query. Operating on a smaller set of candidate documents, these models focus on fine-tuning rankings based on the context of both the query and the documents. Typically trained on datasets containing examples of relevant and irrelevant documents, rerankers can effectively distinguish high-quality results from less relevant ones.

By integrating reranking into your RAG workflow, you enhance the accuracy and relevance of the information presented, ultimately leading to better responses.
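The two-stage shape of retrieve-then-rerank can be sketched as below. The `relevance_score` function is a hypothetical stand-in for a reranker model: it scores each query-document pair together, which is the key idea, but uses simple word overlap where a real reranker would use a trained model.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def relevance_score(query: str, document: str) -> float:
    """Hypothetical stand-in for a reranker model: fraction of query words in the document."""
    query_words = words(query)
    return len(query_words & words(document)) / len(query_words) if query_words else 0.0


def rerank(query: str, candidates: list[str], top_n: int = 2) -> list[str]:
    """Re-score the retrieved candidates against the query and keep the best top_n."""
    return sorted(candidates, key=lambda doc: relevance_score(query, doc), reverse=True)[:top_n]


# Candidates as they might come back from a first-stage retriever, in arbitrary order.
candidates = [
    "Our office is closed on public holidays.",
    "Refunds for damaged items are issued within 14 days.",
    "Damaged items can be returned for free.",
]
top = rerank("refunds for damaged items", candidates)
print(top)
```

Because the reranker only sees the short candidate list, it can afford a more expensive scoring method than the first-stage retriever.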

#2 - Optimize Search Results With Contextual Prompt Compression

You can use this method when you want to reduce the size of prompts while retaining the essential information needed for accurate retrieval. By compressing prompts, you streamline your system’s focus on the most relevant context. You also save costs by reducing compute requirements, or the number of tokens used during LLM API calls.

Contextual Prompt Compression is particularly useful when dealing with large datasets or when you need to maintain efficiency without sacrificing the accuracy of your responses.
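One simple form of compression is extractive: keep only the sentences that relate to the query, within a size budget. The sketch below assumes a word budget in place of a token budget and uses word overlap as the relevance signal; production approaches typically use a model to decide what to keep.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def compress_context(query: str, passages: list[str], max_words: int = 20) -> str:
    """Keep the sentences that overlap most with the query, within a word budget."""
    query_words = words(query)
    sentences = [
        s.strip()
        for passage in passages
        for s in re.split(r"(?<=[.!?])\s+", passage)
        if s.strip()
    ]
    scored = [(len(query_words & words(s)), s) for s in sentences]
    kept: set[str] = set()
    used = 0
    for score, sentence in sorted(scored, key=lambda pair: pair[0], reverse=True):
        length = len(sentence.split())
        if score > 0 and used + length <= max_words:
            kept.add(sentence)
            used += length
    # Restore the original order so the compressed context still reads naturally.
    return " ".join(s for s in sentences if s in kept)


passages = [
    "Our company was founded in 1999. Refunds are issued within 14 days of a return.",
    "The cafeteria serves lunch at noon. Refunds need the original receipt.",
]
compressed = compress_context("How are refunds issued?", passages)
print(compressed)
```

Here the prompt shrinks from four sentences to two, and the sentences about the company's history and the cafeteria never reach the LLM.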

#3 - Score and Filter Retrieved Documents With Corrective RAG

To refine your search results, you can employ Corrective Retrieval-Augmented Generation (Corrective RAG or CRAG). This technique involves scoring and filtering the retrieved documents based on their relevance and accuracy concerning your query.

CRAG introduces a lightweight retrieval evaluator that assesses the overall quality of the retrieved documents and produces a confidence score. That score triggers one of three knowledge retrieval actions: “Correct,” “Incorrect,” or “Ambiguous.” CRAG can also address the limitations of static corpora by falling back on web searches when the retrieved results are judged incorrect or ambiguous.

The correct chunks are then sent to the language model for rephrasing and presenting a response to the user. CRAG helps eliminate irrelevant data retrieved from the database.
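The decision logic can be sketched as follows. Everything here is illustrative: `evaluate` is a toy stand-in for the retrieval evaluator, `fake_web_search` is a hypothetical placeholder for a real web search, and the thresholds of 0.7 and 0.3 are assumptions chosen for the example.

```python
import re
from typing import Callable


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def evaluate(query: str, document: str) -> float:
    """Toy retrieval evaluator: fraction of query words found in the document."""
    query_words = words(query)
    return len(query_words & words(document)) / len(query_words) if query_words else 0.0


def corrective_retrieve(
    query: str,
    documents: list[str],
    web_search: Callable[[str], list[str]],
    upper: float = 0.7,
    lower: float = 0.3,
) -> tuple[str, list[str]]:
    """Score the retrieved documents, pick an action, and build the final context."""
    scores = [evaluate(query, d) for d in documents]
    best = max(scores, default=0.0)
    if best >= upper:
        action = "Correct"  # at least one document is clearly relevant
        context = [d for d, s in zip(documents, scores) if s >= upper]
    elif best < lower:
        action = "Incorrect"  # nothing relevant: discard and search the web
        context = web_search(query)
    else:
        action = "Ambiguous"  # keep what might help and add web results
        context = [d for d, s in zip(documents, scores) if s >= lower] + web_search(query)
    return action, context


def fake_web_search(query: str) -> list[str]:
    """Hypothetical placeholder for a real web search call."""
    return [f"web result for: {query}"]


documents = ["Refunds are issued within 14 days.", "The office is closed on Sundays."]
for query in ("refunds issued within days", "are refunds taxable", "parking permit cost"):
    print(query, "->", corrective_retrieve(query, documents, fake_web_search))
```

The three example queries exercise each branch in turn: a confident match, a partial match that is supplemented with web results, and a miss that is replaced by web results entirely.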

#4 - Query Expansion

Query Expansion is a powerful technique you can use to improve the relevance of your search results. By broadening your original query to include related terms, synonyms, or alternative phrases, you increase the chances of retrieving more comprehensive information.

This is especially helpful in situations where the initial query might be too narrow or specific. Query Expansion allows your system to cast a wider net during the retrieval step, ensuring that your search captures all pertinent information.

This approach enhances the accuracy and coverage of your retrieval process, leading to more informative and useful responses.
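A minimal sketch of synonym-based expansion, reusing the medical abbreviations from the hybrid search example. The `SYNONYMS` table is a hand-written assumption; in practice the alternatives might come from a domain thesaurus or be generated by an LLM.

```python
import re

# Hypothetical synonym table for illustration.
SYNONYMS = {
    "htn": ["hypertension", "high blood pressure"],
    "copd": ["chronic obstructive pulmonary disease"],
}


def expand_query(query: str) -> list[str]:
    """Return the original query plus variants with known terms replaced by synonyms."""
    variants = [query]
    for term, alternatives in SYNONYMS.items():
        pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
        if pattern.search(query):
            variants += [pattern.sub(alternative, query) for alternative in alternatives]
    return variants


print(expand_query("treatment for HTN"))
```

You would then run retrieval once per variant and merge the results, so that documents using any of the phrasings have a chance to be found.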

Generation Techniques

Generation techniques involve optimizing the LLM response. This includes training models to understand context, employing fine-tuning methods to customize responses for specific tasks, and applying feedback mechanisms to enhance output quality.

#1 - Tune Out Noise With Chain-of-Thought Prompting

You can enhance the accuracy of your AI responses by using Chain-of-Thought (CoT) Prompting. This technique involves guiding your system through a logical sequence of steps or “thoughts”, helping it focus on relevant information while filtering out noise. By following a chain of thought, your model can better understand the context and intent behind your query, leading to more precise and relevant results.

Chain-of-thought prompting typically involves providing your model with examples of how to approach a specific type of query, where you demonstrate the thought process and intermediate steps required to arrive at a satisfactory answer. This approach helps the model develop a more nuanced understanding of the information.

Chain-of-thought prompting is particularly effective when dealing with complex queries, where the LLM needs to reason to generate the final response. Frameworks like DSPy provide built-in support for chain-of-thought prompting.
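As a rough illustration of the few-shot approach, the sketch below assembles a prompt that contains one worked example with explicit reasoning, followed by the new context and question. The example content and the function name `build_cot_prompt` are invented for illustration; the resulting string would be sent to whichever LLM you use.

```python
# One worked example that demonstrates the reasoning steps, including
# ignoring a detail (free shipping) that is irrelevant to the question.
FEW_SHOT_EXAMPLE = """\
Context: Refunds are issued within 14 days. Orders over $50 ship free.
Question: I returned a $60 order 10 days ago. Should I have my refund yet?
Reasoning: The question is about refund timing, so the shipping rule is irrelevant. \
The context says refunds take up to 14 days. Only 10 days have passed, so the refund may still be in progress.
Answer: Not necessarily; refunds can take up to 14 days."""


def build_cot_prompt(context: str, question: str) -> str:
    """Assemble a few-shot chain-of-thought prompt ending where the model should start reasoning."""
    return (
        "Answer using only the context. Think step by step before answering.\n\n"
        f"{FEW_SHOT_EXAMPLE}\n\n"
        f"Context: {context}\n"
        f"Question: {question}\n"
        "Reasoning:"
    )


prompt = build_cot_prompt(
    context="Passwords must be rotated every 90 days. The office closes at six.",
    question="I changed my password 100 days ago. Do I need to change it again?",
)
print(prompt)
```

Ending the prompt with `Reasoning:` nudges the model to write out its intermediate steps before committing to an answer, mirroring the structure of the worked example.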

#2 - Make Your System Self-Reflective With Self-RAG

Self-RAG is an advanced technique that empowers your system to refine its own retrieval and generation process by iterating on its outputs. In Self-RAG, the model doesn’t just rely on the initial retrieval but actively re-evaluates and adjusts its approach by generating follow-up queries and responses. This iterative process allows the model to correct its own mistakes, fill in gaps, and enhance the quality of the final output.

You can think of Self-RAG as your model’s ability to self-correct and improve its answers. By generating an initial response, evaluating its accuracy and relevance, and then adjusting the retrieval process accordingly, the model can produce more nuanced and accurate answers. This approach is particularly useful in scenarios where a single round of retrieval might not be sufficient to provide the best possible answer. With Self-RAG, you enable your system to be more adaptive and precise.

#3 - Fine-Tuning LLMs

Fine-tuning your model is a powerful way to ensure it adapts to the specific vocabulary and nuances of your domain, focusing on what truly matters in your data. This process involves taking a pre-trained language model and training it further on a smaller, domain-specific dataset, allowing the model to prioritize relevant information and filter out noise. By exposing the model to examples of the types of queries and responses you expect, it learns the subtle distinctions and context that are unique to your particular use case.

You can fine-tune your model using Parameter-Efficient Fine-Tuning (PEFT) techniques such as Low-Rank Adaptation (LoRA) and Quantized LoRA (QLoRA). These methods allow you to adapt large models efficiently without retraining the entire model from scratch. Additionally, low-code tools like LlamaFactory or Axolotl make the fine-tuning process more accessible, even if you don’t have deep technical expertise.

When you fine-tune your model, you’re essentially refining its understanding of your specific data, which is particularly useful in specialized fields where domain-specific knowledge is critical and might not be fully covered by the original training data. This fine-tuning enhances the model’s precision, ensuring it generates responses that are highly relevant to the context of your queries.

#4 - Use Natural Language Inference to Make LLMs Robust Against Irrelevant Context

To enhance the robustness of your RAG system against irrelevant context, it’s important to ensure that retrieved information improves performance when relevant and doesn’t harm it when irrelevant. Recent research highlights that noisy retrieval, especially in multi-hop reasoning, can cause cascading errors.

One effective strategy is to use a Natural Language Inference (NLI) model to filter out irrelevant passages. The NLI model assesses whether the retrieved context supports the question-answer pairs, helping your system ignore unhelpful information.

Additionally, training RAG models with a mix of relevant and irrelevant contexts can fine-tune them to better discern useful information from noise. You can use DSPy, for instance, as it has auto-optimizers that help fine-tune LM responses based on a dataset that you provide.

Implementing Advanced RAG

Implementing advanced Retrieval-Augmented Generation (RAG) techniques can significantly enhance the performance and accuracy of your AI applications. To make this process easier, you can leverage tools and libraries like LangChain, LlamaIndex, and DSPy, which offer powerful modules to help you integrate these advanced RAG strategies into your workflows.

The methods discussed in this article focus on three key areas:

- Data Pre-Processing: Ensuring the quality of your data at the ingestion stage.

- Retrieval Stage: Improving the retrieval process by using contextually relevant data.

- Generation Stage: Refining the generation step by ensuring accurate retrieval and fine-tuning your LLM.

To achieve these objectives, your data must be clean, well-structured, and stored in a system capable of precise searches. Traditional vector embeddings and semantic searches may not always suffice for this task.

This is where knowledge graphs and GraphRAG come into play as the future paradigm for building RAG applications. With GraphRAG:

- You convert your data into a structured knowledge graph, creating a high-quality, information-dense system.

- You structure your queries as graph queries, which allows for more efficient retrieval and reasoning.

- Your LLM can then reason over the underlying graph to deliver more accurate and contextually rich responses.

GraphRAG systems are inherently information-dense and hierarchical, and they use structured queries, which makes it easier for you to incorporate many of the advanced techniques discussed. Additionally, the ability to visualize your underlying data and make adjustments can further enhance accuracy and overall system performance.

How Can FalkorDB Help with Advanced RAG Optimization?

FalkorDB is a specialized low-latency store designed to optimize the storage, retrieval, and processing of data. It is ideal for implementing advanced RAG (Retrieval-Augmented Generation) techniques because it supports both Cypher queries and semantic searches.

Here’s why you should explore FalkorDB for implementing advanced RAG techniques:

- Knowledge Graph Support: FalkorDB excels in organizing data into knowledge graphs, an approach that aligns with advanced RAG methods, such as hierarchical indexing, graph search, and hybrid search. You can read our article on knowledge graph vs vector database to understand why FalkorDB provides a powerful new way to build your retrieval step.

- Seamless Integration with LLMs: FalkorDB is built to integrate seamlessly with LLMs, and can be easily used to build advanced RAG techniques like self-query retrieval and query expansion. This integration enables you to leverage both structured and unstructured data effectively, facilitating more sophisticated query handling and response generation. Whether you’re using a knowledge graph, semantic search, or both, FalkorDB ensures that your LLM can utilize the most relevant data quickly and accurately.

- Support for GraphRAG: With FalkorDB, you can easily implement GraphRAG techniques by converting your data into structured knowledge graphs. This capability allows you to create a high-quality, information-dense system that enhances retrieval accuracy and efficiency. FalkorDB’s robust query capabilities, including support for Cypher queries, make it easier for you to navigate complex data relationships and retrieve information that is contextually relevant to your queries.

- Low-Latency and Scalability: FalkorDB is optimized for handling large-scale datasets, making it an excellent choice for advanced RAG applications that require multi-step retrieval. Its low-latency responses and ability to scale efficiently ensure that your system remains responsive even as your data grows in size and complexity. This is particularly important for techniques involving multi-hop reasoning or large, diverse datasets, where quick retrieval and processing are critical.

FalkorDB is a powerful tool that can help you incorporate advanced RAG techniques.

Next steps

Advanced RAG techniques are crucial for overcoming the challenges of Naive RAG, such as inaccurate results, slow response times, and higher costs when dealing with complex queries and large datasets. By incorporating these advanced methods, you can significantly improve the performance, accuracy, and relevance of your AI-driven applications.

In this article, we’ve explored various advanced RAG techniques, including data pre-processing, optimized retrieval methods, and sophisticated generation strategies. Each of these approaches is key to ensuring that your RAG system is both efficient and capable of delivering contextually accurate responses. Whether it’s enhancing data quality before retrieval, fine-tuning queries, or implementing innovative approaches like GraphRAG, these strategies collectively help you build more robust and effective AI systems.

Tools and platforms like FalkorDB provide the necessary infrastructure to seamlessly implement these techniques. With FalkorDB’s support for knowledge graphs, integration with LLMs, and scalability, you have a powerful solution to optimize your RAG workflows.

To start implementing advanced RAG techniques with FalkorDB, launch it using a single-line Docker command or sign up for FalkorDB Cloud. You can then use the Python client or any of the other available clients to start building.