Highlights

- Implementing agentic memory with FalkorDB and LangChain allows AI agents to retain information, adapt responses, and provide more personalized outputs across interactions.

- FalkorDB integration with LangChain simplifies building AI agents with memory, combining graph database power with LLM capabilities for context-aware applications.

- GraphRAG, powered by FalkorDB, can outperform traditional vector stores at capturing complex relationships, enhancing AI agents' ability to understand and use context.

LangChain now integrates with FalkorDB, enabling graph database capabilities for AI-driven applications. This combination facilitates the creation of AI agents with memory, enhancing their ability to retain state and context across interactions.

Using Agentic Memory to Expand AI Capabilities

Agentic memory allows AI systems to:

- Retain information from past interactions

- Adapt responses based on accumulated knowledge

- Provide more personalized and context-aware outputs

This functionality extends AI applications beyond single-task execution, enabling more sophisticated and nuanced interactions.

Implementation with LangChain and FalkorDB

To create AI agents with memory using this stack:

- Set up LangChain and FalkorDB environments

- Define knowledge graph schema in FalkorDB

- Implement LangChain agents with FalkorDB as the memory store

- Configure retrieval and storage mechanisms for agent-database interaction

What is Agentic Memory?

Large language models (LLMs) are stateless: they do not remember previous interactions within a conversation unless that context is explicitly included in the input. Each response is generated solely from the current input, and producing it does not change the model’s weights or internal state. This lack of intrinsic memory necessitates external mechanisms, such as session-based context windows or agent memory frameworks, to ensure continuity and coherence in multi-turn conversations or sequential tasks.
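To make the statelessness concrete, here is a minimal plain-Python sketch. `toy_model` is a hypothetical stand-in for an LLM call, and no real model or API is involved. It can only use the messages passed to it, so any continuity across turns has to come from the caller resending the transcript.

```python
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def toy_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for a stateless LLM: it sees only `messages`."""
    last = messages[-1]["content"].lower()
    if "what is my name" in last:
        # Look for an earlier introduction among the messages supplied with this call
        for m in messages[:-1]:
            text = m["content"]
            if m["role"] == "user" and text.lower().startswith("my name is "):
                return "Your name is " + text[len("my name is "):].strip(". ")
        return "I don't know your name."
    return "Noted."


class ExternalMemory:
    """Keeps the transcript outside the model and resends it on every call."""

    def __init__(self) -> None:
        self.history: List[Message] = []

    def chat(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = toy_model(self.history)  # the full history is passed on each turn
        self.history.append({"role": "assistant", "content": reply})
        return reply


# Without memory: the second call cannot see the first one
toy_model([{"role": "user", "content": "My name is Ada."}])
print(toy_model([{"role": "user", "content": "What is my name?"}]))  # I don't know your name.

# With external memory: the earlier turn is part of the input
memory = ExternalMemory()
memory.chat("My name is Ada.")
print(memory.chat("What is my name?"))  # Your name is Ada
```

Agent memory frameworks follow the same idea. They persist the relevant history outside the model and inject it into each new request.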

Agentic memory refers to an AI agent’s ability to retain, recall, and utilize past interactions, context, or knowledge as it performs tasks and makes decisions. This capability is essential for creating AI systems that act consistently, adapt intelligently, and deliver personalized experiences over time. Without agentic memory, AI applications remain stateless, requiring users or developers to repeatedly provide the same context—reducing efficiency and limiting the system’s overall usefulness.

Integrating memory into AI applications allows agents to track ongoing conversations, learn user preferences, remember historical data, and adapt to dynamic environments while maintaining coherent, context-aware responses. This capability unlocks a broad spectrum of applications, from personalized customer service bots and advanced virtual assistants to sophisticated problem-solving agents in fields such as healthcare, education, and research. Agentic memory is a foundational step toward AI systems that are truly adaptive, context-aware, and capable of long-term autonomy.

Significance of Graph Databases for LLM-Powered Applications

Hallucination is a common problem with LLMs today: a model generates information that appears plausible but is incorrect, misleading, or completely fabricated. Retrieval-Augmented Generation (commonly called RAG) is an effective way to counter this issue by supplying relevant context along with the query. Content returned by a retrieval system is added to the LLM’s prompt, so the model can ground its response in retrieved facts instead of relying only on what it learned during training.
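The augmentation step itself is mostly prompt assembly. The sketch below illustrates that step and is not a specific library's API. `build_rag_prompt` is a hypothetical helper that places the retrieved chunks ahead of the question and tells the model to rely on them.

```python
from typing import List


def build_rag_prompt(question: str, retrieved_chunks: List[str]) -> str:
    """Combine retrieved context and the user question into one grounded prompt."""
    context = "\n".join(f"- {chunk}" for chunk in retrieved_chunks)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


chunks = [
    "Robert De Niro, born on August 17, 1943, starred in 'The Godfather: Part II'.",
    "Al Pacino starred in 'The Godfather' and 'The Godfather: Part II'.",
]
print(build_rag_prompt("Which movie did Robert De Niro star in?", chunks))
```

The quality of the answer now depends heavily on which chunks the retriever returns. The following sections focus on that part.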

Retrieval Using Semantic Search

There are various approaches to retrieval, with semantic search being one of the most prevalent. In semantic search, the retriever identifies content from the vector database that is semantically similar to the query and likely to be relevant. This is achieved by encoding both the query and the database content into vector embeddings, then using similarity search algorithms to determine the closest matches based on their positions in the vector space.
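The similarity search step can be sketched in a few lines of plain Python. The three-dimensional vectors below are hypothetical, hand-picked stand-ins for real embeddings; embedding models typically produce hundreds or thousands of dimensions. A production system would also use an approximate nearest-neighbor index rather than scoring every document.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force semantic search: score every document and return the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings for three documents
index = {
    "supply_chain_report": [0.9, 0.1, 0.0],
    "policy_update_2020": [0.7, 0.6, 0.1],
    "movie_reviews": [0.0, 0.1, 0.95],
}
query_embedding = [0.8, 0.4, 0.0]  # hypothetical embedding of the user's query

for doc_id, score in top_k(query_embedding, index):
    print(f"{doc_id}: {score:.3f}")
```

Each document is ranked on its own. Nothing in this step joins facts that live in different documents.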

Why Semantic Search Often Fails

However, semantic search often struggles to capture the complex relationships and interconnections between different pieces of information. Consider the following query:

“What was the impact of policy changes on the supply chain performance of Company X in 2020?”

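For a query like this, vector similarity-based RAG is likely to retrieve incomplete or irrelevant data. It looks for semantic matches within isolated documents, but the answer depends on connecting separate facts: which policies changed in 2020, which supply chain metrics they affected, and which of those metrics belong to Company X. Scenarios like this call for a more structured approach to retrieval.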

Why Knowledge Graphs Fit Perfectly

Graph databases offer a powerful solution to this challenge by storing data in a network of nodes and edges that mirror how information is naturally connected. When integrated with RAG systems (GraphRAG), graph databases allow relationships to be explicitly mapped and analyzed, enabling the retrieval of not just directly relevant documents but also related concepts, prerequisites, and downstream implications that provide crucial context for the LLM.

Many graph databases, including FalkorDB, use the Cypher query language to traverse knowledge graphs. Assuming a graph with Policy, SupplyChainMetric, and Company nodes, a corresponding Cypher query for the example above could look like this:

```cypher
MATCH (policy:Policy)-[:AFFECTED]->(metric:SupplyChainMetric),
      (company:Company {name: "Company X"})-[:HAS_METRIC]->(metric)
WHERE policy.year = 2020
RETURN policy.name AS Policy, metric.name AS Metric, metric.value AS Value
```

Here is an outline of what the query does:

- Matches policy changes (Policy nodes) that directly affect supply chain metrics (SupplyChainMetric nodes).

- Keeps only the metrics that belong to “Company X”, and only the policies from 2020.

- Returns the policy names, the affected metrics, and their values for deeper analysis.
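The sketch below emulates the pattern match in plain Python over a tiny in-memory graph, to show what the query computes. The nodes, edges, and values are hypothetical. The code only mirrors the query's logic and is not how FalkorDB executes Cypher.

```python
from typing import Dict, List, Tuple

# Hypothetical toy graph using the same labels and relationship types as the query
nodes: Dict[str, dict] = {
    "p1": {"label": "Policy", "name": "Tariff Revision", "year": 2020},
    "p2": {"label": "Policy", "name": "Export Subsidy", "year": 2019},
    "m1": {"label": "SupplyChainMetric", "name": "Lead Time (days)", "value": 42},
    "m2": {"label": "SupplyChainMetric", "name": "On-Time Delivery (%)", "value": 88},
    "c1": {"label": "Company", "name": "Company X"},
    "c2": {"label": "Company", "name": "Company Y"},
}
edges: List[Tuple[str, str, str]] = [  # (source, relationship, target)
    ("p1", "AFFECTED", "m1"),
    ("p2", "AFFECTED", "m2"),
    ("c1", "HAS_METRIC", "m1"),
    ("c1", "HAS_METRIC", "m2"),
    ("c2", "HAS_METRIC", "m2"),
]


def policy_impact(company: str, year: int) -> List[Tuple[str, str, int]]:
    # (company:Company {name: ...})-[:HAS_METRIC]->(metric)
    company_ids = {i for i, n in nodes.items() if n["label"] == "Company" and n["name"] == company}
    metric_ids = {dst for src, rel, dst in edges if rel == "HAS_METRIC" and src in company_ids}
    rows = []
    # (policy:Policy)-[:AFFECTED]->(metric:SupplyChainMetric) WHERE policy.year = year
    for src, rel, dst in edges:
        policy, metric = nodes[src], nodes[dst]
        if (rel == "AFFECTED" and dst in metric_ids
                and policy["label"] == "Policy" and policy["year"] == year
                and metric["label"] == "SupplyChainMetric"):
            rows.append((policy["name"], metric["name"], metric["value"]))
    return rows


print(policy_impact("Company X", 2020))  # [('Tariff Revision', 'Lead Time (days)', 42)]
```

The answer comes from joining two relationship paths on the shared metric node. That join is exactly what a similarity ranking over isolated chunks does not perform.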

This lets GraphRAG connect facts that would otherwise remain scattered across separate chunks in a vector database.

In other words, graph databases store knowledge in a structured form that can be queried along its relationships. This makes retrieval more accurate when answering a query requires exploring connections between facts.

But what if you wanted to combine semantic search (for retrieving similar data points) with graph queries (for fetching structured data) when building your AI applications? This is where FalkorDB shines—the graph database we will use to build the agentic AI system below.

FalkorDB's Support for Agentic AI

FalkorDB is a low-latency graph database optimized for knowledge graph-powered applications. Its architecture combines in-memory processing with efficient memory utilization and is designed to deliver faster query execution and lower latency than other graph databases.

FalkorDB also integrates with AI frameworks such as LangChain, which makes it easier to use as the data layer of AI applications. Its key capabilities include:

- Supports Knowledge Graph and Vector Search: FalkorDB stores and queries graph relationships and vector embeddings in the same database. This eliminates the need for multiple specialized databases, simplifying data architectures.

- Advanced Query Processing: The system employs algorithms to optimize queries that involve both graph connections and vector similarities, ensuring efficient execution of complex data retrievals.

- Robust Scalability: FalkorDB maintains rapid response times even as data volumes expand, making it suitable for evolving data needs and streaming data.

- Streamlined Operations: By combining graph and vector functionalities, FalkorDB reduces the complexity associated with managing and synchronizing separate database systems.
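Here is a rough illustration of how the two retrieval styles can work together. The sketch uses vector similarity to pick entry nodes and then expands along graph edges to pull in connected facts. Everything in it is hypothetical: plain Python, toy two-dimensional embeddings, and a small edge list based on the movie dataset used later in this article. It shows the general GraphRAG pattern, not FalkorDB's query engine or API.

```python
import math
from typing import Dict, List, Set, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def hybrid_retrieve(query: List[float], node_vectors: Dict[str, List[float]],
                    edges: List[Tuple[str, str]], k: int = 1, hops: int = 1) -> Set[str]:
    # Step 1 (vector search): the k nodes most similar to the query become seeds
    ranked = sorted(
        node_vectors,
        key=lambda n: cosine_similarity(query, node_vectors[n]),
        reverse=True,
    )
    seen: Set[str] = set(ranked[:k])
    frontier = set(seen)
    # Step 2 (graph traversal): follow edges in either direction for `hops` steps
    for _ in range(hops):
        neighbors = {b for a, b in edges if a in frontier} | {a for a, b in edges if b in frontier}
        frontier = neighbors - seen
        seen |= frontier
    return seen


# Hypothetical embeddings for movie nodes, plus STARRED_IN edges as (actor, movie)
node_vectors = {"Top Gun": [0.9, 0.1], "The Godfather": [0.1, 0.9]}
edges = [("Tom Cruise", "Top Gun"), ("Val Kilmer", "Top Gun"), ("Al Pacino", "The Godfather")]

print(sorted(hybrid_retrieve([1.0, 0.0], node_vectors, edges)))
# ['Tom Cruise', 'Top Gun', 'Val Kilmer']
```

A pure vector search here would stop at the "Top Gun" node. The graph hop adds the connected cast members.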

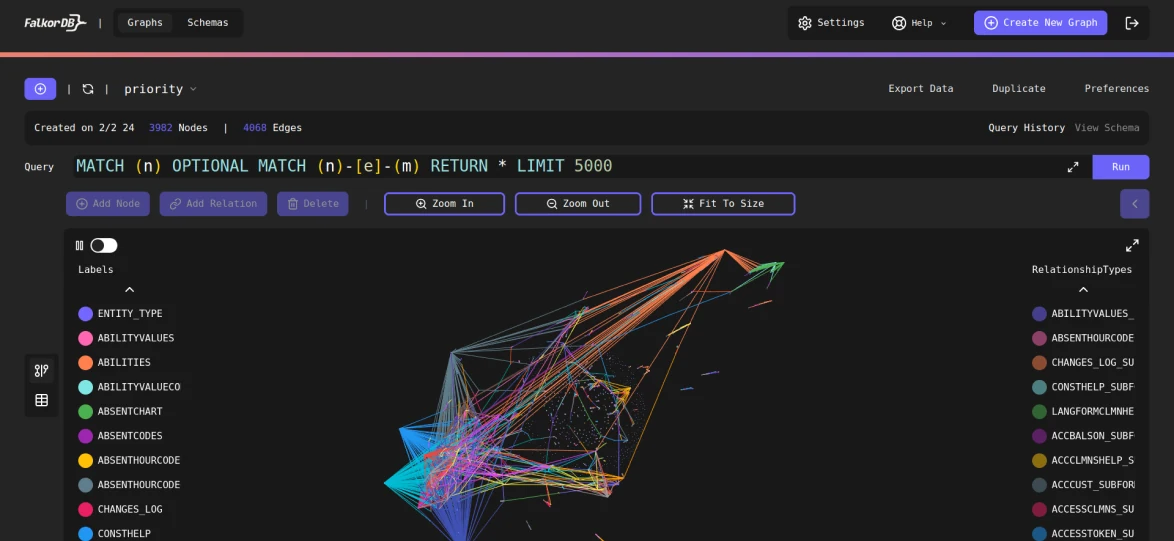

FalkorDB ships with a built-in graph browser, which lets you explore your data visually and helps you build explainable AI solutions.

LangChain Integration: Building Knowledge Graph-Powered AI

Integrating LangChain with FalkorDB has made it remarkably straightforward to build knowledge graphs. LangChain provides a vast collection of document loaders and chunking algorithms that you can now use to efficiently process and add data to your FalkorDB knowledge graph. This integration simplifies the combination of structured and unstructured data in your FalkorDB projects.

The integration also simplifies building AI chat applications powered by a graph database, which we will explore further in this article.

Optimizing AI-Driven Query Processing

A key advantage of this integration is that you don’t need to write Cypher queries manually. LangChain uses an LLM, such as OpenAI’s GPT-4o or one of Google’s Gemini models, to translate natural language questions into Cypher. It then runs the generated query against the graph and returns the results in a single step.

The code snippet below demonstrates how easy it is to query the graph using LangChain:

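```python
from langchain_community.chains.graph_qa.falkordb import FalkorDBQAChain

# `llm` is any LangChain chat model and `graph` is a FalkorDBGraph instance;
# both are created step by step later in this article.
chain = FalkorDBQAChain.from_llm(
    llm=llm,
    graph=graph,
    verbose=True,
    allow_dangerous_requests=True,  # required opt-in: the chain runs LLM-generated Cypher
)

input_user_query = "Who played in Top Gun?"
response = chain.invoke({"query": input_user_query})["result"]
```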
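Conceptually, a graph QA chain such as FalkorDBQAChain performs three steps. An LLM turns the question and the graph schema into Cypher, the database runs that query, and an LLM phrases an answer from the returned rows. The sketch below reproduces that flow in plain Python, with hypothetical stub functions in place of the LLM and the database so it runs without any services. It is not LangChain's implementation.

```python
from typing import Callable, List

Rows = List[list]


def graph_qa(question: str, schema: str,
             generate_cypher: Callable[[str, str], str],
             run_query: Callable[[str], Rows],
             answer: Callable[[str, Rows], str]) -> str:
    cypher = generate_cypher(schema, question)  # 1. LLM: schema + question -> Cypher
    rows = run_query(cypher)                    # 2. database executes the query
    return answer(question, rows)               # 3. LLM: question + rows -> answer


# Hypothetical stubs standing in for the LLM and FalkorDB
def fake_generate_cypher(schema: str, question: str) -> str:
    return 'MATCH (p:Person)-[:STARRED_IN]->(m:Movie) WHERE m.id = "Top Gun" RETURN p.id'


def fake_run_query(cypher: str) -> Rows:
    return [["Tom Cruise"], ["Val Kilmer"], ["Anthony Edwards"], ["Meg Ryan"]]


def fake_answer(question: str, rows: Rows) -> str:
    return "Cast: " + ", ".join(row[0] for row in rows)


schema = "(:Person {id})-[:STARRED_IN]->(:Movie {id})"
print(graph_qa("Who played in Top Gun?", schema,
               fake_generate_cypher, fake_run_query, fake_answer))
# Cast: Tom Cruise, Val Kilmer, Anthony Edwards, Meg Ryan
```

Step 1 is produced by an LLM, so the generated Cypher can be wrong. Inspecting it, for example through the chain's verbose output, is worthwhile while developing.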

Opportunities for Developers and Data Scientists

The integration of LangChain with FalkorDB simplifies workflows for both developers and data scientists.

For Software Developers

FalkorDB empowers developers with a cutting-edge, ultra-low-latency graph database solution tailored for building powerful AI-driven applications.

With low-latency query execution and support for multiple graphs within a single instance, FalkorDB streamlines the development of advanced AI systems, including AI agents, Retrieval-Augmented Generation (RAG) workflows, and other context-aware applications. The database can scale across clusters to support large-scale deployments.

Additionally, developers can leverage its built-in vector indexing and semantic search capabilities, combining the strengths of graph databases with modern AI integrations. With seamless LangChain integration, transitioning from existing databases like Neo4j to FalkorDB is straightforward, requiring minimal code changes and accelerating the development process.

For Data Scientists

FalkorDB provides data scientists with powerful tools for exploring and understanding complex, interconnected datasets.

The FalkorDB Browser enables detailed, interactive visualization of knowledge graphs, making it easy to navigate and analyze relationships within data. With full property graph support and compatibility with openCypher for complex query writing, FalkorDB improves data modeling and enhances analytical workflows.

Additionally, its ability to integrate semantic search and vector indexing allows data scientists to unlock deeper insights and patterns, going beyond traditional graph database functionalities. Whether you’re enhancing predictive models, refining data exploration processes, or developing innovative solutions, FalkorDB ensures a seamless and productive experience.

Building a Context-Aware Chatbot with Chat Message History

To further explore this integration, let’s build a context-aware chatbot that maintains chat message history using FalkorDB and LangChain.

To begin, you will need to set up FalkorDB locally or in the cloud.

Environment Setup

To set up FalkorDB on-premise or on a cloud server, ensure that Docker is installed. Then, run the following command to start FalkorDB:

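```shell
docker run -p 6379:6379 -p 3000:3000 -it --rm falkordb/falkordb:edge
```

Port 6379 accepts client connections, and port 3000 serves the FalkorDB Browser at http://localhost:3000. Because of `--rm`, the container and any data stored inside it are removed when it stops.

Alternatively, you can sign up for FalkorDB Cloud and launch an instance there. This article uses the Docker setup.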

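Next, create and activate a Python virtual environment, then install Jupyter Lab and launch it:

```shell
python -m venv .venv
source .venv/bin/activate   # on Windows: .venv\Scripts\activate
pip install jupyterlab
jupyter lab
```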

Once in the Jupyter environment, install the following libraries:

```python
%pip install -q falkordb langchain-community langchain-experimental langchain-openai langchain-falkordb
```

Connecting to FalkorDB

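Once FalkorDB is running, connect to it from Python. The LangChain components in this tutorial use the FalkorDBGraph wrapper, so pick the local or cloud option that matches your deployment.

```python
import os
from types import SimpleNamespace
from uuid import uuid4

from falkordb import FalkorDB
from langchain_community.graphs.falkordb_graph import FalkorDBGraph

# API keys: in Google Colab, read them from the Secrets panel via google.colab.userdata.
# Elsewhere (e.g. local Jupyter Lab), fall back to environment variables with the same .get() interface.
try:
    from google.colab import userdata
except ImportError:
    userdata = SimpleNamespace(get=os.environ.get)

# Option 1: FalkorDB running in Docker on localhost
graph = FalkorDBGraph(
    database="MyGraph",
    host="localhost",
    port=6379,
)

# Option 2: FalkorDB Cloud (uncomment and fill in your instance details)
# graph = FalkorDBGraph(
#     database="MyGraph",
#     host="xxxx.cloud",
#     port=52780,
#     username="your_username",
#     password="your_password",
# )

# The native falkordb client can run Cypher directly, but select_graph() returns a
# plain falkordb Graph, not the FalkorDBGraph wrapper that the LangChain steps below expect.
client = FalkorDB(host="localhost", port=6379)
native_graph = client.select_graph("MyGraph")
```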

Knowledge Graph Setup

To enable knowledge retrieval in the GraphRAG pipeline, we first need to populate the graph database. For simplicity, we use a single small chunk of text:

```python
# Define the data
text = """
Al Pacino, born on April 25, 1940, is an actor known for starring in movies such as 'The Godfather', 'The Godfather: Part II', and 'The Godfather Coda: The Death of Michael Corleone'.
Robert De Niro, born on August 17, 1943, also starred in 'The Godfather: Part II'.
Tom Cruise, born on July 3, 1962, is known for his role in 'Top Gun', alongside Val Kilmer, Anthony Edwards, and Meg Ryan.
Val Kilmer, born on December 31, 1959, appeared in 'Top Gun'.
Anthony Edwards, born on July 19, 1962, was part of the cast of 'Top Gun'.
Meg Ryan, born on November 19, 1961, also starred in 'Top Gun'.
The movies include:
- 'The Godfather' (featuring Al Pacino)
- 'The Godfather: Part II' (featuring Al Pacino and Robert De Niro)
- 'The Godfather Coda: The Death of Michael Corleone' (featuring Al Pacino)
- 'Top Gun' (featuring Tom Cruise, Val Kilmer, Anthony Edwards, and Meg Ryan).
"""
```

In practice, your dataset will likely be much larger and can be in any format suitable for your domain.
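With larger inputs, you would typically split the text into chunks before extraction, since each chunk is sent to the LLM separately. In practice LangChain's text splitters handle this. The `chunk_lines` helper below is a hypothetical, simplified stand-in in plain Python. It greedily packs whole lines into chunks of at most `max_chars` characters, and a single line longer than that becomes its own chunk.

```python
from typing import List


def chunk_lines(text: str, max_chars: int = 1000) -> List[str]:
    """Greedily group non-empty lines into chunks no longer than max_chars.

    A single line longer than max_chars is kept whole as its own chunk.
    """
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    chunks: List[str] = []
    current: List[str] = []
    for line in lines:
        candidate = "\n".join(current + [line])
        if current and len(candidate) > max_chars:
            chunks.append("\n".join(current))  # close the current chunk
            current = [line]
        else:
            current.append(line)
    if current:
        chunks.append("\n".join(current))
    return chunks


sample = (
    "Tom Cruise starred in 'Top Gun'.\n"
    "Val Kilmer appeared in 'Top Gun'.\n"
    "Meg Ryan also starred in 'Top Gun'."
)
for chunk in chunk_lines(sample, max_chars=70):
    print(repr(chunk))
```

You could then wrap each chunk in its own LangChain Document, e.g. `[Document(page_content=c) for c in chunk_lines(text)]`, before converting the chunks to graph documents.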

Next, define the LLM to use. You can choose any capable model, such as OpenAI’s GPT-4o or Google’s Gemini 1.5 Pro.

For this tutorial, we use GPT-4 Turbo for building the graph, for generating the Cypher that retrieves context, and as the chat model.

First, we create a helper_llm and a chat_llm from OpenAI. The LLMGraphTransformer class then uses the helper LLM to extract entities (nodes) and relationships from raw text and return them as graph documents.

```python
from langchain_openai import ChatOpenAI
from langchain_experimental.graph_transformers import LLMGraphTransformer

# LLM used for graph extraction and Cypher generation
helper_llm = ChatOpenAI(
    temperature=0,
    model_name="gpt-4-turbo",
    api_key=userdata.get("OPENAI_API_KEY"),
)

# LLM used for the conversational replies
chat_llm = ChatOpenAI(
    temperature=0,
    model_name="gpt-4-turbo",
    api_key=userdata.get("OPENAI_API_KEY"),
)

# Extracts nodes and relationships from text using the helper LLM
graph_transformer = LLMGraphTransformer(llm=helper_llm)
```

Next, wrap the raw text in a list containing a single Document object from langchain_core.documents, which is the format LangChain’s document-handling workflows expect. The graph transformer converts these documents into graph documents, which we then add to the graph database:

```python
from langchain_core.documents import Document

# Wrap the raw text in a LangChain Document
documents = [Document(page_content=text)]

# Use the helper LLM to extract nodes and relationships from the text
graph_documents = graph_transformer.convert_to_graph_documents(documents)

if graph_documents:
    graph.add_graph_documents(graph_documents)
    print("Graph inserted")
else:
    print("Error: no graph documents were extracted from the input.")
```
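It can help to picture what adding graph documents amounts to. The sketch below is a simplified, hypothetical illustration, not FalkorDBGraph's actual implementation, and it models nodes and relationships with small dataclasses rather than LangChain's own classes. It turns extracted nodes and relationships into parameterized Cypher `MERGE` statements. `MERGE` matches an existing pattern or creates it if absent, so re-running the insertion does not duplicate data. Cypher does not allow labels and relationship types as query parameters, so the sketch validates them before interpolating.

```python
import re
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple

IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


@dataclass(frozen=True)
class Node:
    id: str
    type: str  # used as the node label, e.g. "Person"


@dataclass(frozen=True)
class Relationship:
    source: Node
    target: Node
    type: str  # used as the relationship type, e.g. "STARRED_IN"


def safe_identifier(name: str) -> str:
    """Labels and relationship types can't be query parameters, so allow only plain identifiers."""
    if not IDENTIFIER.fullmatch(name):
        raise ValueError(f"Unsafe label or relationship type: {name!r}")
    return name


def to_merge_statements(nodes: List[Node], rels: List[Relationship]) -> List[Tuple[str, Dict[str, Any]]]:
    """Build (query, params) pairs; MERGE reuses matching data instead of duplicating it."""
    statements: List[Tuple[str, Dict[str, Any]]] = []
    for n in nodes:
        statements.append((f"MERGE (n:{safe_identifier(n.type)} {{id: $id}})", {"id": n.id}))
    for r in rels:
        query = (
            f"MATCH (a:{safe_identifier(r.source.type)} {{id: $src}}), "
            f"(b:{safe_identifier(r.target.type)} {{id: $dst}}) "
            f"MERGE (a)-[:{safe_identifier(r.type)}]->(b)"
        )
        statements.append((query, {"src": r.source.id, "dst": r.target.id}))
    return statements


pacino = Node("Al Pacino", "Person")
godfather = Node("The Godfather", "Movie")
rels = [Relationship(pacino, godfather, "STARRED_IN")]
for query, params in to_merge_statements([pacino, godfather], rels):
    print(query, params)
```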

You can use this utility to create a knowledge graph from a wide range of datasets.

Next, we need to create the retrieval and generation chain in LangChain. Below, we will show how to set up both.

Creating the Retrieval Chain

Create the retrieval chain as follows. The data it retrieves from the graph grounds the chat model with contextual information, which reduces the chance that the LLM hallucinates during generation.

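```python
from langchain_community.chains.graph_qa.falkordb import FalkorDBQAChain

# Load the current graph schema so the LLM can generate valid Cypher
graph.refresh_schema()

context_chain = FalkorDBQAChain.from_llm(
    helper_llm,
    graph=graph,
    verbose=True,
    # Required opt-in: the chain runs LLM-generated Cypher against your database,
    # so connect with credentials scoped to what the chain should be allowed to do
    allow_dangerous_requests=True,
)
```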
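`allow_dangerous_requests=True` is an explicit opt-in because the chain executes Cypher written by an LLM. The main safeguard is a database user whose permissions are limited to what the chain needs. You could also screen generated queries before running them as an extra, deliberately naive layer. The hypothetical `is_read_only` helper below is plain Python and not part of LangChain or FalkorDB. It rejects queries that contain common write clauses. A keyword filter like this can produce false positives and is no substitute for access control.

```python
import re

# Clauses that modify data or schema (a naive, keyword-based check)
WRITE_CLAUSE = re.compile(r"\b(CREATE|MERGE|DELETE|DETACH|SET|REMOVE|DROP)\b", re.IGNORECASE)
# Single- or double-quoted string literals, so values like "Set It Up" are ignored
STRING_LITERAL = re.compile(r"'(?:[^'\\]|\\.)*'" + r'|"(?:[^"\\]|\\.)*"')


def is_read_only(cypher: str) -> bool:
    """Return True if no write clause appears outside string literals."""
    stripped = STRING_LITERAL.sub("''", cypher)
    return WRITE_CLAUSE.search(stripped) is None


generated = 'MATCH (p:Person)-[:STARRED_IN]->(m:Movie) WHERE p.id = "Robert De Niro" RETURN m.id'
print(is_read_only(generated))                    # True
print(is_read_only("MATCH (n) DETACH DELETE n"))  # False
```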

Creating the LLM Conversation Chain

Next, let’s build the chain for the chat interface. We’ll use a unique session ID to identify each conversation. You can generate a random UUID with uuid4() from Python’s uuid module:

```python
from uuid import uuid4

SESSION_ID = str(uuid4())
```

Let’s also add a prompt template that passes the retrieved context and the chat history to the LLM. Adapt the system message to your own domain and data:

```python
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

prompt = ChatPromptTemplate.from_messages([
    (
        "system",
        """
You are a meticulous research scientist analyzing laboratory conditions.
Respond using scientific terminology and maintain a professional, analytical tone.
Current laboratory measurements:
{context}
""",
    ),
    MessagesPlaceholder(variable_name="chat_history"),
    ("human", "{question}"),
])
```

Now you can set up the chat workflow chain in LangChain in the following way:

```python
from langchain_core.output_parsers import StrOutputParser

# prompt -> chat model -> plain string
chat_chain = prompt | chat_llm | StrOutputParser()
```

Adding Memory to the Agent

To add memory to the chatbot, we will need to define a memory component and a function that returns it whenever required by the chain.

```python
from langchain_falkordb.message_history import (
    FalkorDBChatMessageHistory,
)


def get_memory(session_id):
    # Returns the FalkorDB-backed message history for this session
    return FalkorDBChatMessageHistory(session_id=session_id, graph=graph)
```

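Finally, wrap the chain in RunnableWithMessageHistory. Before each call, it loads the session’s past messages into chat_history. After the call, it saves the new question and the model’s reply to the same history.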

```python
from langchain_core.runnables.history import RunnableWithMessageHistory

chat_with_message_history = RunnableWithMessageHistory(
    chat_chain,
    get_memory,
    input_messages_key="question",
    history_messages_key="chat_history",
)
```
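Under the hood, the pattern is simple. A factory returns the history for a given session ID, and the wrapper loads that history before calling the chain and appends the new exchange afterwards. The plain-Python sketch below mimics that behavior with a hypothetical in-memory store and a stub model, which shows why the same session ID must be reused across turns. It is an illustration, not RunnableWithMessageHistory's implementation. For tests with real LangChain components, `langchain_core.chat_history.InMemoryChatMessageHistory` provides an in-memory history.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Message = Tuple[str, str]  # (role, content)


class SessionStore:
    """Hypothetical in-memory store: one message list per session ID."""

    def __init__(self) -> None:
        self._sessions: Dict[str, List[Message]] = defaultdict(list)

    def get(self, session_id: str) -> List[Message]:
        return self._sessions[session_id]


def invoke_with_history(store: SessionStore, session_id: str, question: str,
                        model: Callable[[List[Message], str], str]) -> str:
    history = store.get(session_id)         # 1. load this session's past messages
    reply = model(list(history), question)  # 2. call the model with history + new input
    history.append(("human", question))     # 3. save the new exchange
    history.append(("ai", reply))
    return reply


def stub_model(history: List[Message], question: str) -> str:
    """Stand-in for the chat chain: reports how many earlier turns it received."""
    return f"turns seen: {len(history) // 2}"


store = SessionStore()
print(invoke_with_history(store, "session-a", "Who played in Top Gun?", stub_model))  # turns seen: 0
print(invoke_with_history(store, "session-a", "When was Meg Ryan born?", stub_model))  # turns seen: 1
print(invoke_with_history(store, "session-b", "Hello", stub_model))                    # turns seen: 0
```

Generating a fresh session ID on every turn behaves like "session-b" above: the model starts each turn with an empty history.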

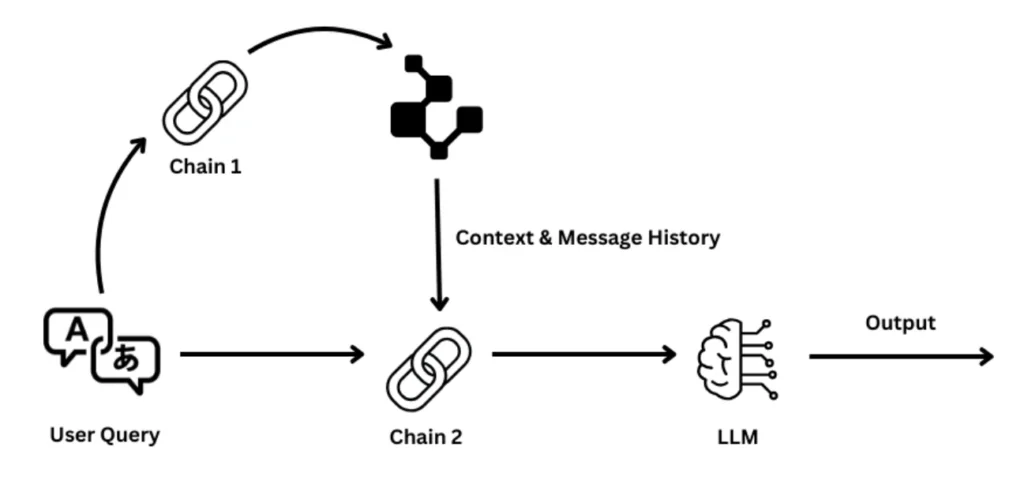

The diagram above gives a bird’s-eye view of how queries and data flow under the hood.

Try this out in a chat session using a simple while loop.

```python
if __name__ == "__main__":
    print("Starting Context-Aware Chatbot...")

    # Refresh the schema so Cypher generation sees the latest labels and properties
    graph.refresh_schema()

    # Use one session ID for the whole conversation so history carries across turns
    # (a new ID per turn would start every question with an empty history)
    SESSION_ID = str(uuid4())

    while True:
        try:
            question = input("\nYou: ").strip()
            if question.lower() in ["exit", "quit", "bye"]:
                print("Chatbot: Goodbye!")
                break

            # Retrieve grounding context from the knowledge graph
            context = context_chain.invoke({"query": question})["result"]

            # Generate the reply with this session's chat history injected
            response = chat_with_message_history.invoke(
                {"context": context, "question": question},
                config={"configurable": {"session_id": SESSION_ID}},
            )
            print(f"Chatbot: {response}")

        except Exception as e:
            print(f"Error: {e}")
```

The Results

```text
Starting Context-Aware Chatbot...

You: Robert De Niro played in which movies?

> Entering new FalkorDBQAChain chain...
Generated Cypher:
cypher
MATCH (p:Person)-[:STARRED_IN]->(m:Movie)
WHERE p.id = "Robert De Niro"
RETURN m.id
Full Context:
[['The Godfather: Part Ii']]

> Finished chain.
Chatbot: Robert De Niro, an acclaimed actor, has an extensive filmography that spans several decades. Some of his notable films include:

1. **"Taxi Driver" (1976)** - Directed by Martin Scorsese, this film features De Niro in the iconic role of Travis Bickle, a mentally unstable veteran who becomes a taxi driver in New York City.
2. **"Raging Bull" (1980)** - Another collaboration with Martin Scorsese, De Niro plays Jake LaMotta, a professional boxer whose self-destructive and obsessive rage, sexual jealousy, and animalistic appetite destroyed his relationship with his wife and family.
3. **"Goodfellas" (1990)** - In this crime film, also directed by Scorsese, De Niro portrays James Conway, a mobster involved in the Lufthansa heist.
4. **"Casino" (1995)** - De Niro plays Sam "Ace" Rothstein, a gambling expert who oversees the day-to-day operations of a casino in Las Vegas, which is based on the life of Frank Rosenthal.
5. **"Heat" (1995)** - Directed by Michael Mann, De Niro stars as Neil McCauley, a professional thief who is trying to control his personal and professional life while being chased by a determined detective, played by Al Pacino.
6. **"The Intern" (2015)** - A more recent role, De Niro plays Ben Whittaker, a 70-year-old widower who becomes a senior intern at an online fashion retailer.
7. **"Silver Linings Playbook" (2012)** - In this film directed by David O. Russell, De Niro plays Patrizio "Pat" Solitano, Sr., father to the protagonist, struggling to manage his own temper and his son's bipolar disorder.

This list is not exhaustive but highlights some of the key films in Robert De Niro's career, showcasing his versatility as an actor across various genres and roles.

You: Who played in Top Gun?

> Entering new FalkorDBQAChain chain...
Generated Cypher:
cypher
MATCH (p:Person)-[:STARRED_IN]->(m:Movie)
WHERE m.id = "Top Gun"
RETURN p.id
Full Context:
[['Tom Cruise'], ['Val Kilmer'], ['Anthony Edwards'], ['Meg Ryan']]

> Finished chain.
Chatbot: In the 1986 film "Top Gun," the primary cast members included Tom Cruise as Lieutenant Pete "Maverick" Mitchell, Val Kilmer as Lieutenant Tom "Iceman" Kazansky, Anthony Edwards as Lieutenant (junior grade) Nick "Goose" Bradshaw, and Meg Ryan as Carole Bradshaw.

You: exit
Chatbot: Goodbye!
```

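That’s it! In your application, keep one session ID per chat session so that each conversation retains its own history. Note that for the first question the graph returned only 'The Godfather: Part II', yet the chat model answered from its general knowledge with a different list of films. If answers should stay grounded in the knowledge graph, instruct the model in the system prompt to answer only from the supplied context. By following this pattern, you can build agentic applications that retain history and leverage context from a knowledge base.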

Getting Started with FalkorDB and LangChain

The integration of FalkorDB with LangChain makes building AI agents with memory easier than ever, leveraging knowledge graphs for context-aware applications. Whether you’re developing intelligent chatbots, AI-powered research assistants, or complex retrieval-augmented generation (RAG) workflows, this powerful combination enables deeper and more structured AI capabilities.

Now it’s your turn—start building with FalkorDB and LangChain! Set up FalkorDB, connect it to LangChain, and create AI solutions that retain context, understand relationships, and deliver more intelligent responses.
