Highlights

- Graph clustering algorithms partition complex networks into meaningful clusters, revealing hidden relationships. Choose methods based on graph size, structure, and analysis goals.

- Hierarchical clustering excels at interpretability, modularity-based methods at community detection, and label propagation at handling massive graphs. Consider trade-offs in complexity and scalability.

- Advanced techniques like spectral clustering and GNN-based methods offer powerful solutions for weighted graphs and dynamic network structures.

Today, knowledge graphs have emerged as a powerful framework for organizing and linking information. By modeling relationships between entities explicitly, they unlock deeper insights, enhance decision-making, and fuel applications like semantic search, recommendation systems, and AI-driven analytics.

At the heart of knowledge graph analysis lie graph clustering algorithms, essential tools for uncovering hidden patterns and relationships within complex networks. These algorithms break down large graphs into smaller, more meaningful clusters based on criteria like edge density or node similarity. Their applications span diverse domains, from social network analysis to bioinformatics, making it easier to interpret and extract value from vast datasets.

In this article, we’ll survey research on graph clustering algorithms, explore their underlying mechanisms, and highlight their real-world impact.

Graph Clustering Explained

Let’s first take a high-level look at what graph clustering means.

Graph clustering is the process of partitioning a graph into distinct clusters, where nodes within the same cluster exhibit higher similarity or stronger connectivity than those in different clusters. This approach operates on the principle that closely connected nodes often share common properties or roles.

For instance, in a social network graph, individuals (nodes) who interact frequently (edges) are likely to form clusters representing friend groups or communities. The key objective of graph clustering is to maximize intra-cluster edge density—ensuring that nodes within a cluster are tightly connected—while minimizing inter-cluster connections, reducing links between separate groups.
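To make the objective concrete, here is a minimal sketch that counts intra-cluster and inter-cluster edges for two candidate partitions of a toy social network. The node names, the edge list, and the `edge_split` helper are illustrative assumptions, not part of any library.

```python
def edge_split(edges, cluster_of):
    """Count edges inside clusters versus edges crossing between clusters."""
    intra = sum(1 for u, v in edges if cluster_of[u] == cluster_of[v])
    inter = len(edges) - intra
    return intra, inter

# Hypothetical toy network: two friend groups joined by one acquaintance link.
edges = [("ann", "bob"), ("bob", "cat"), ("ann", "cat"),
         ("dan", "eve"), ("eve", "fay"), ("dan", "fay"),
         ("cat", "dan")]

# A partition that follows the friend groups, and one that ignores them.
good = {"ann": 0, "bob": 0, "cat": 0, "dan": 1, "eve": 1, "fay": 1}
bad = {"ann": 0, "bob": 1, "cat": 0, "dan": 1, "eve": 0, "fay": 1}

print(edge_split(edges, good))  # (6, 1): dense inside, one link across
print(edge_split(edges, bad))   # (2, 5): most edges cross cluster boundaries
```

A good clustering keeps the first number high and the second low; the algorithms discussed in this article differ mainly in how they search for such a partition.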

Applications of Graph Clustering Algorithms

Because graph clustering reveals groups of closely related nodes, it finds applications in a number of domains. Here are some:

1. Social Networks

Graph clustering helps identify communities, such as friend groups or professional networks. This information is valuable for recommendation systems, targeted advertising, and understanding the spread of information or influence within a network.

Real-Life Uses:

- Detecting influencer communities for marketing campaigns on platforms like Instagram or Twitter.

- Identifying groups of users with shared interests for personalized content delivery on streaming services.

- Analyzing social media graphs to track the spread of misinformation and design intervention strategies.

2. Bioinformatics

In biological networks, graph clustering is used to identify protein complexes, gene clusters, or functional modules, enabling insights into cellular processes. By clustering genes or proteins based on interaction patterns, researchers can predict functions of uncharacterized genes, understand disease mechanisms, and identify potential therapeutic targets.

Real-Life Uses:

- Mapping gene expression data to discover biomarkers for specific diseases, such as cancer.

- Clustering protein interaction networks to predict protein functions or identify drug targets.

- Analyzing metabolic networks to study pathways affected in genetic disorders.

3. Recommendation Systems

E-commerce platforms use graph clustering to group users with similar preferences, enhancing personalized recommendations. By analyzing user-item interaction graphs, these systems can suggest products that similar users have liked, thereby increasing user engagement and sales.

Real-Life Uses:

- Grouping shoppers on Amazon to provide targeted product recommendations based on past purchases.

- Enhancing movie recommendations on Netflix by clustering users with similar viewing histories.

- Optimizing music playlist suggestions on Spotify by analyzing user preferences and listening patterns.

4. Transportation Networks

Clustering algorithms optimize traffic flow and route planning by identifying patterns in transportation graphs.

Real-Life Uses:

- Identifying traffic bottlenecks in urban areas to optimize city traffic management systems.

- Grouping train or bus stations with high passenger interchange rates to design better transit schedules.

- Analyzing delivery networks for logistics companies like FedEx to improve package routing efficiency.

Common Graph Clustering Methods

Graph clustering methods vary based on the structure and properties of the graph. The choice of the algorithm depends on factors such as graph size, edge density, node attributes, and connectivity patterns.

Below, we have listed some of the key clustering methods and the methodology they use:

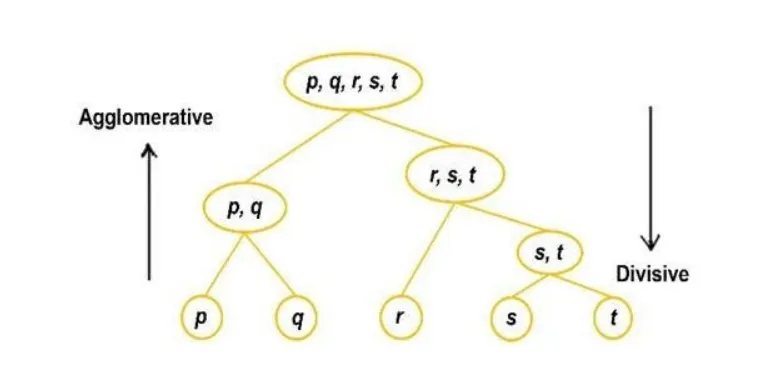

Hierarchical Clustering

Hierarchical clustering groups nodes into a tree-like structure based on their connectivity or similarity. Unlike flat clustering methods, it provides a nested hierarchy of clusters, making it useful for applications where multi-level clustering is required.

This method follows two main approaches:

Agglomerative:

- Each node starts as its own cluster.

- The algorithm iteratively merges the two most similar clusters based on a similarity measure (e.g., shortest path, edge weight, or connectivity).

- This merging continues until all nodes belong to a single cluster or a predefined number of clusters is reached.

- Example similarity measures include single linkage (minimum distance), complete linkage (maximum distance), and average linkage.
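The agglomerative steps above can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions: the graph is a dictionary mapping each node to a set of neighbors, the distance between two nodes is the Jaccard distance of their closed neighborhoods (one of many possible connectivity measures), and clusters are compared with average linkage. The function names are hypothetical, and the quadratic search is only practical for small graphs.

```python
from itertools import combinations

def jaccard_distance(adj, u, v):
    """1 - |N[u] & N[v]| / |N[u] | N[v]| over closed neighborhoods (assumed measure)."""
    nu, nv = adj[u] | {u}, adj[v] | {v}
    return 1 - len(nu & nv) / len(nu | nv)

def agglomerative(adj, n_clusters):
    """Average-linkage agglomerative clustering that stops at n_clusters."""
    # Each node starts as its own cluster.
    clusters = [frozenset([u]) for u in sorted(adj)]

    def avg_link(pair):
        a, b = pair
        total = sum(jaccard_distance(adj, u, v) for u in a for v in b)
        return total / (len(a) * len(b))

    while len(clusters) > n_clusters:
        # Merge the two clusters with the smallest average distance.
        a, b = min(combinations(clusters, 2), key=avg_link)
        clusters = [c for c in clusters if c not in (a, b)] + [a | b]
    return clusters

# Toy graph: two triangles (0-1-2 and 3-4-5) joined by the edge 2-3.
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3},
       3: {2, 4, 5}, 4: {3, 5}, 5: {3, 4}}
print(agglomerative(adj, 2))
```

Recording each merge instead of stopping at `n_clusters` would yield the full nested hierarchy (dendrogram) that makes this family of methods easy to interpret.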

Divisive:

- The entire graph is initially treated as a single cluster.

- It is recursively split into smaller clusters using a graph partitioning technique (e.g., min-cut algorithms).

- The process stops when each cluster meets a stopping criterion, such as a maximum number of clusters or minimum intra-cluster similarity.

Modern large-scale hierarchical clustering methods use:

- Sparse similarity matrices to reduce memory usage.

- Approximate nearest neighbor (ANN) techniques to avoid computing all pairwise similarities.

- Parallel and distributed implementations (e.g., Google’s trillion-edge clustering method) to handle massive datasets efficiently.

Scaling hierarchical clustering for trillion-edge graphs requires advanced methods such as graph sparsification, scalable linkage strategies, and distributed computing frameworks (Google Blog, 2024).

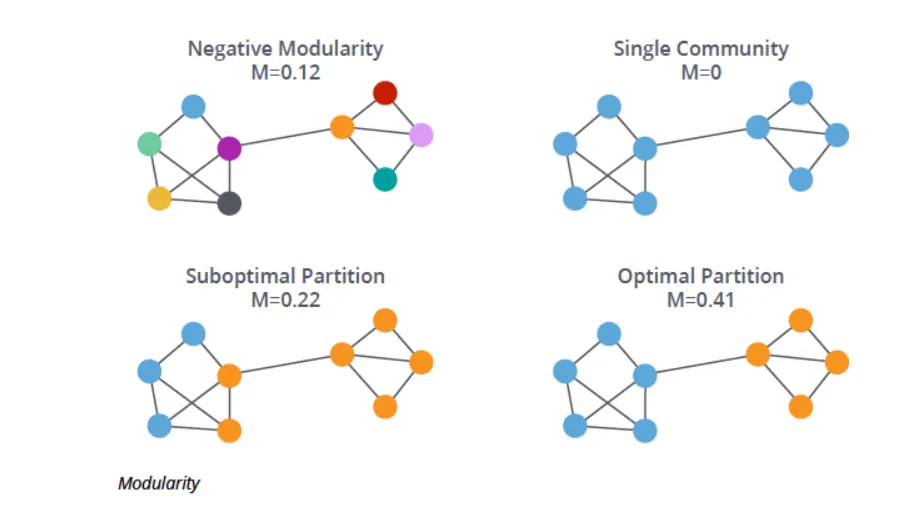

Modularity-Based Algorithms

Modularity-based algorithms aim to optimize modularity, a metric that evaluates the quality of a graph partition by comparing the density of edges inside communities with the density expected if edges were placed at random. The Girvan-Newman algorithm is a closely related approach: it identifies communities by iteratively removing the edges with the highest betweenness centrality, and modularity is commonly used to choose the best of the resulting partitions.
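As a rough illustration of the metric itself, the sketch below computes modularity for an undirected, unweighted graph without self-loops, using the common per-community form Q = sum over communities of (internal edges / m) - (total degree / 2m)^2, where m is the number of edges. The `modularity` helper and the toy graph are assumptions made for this example.

```python
def modularity(edges, community_of):
    """Modularity of a partition of an undirected, unweighted graph."""
    m = len(edges)
    internal = {}    # edges with both endpoints in the community
    degree_sum = {}  # sum of node degrees per community
    for u, v in edges:
        cu, cv = community_of[u], community_of[v]
        degree_sum[cu] = degree_sum.get(cu, 0) + 1
        degree_sum[cv] = degree_sum.get(cv, 0) + 1
        if cu == cv:
            internal[cu] = internal.get(cu, 0) + 1
    return sum(internal.get(c, 0) / m - (d / (2 * m)) ** 2
               for c, d in degree_sum.items())

# Toy graph: two triangles joined by a single bridge edge (2, 3).
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
two_groups = {0: "A", 1: "A", 2: "A", 3: "B", 4: "B", 5: "B"}
one_group = {n: "A" for n in range(6)}

print(round(modularity(edges, two_groups), 3))  # 0.357
print(round(modularity(edges, one_group), 3))   # 0.0
```

Putting every node in one community always scores zero, so a positive value indicates more internal edges than a random placement would produce. Algorithms such as Louvain search for the partition that pushes this score as high as possible.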

Here are some well-known modularity-based community detection algorithms:

- Louvain Method

As one of the most popular and efficient algorithms for community detection, it employs a hierarchical approach to optimize modularity in a greedy manner. Known for its speed and scalability, it is well-suited for analyzing large networks.

- Girvan-Newman Algorithm

This method detects communities by iteratively removing edges with the highest betweenness centrality. While effective at identifying community structures, it is computationally expensive and does not scale well to large networks.

- Newman’s Fast Algorithm

This method was proposed as a faster alternative to the Girvan-Newman algorithm and optimizes modularity directly. It uses a greedy agglomerative approach: starting from single-node communities, it repeatedly merges the pair of communities that yields the largest increase in modularity.

- Blondel et al. (Louvain-like) Algorithm

Blondel et al. are the authors of the Louvain method, so this entry belongs to the same family. Its characteristic step is aggregation: nodes are first grouped into communities, each community is collapsed into a single node, and modularity is then optimized again on the new, coarser graph.

- Fast Modularity Optimization (FM) Algorithm

A faster variant of modularity optimization, this algorithm leverages local moves and community merging to improve on the speed of the Louvain algorithm, making it more suitable for very large graphs.

A detailed exploration of modularity optimization for scalable signed graph clustering can be found in the work of Hausberger et al., 2022.

Label Propagation

Label Propagation (LP) is a simple, efficient, and scalable iterative algorithm primarily used for community detection in networks, though it can also be applied to tasks like node classification and clustering.

In this algorithm, each node initially holds a unique label, and during each iteration, nodes adopt the most frequent label from their neighbors. This process continues until the labels stabilize and no further changes occur. Label propagation is widely used for large-scale graphs due to its speed and ability to handle vast networks, making it a popular choice for both unsupervised and semi-supervised learning tasks.

Summarizing the Fundamental Concepts

Graph Representation

A graph G = (V, E) consists of nodes (V), edges (E), and optionally, weights (w) assigned to the edges.

Labels

Each node starts with a unique label.

Propagation

During each iteration, a node adopts the most frequent label among its neighbors, following the principle that nodes in the same cluster should share the same label.

Convergence

The process stops when labels no longer change or when a predefined number of iterations is reached.
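The four concepts above fit in a short sketch. To keep the result reproducible, this version makes two simplifying assumptions that differ from many production implementations: all nodes update at the same time (synchronously) rather than in random order, and ties are broken by keeping the current label when it is among the most frequent, otherwise taking the smallest label. Synchronous updates can oscillate on some graphs, which is why the iteration cap matters. The function name and graph are illustrative.

```python
from collections import Counter

def label_propagation(adj, max_iter=100):
    """Synchronous label propagation on an unweighted graph (dict of neighbor sets)."""
    labels = {u: u for u in adj}  # every node starts with a unique label
    for _ in range(max_iter):
        new_labels = {}
        for u in adj:
            if not adj[u]:  # isolated nodes keep their own label
                new_labels[u] = labels[u]
                continue
            counts = Counter(labels[v] for v in adj[u])
            best = max(counts.values())
            candidates = {lab for lab, c in counts.items() if c == best}
            # Tie-break (assumption): keep the current label if tied, else the smallest.
            new_labels[u] = labels[u] if labels[u] in candidates else min(candidates)
        if new_labels == labels:  # convergence: no label changed
            break
        labels = new_labels
    return labels

# Toy graph: two triangles (0-1-2 and 3-4-5) joined by the edge 2-3.
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3},
       3: {2, 4, 5}, 4: {3, 5}, 5: {3, 4}}
print(label_propagation(adj))
# {0: 0, 1: 0, 2: 0, 3: 3, 4: 3, 5: 3}
```

Each pass touches every edge once, which is why the method scales to very large graphs. The flip side is that the outcome depends on update order and tie-breaking, so different runs of a randomized implementation may return different communities.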

Advanced Graph Clustering Methods

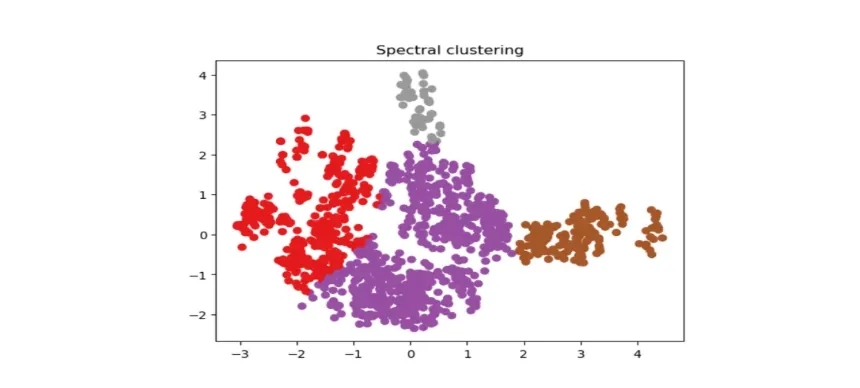

Spectral Clustering

Spectral clustering is a technique used to group similar entities (in this case, nodes in a graph) into clusters or groups. Instead of relying solely on direct connections between nodes, it utilizes the Laplacian matrix, which summarizes the graph and mathematically represents how nodes are connected.

Here’s how it works in simple terms:

- Spectral clustering converts a graph into its Laplacian matrix and computes that matrix’s eigenvalues and eigenvectors, which capture the structure of the graph.

- The eigenvectors that belong to the smallest eigenvalues give each node a position in a low-dimensional space that reflects the overall “shape” of the graph.

- Nodes that land close together in that space are grouped into clusters, typically with k-means.

It’s especially helpful for complex graphs where the structure isn’t straightforward. For example, in a sparse graph (where many nodes aren’t directly connected), spectral clustering can still identify meaningful groups by considering indirect relationships between nodes.

In short, spectral clustering uses the Laplacian matrix and its eigenvalues and eigenvectors to group similar nodes, even if they aren’t directly connected. This makes it a powerful tool for understanding complex networks.
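For intuition, the simplest two-cluster case can be sketched without any numerical library. The code below approximates the eigenvector of the second-smallest Laplacian eigenvalue (often called the Fiedler vector) with shifted power iteration and splits nodes by its sign. It assumes a connected, unweighted graph with sortable node ids; the function names are hypothetical, and a real implementation would use a sparse eigensolver and k-means over several eigenvectors.

```python
def fiedler_vector(adj, iters=500):
    """Approximate the eigenvector of the second-smallest Laplacian eigenvalue."""
    nodes = sorted(adj)
    n = len(nodes)
    shift = 2 * max(len(adj[u]) for u in nodes)  # upper bound on Laplacian eigenvalues
    # Start from a vector that is orthogonal to the constant vector.
    x = {u: i - (n - 1) / 2 for i, u in enumerate(nodes)}
    for _ in range(iters):
        # y = (shift * I - L) x, where (L x)_u = deg(u) * x_u - sum of neighbor values
        y = {u: shift * x[u] - (len(adj[u]) * x[u] - sum(x[v] for v in adj[u]))
             for u in nodes}
        mean = sum(y.values()) / n
        y = {u: val - mean for u, val in y.items()}  # project out the constant eigenvector
        norm = sum(val * val for val in y.values()) ** 0.5
        x = {u: val / norm for u, val in y.items()}
    return x

def spectral_bisect(adj):
    """Split nodes into two clusters by the sign of the Fiedler vector."""
    x = fiedler_vector(adj)
    negative = {u for u, val in x.items() if val < 0}
    return negative, set(adj) - negative

# Toy graph: two triangles (0-1-2 and 3-4-5) joined by the edge 2-3.
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3},
       3: {2, 4, 5}, 4: {3, 5}, 5: {3, 4}}
print(spectral_bisect(adj))
```

On this graph the sign split separates the two triangles. For more than two clusters, the usual approach is to take several of the smallest eigenvectors as node coordinates and run k-means on them, which is where the need for a predefined number of clusters comes from.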

Edge Betweenness Clustering

This method is a way of finding communities or groups in a network (like people in a social network or devices in a communication network). It works by looking at the edges (connections between nodes) and focusing on those that are the most important in terms of “bridging” different parts of the graph. This importance is measured using betweenness centrality, which counts how many shortest paths between pairs of nodes pass through a particular edge.

Here’s how the method works step by step:

- Betweenness centrality is computed for every edge to identify the edges that are “critical” for connecting different parts of the graph.

- The edge with the highest betweenness is removed, and betweenness is recalculated for the remaining edges.

- By repeating this process, the graph gradually breaks into smaller clusters or communities where the nodes within each cluster are more connected to each other than to nodes in other clusters.
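The steps above can be sketched in plain Python as follows. This is a minimal illustration for small, unweighted, undirected graphs stored as a dictionary of neighbor sets; the helper names are assumptions, and the betweenness routine follows the well-known Brandes accumulation scheme rather than any specific library's implementation.

```python
from collections import deque

def edge_betweenness(adj):
    """Edge betweenness via BFS from every node (each pair is counted twice)."""
    score = {}
    for s in adj:
        dist, sigma, preds, order = {s: 0}, {s: 1}, {s: []}, []
        queue = deque([s])
        while queue:
            u = queue.popleft()
            order.append(u)
            for v in adj[u]:
                if v not in dist:
                    dist[v], sigma[v], preds[v] = dist[u] + 1, 0, []
                    queue.append(v)
                if dist[v] == dist[u] + 1:  # u lies on a shortest path to v
                    sigma[v] += sigma[u]
                    preds[v].append(u)
        delta = {u: 0.0 for u in order}
        for w in reversed(order):  # farthest nodes first
            for u in preds[w]:
                credit = sigma[u] / sigma[w] * (1 + delta[w])
                edge = frozenset((u, w))
                score[edge] = score.get(edge, 0.0) + credit
                delta[u] += credit
    return score

def components(adj):
    """Connected components as a list of node sets."""
    seen, parts = set(), []
    for s in adj:
        if s in seen:
            continue
        part, stack = set(), [s]
        while stack:
            u = stack.pop()
            if u not in part:
                part.add(u)
                stack.extend(adj[u] - part)
        seen |= part
        parts.append(part)
    return parts

def split_by_betweenness(adj, n_clusters=2):
    """Remove the highest-betweenness edge until n_clusters components exist."""
    adj = {u: set(vs) for u, vs in adj.items()}  # work on a copy
    while len(components(adj)) < n_clusters and any(adj.values()):
        scores = edge_betweenness(adj)           # recomputed after every removal
        u, v = max(scores, key=scores.get)
        adj[u].discard(v)
        adj[v].discard(u)
    return components(adj)

# Toy graph: two triangles (0-1-2 and 3-4-5) joined by the edge 2-3.
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3},
       3: {2, 4, 5}, 4: {3, 5}, 5: {3, 4}}
print(split_by_betweenness(adj))
```

Here the bridge edge 2-3 carries every shortest path between the two triangles, so it is removed first. Recomputing betweenness after each removal is what makes the method expensive on large graphs.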

This approach is great for finding community structures in networks, where we want to discover groups of nodes that are more tightly connected internally but less so with other groups. It’s especially useful when you want to detect structural communities where nodes are interconnected in complex ways.

A more detailed explanation can be found in the work of UIowa, 2023.

Graph Neural Network-Based Clustering

Graph Neural Networks (GNNs) are primarily used for node classification, edge prediction, and graph classification, but they can also be adapted for unsupervised clustering. While GNNs do not directly perform clustering, their node embedding techniques provide representations that can be clustered using traditional methods like k-means or advanced techniques like contrastive clustering.

Several deep graph clustering approaches leverage GNNs:

- Graph Autoencoders (GAE, VGAE) – These use GNN encoders to learn latent node representations, which can then be clustered using spectral or k-means clustering.

- Contrastive Graph Clustering – Techniques like AGE, MVGRL, and HeCo refine node embeddings using contrastive learning, making clusters more separable.

- Graph Pooling for Clustering – Methods like DiffPool and MinCutPool aggregate node features to create hierarchical cluster structures within the graph.

Key advantages of GNN-based clustering:

- Encodes complex graph structures into meaningful node embeddings.

- Works well for large, dynamic graphs, where relationships evolve over time.

- Can be combined with clustering methods to identify communities or functional modules in networks.
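The second half of that pipeline, clustering the learned embeddings, can be sketched with a plain k-means. The GNN encoder itself is not shown: the two-dimensional `embeddings` below are made-up stand-ins for the output of a trained model such as a graph autoencoder, and the `kmeans` helper with its deterministic farthest-first initialization is an assumption chosen to keep the example reproducible.

```python
def kmeans(points, k, iters=20):
    """Plain k-means with farthest-first initialization (deterministic)."""
    def sq_dist(p, q):
        return sum((a - b) ** 2 for a, b in zip(p, q))

    # Pick the first point, then repeatedly the point farthest from chosen centroids.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centroids)))

    assignment = []
    for _ in range(iters):
        # Assign each point to its nearest centroid.
        assignment = [min(range(k), key=lambda j: sq_dist(p, centroids[j]))
                      for p in points]
        # Move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:
                centroids[j] = tuple(sum(col) / len(members) for col in zip(*members))
    return assignment

# Hypothetical node embeddings, standing in for the output of a GNN encoder.
embeddings = {
    "a": (0.9, 0.1), "b": (1.0, 0.2), "c": (0.8, 0.0),
    "x": (0.1, 0.9), "y": (0.0, 1.0), "z": (0.2, 0.8),
}
nodes = list(embeddings)
assignment = kmeans([embeddings[n] for n in nodes], k=2)
print(dict(zip(nodes, assignment)))
# {'a': 0, 'b': 0, 'c': 0, 'x': 1, 'y': 1, 'z': 1}
```

The quality of the clusters depends almost entirely on the embeddings, which is why approaches such as contrastive training focus on making them more separable before this step runs.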

Choosing the Right Graph Clustering Algorithm

Domain-Specific Factors

Selecting an algorithm often depends on the specific domain and its requirements. For example, in bioinformatics, modularity-based algorithms may be preferable for detecting functional modules.

- Hierarchical Clustering: Suitable for smaller datasets or applications where interpretability is critical, such as biological datasets.

- Modularity-Based Algorithms: Effective for community detection in social networks and bioinformatics.

- Label Propagation: Scales well for large graphs (millions to billions of nodes) like web graphs, where nodes are web pages and edges are hyperlinks. It is used in spam detection and topic classification.

- Spectral Clustering: Captures complex cluster structures in sparse or weighted graphs, such as transportation networks, where traffic flows link stations.

- Edge Betweenness Clustering: Detects well-separated communities in smaller networks (up to tens of thousands of nodes), like academic or corporate networks.

A survey on graph clustering highlights the importance of domain-specific criteria in algorithm selection (UIowa, 2023).

Technical Factors

Technical considerations like computational efficiency, scalability, and compatibility with hardware resources also influence the choice. For instance, hierarchical clustering can be computationally expensive for large graphs, making label propagation a better alternative for scalability.

Additionally, the complexity of the graph structure matters. Sparse graphs might benefit from spectral clustering, while dense graphs could be better handled by modularity-based algorithms. Another critical aspect is whether the algorithm should work in an unsupervised manner or leverage pre-existing labels (semi-supervised learning).

Factors to consider:

- Computational Efficiency: Spectral and modularity-based methods can be computationally intensive for very large graphs. Label propagation offers better performance in these cases.

- Memory Requirements: Node embedding methods require significant memory for vector storage but provide richer results.

- Complexity of Graphs: Spectral clustering excels in handling sparse or weighted graphs, while modularity-based methods are more suited for undirected graphs.

The main trade-offs of each algorithm are:

Hierarchical Clustering:

- Struggles with scalability for large datasets due to high computational overhead.

- Offers high interpretability, making it suitable for small, structured datasets.

- Requires significant processing time for densely connected graphs.

Modularity-Based Algorithms:

- Computationally intensive for larger graphs, especially with high edge density.

- Works well for undirected graphs with clear community structures.

- Less suitable for weighted or directed graphs without modifications.

Label Propagation:

- Scales efficiently for very large datasets.

- Simple to implement and computationally lightweight.

- Lacks fine-grained control over cluster size and boundaries.

Spectral Clustering:

- Computationally expensive due to eigenvalue decomposition.

- Effective for sparse and weighted graphs with complex structures.

- Requires a predefined number of clusters, limiting adaptability for exploratory tasks.

Edge Betweenness Clustering:

- Inefficient for large-scale graphs due to the need for recalculating edge centrality.

- Excels in detecting well-separated, distinct communities.

- Not ideal for dense or highly interconnected graphs.

Practical Considerations

Graph Size

Algorithms like label propagation are efficient for very large graphs.

Result Interpretability

Modularity-based approaches often yield clusters that are easy to interpret.

Data Characteristics

For weighted or directed graphs, specialized algorithms may perform better.

Scalability Needs

For real-time applications, computational efficiency becomes paramount.

Ease of Implementation

Label propagation is straightforward to implement and interpret, making it practical for quick clustering.

Interpretability

Hierarchical clustering provides a clear structure that is easy to explain, while modularity-based approaches also offer good interpretability.

Specialized Use Cases

Spectral clustering is advantageous for sparse or weighted graphs with complex structure, whereas GNNs are versatile for applications requiring dynamic adaptability.

Summary

Graph clustering algorithms play a crucial role in uncovering intricate relationships within interconnected systems. Each of the clustering methods covered here offers unique strengths, catering to diverse requirements across domains, technical constraints, and practical applications. By carefully evaluating domain-specific factors, technical requirements, and practical considerations, practitioners can select the most suitable algorithm to address their specific challenges.

In this article, we have explored how to effectively leverage these clustering methods, highlighting the strengths and limitations of each approach in different scenarios. This knowledge enables better decision-making when analyzing complex networks, driving innovation and actionable insights in fields such as bioinformatics, social networks, recommendation systems, and more.

Future Scope

As graph data continues to grow in complexity and scale, innovations such as Graph Neural Networks (GNNs) are expected to lead the way in addressing advanced clustering challenges. Researchers and developers are encouraged to explore the diverse range of graph clustering techniques to gain deeper insights and drive impactful solutions across industries.

For a comprehensive understanding of current methods and future trends, the survey “A Survey of Deep Graph Clustering” (Liu et al., 2022) serves as an excellent resource.
