Highlights

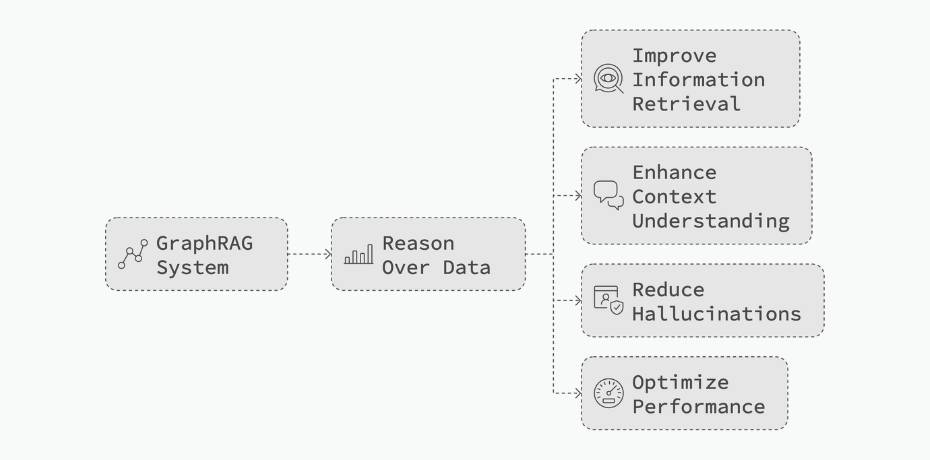

- GraphRAG combines LLMs with graph databases to enhance context and reduce hallucinations, enabling more accurate responses by leveraging structured knowledge representation alongside semantic search.

- FalkorDB pairs ultra-low-latency graph queries with vector embedding-based semantic search, making it well suited to GraphRAG workflows that need to explore complex data relationships.

- LangGraph's state management and LangChain's integration capabilities enable sophisticated agentic workflows that dynamically route queries between vector search and graph exploration.

The combination of large language models (LLMs) and low-latency graph databases has grown massively in popularity, improving data-driven workflows. By combining the reasoning capabilities of LLMs with the structured knowledge representation of graphs, you can build robust, hallucination-resistant AI systems.

GraphRAG, or Graph-driven Retrieval-Augmented Generation, is one such approach, where knowledge stored in graph databases enhances LLM context, leading to more accurate responses. Beyond retrieval, GraphRAG enables the development of sophisticated AI agents capable of complex reasoning and nuanced output generation.

In this guide, you’ll learn how to implement a GraphRAG workflow using LangChain and LangGraph, with FalkorDB as the graph database. You’ll use techniques such as dynamic prompting and query decomposition, and route queries so that results from vector search and the knowledge graph can be combined. We’ll also look at how a GraphState object, which LangGraph passes from step to step, maintains context across the workflow and enables enriched, context-aware responses.

Query Types

The common approach to building Retrieval-Augmented Generation (RAG) uses vector embedding-based semantic search. This works well when you are looking for data that is semantically similar.

For example, you can use it to retrieve relevant customer support articles when a user asks, “How do I reset my password?”

However, semantic search on its own falls short when your query involves exploring relationships within the data.

For instance, let’s say you’re building a chatbot for a customer support team. If a customer asks, “Why is my internet speed so slow?”, a traditional RAG system might retrieve documents containing keywords like “slow internet,” “speed issues,” and “network congestion.”

However, it might fail to:

- Identify the customer’s specific location: Check for local outages or service disruptions.

- Determine the type of internet connection: Pinpoint potential issues related to fiber, cable, or DSL connections.

- Trace the service path: Identify potential points of failure along the network route from the customer’s premises to the internet backbone.

These types of relationships and dependencies are crucial for accurate diagnosis and resolution of the customer’s issue. A graph database, with its ability to represent and query complex relationships, can effectively address these limitations.

So in many scenarios, you may be better off combining semantic search and graph queries in a hybrid approach.

Graph Retrieval

Graph queries enable you to search for specific information by tracing relationships between data points. Think of it as uncovering connections—such as identifying who wrote a book or what topics a paper covers.

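For example, if your data contains information about directors, actors, and movies, your queries might look like this:

```cypher
// Titles of all movies directed by Christopher Nolan
MATCH (d:Director {name: 'Christopher Nolan'})-[:DIRECTED]->(m:Movie) RETURN m.title

// Every director, relationship, and movie (edges point from Director to Movie)
MATCH (d:Director)-[r]->(m:Movie) RETURN d, r, m
```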

With FalkorDB, you can quickly and easily build knowledge graphs and run graph queries, allowing you to explore relationships in your data with minimal effort.

Vector Retrieval

FalkorDB also supports vector indexes and semantic search. This lets you search for contextually similar data, such as documents or records that match the intent of a query. Vector indexes support approximate nearest neighbor (ANN) searches using metrics such as Euclidean distance and cosine similarity.
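To make the two metrics concrete, here is a brute-force nearest-neighbour sketch in plain Python. It performs exact search by scoring every vector, whereas a vector index answers the same question approximately and much faster at scale. The toy 3-dimensional vectors and the helper names are made up for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between vectors a and b (0.0 means identical)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest_neighbors(query, vectors, k=2, metric="cosine"):
    """Exact k-nearest-neighbour search over a dict of name -> vector."""
    if metric == "cosine":
        # Higher similarity is better, so sort in descending order.
        return sorted(vectors, key=lambda n: cosine_similarity(query, vectors[n]), reverse=True)[:k]
    if metric == "euclidean":
        # Smaller distance is better, so sort in ascending order.
        return sorted(vectors, key=lambda n: euclidean_distance(query, vectors[n]))[:k]
    raise ValueError(f"unknown metric: {metric}")

# Toy "embeddings"; real models such as all-MiniLM-L6-v2 produce far more dimensions.
movies = {
    "Interstellar": [0.9, 0.1, 0.0],
    "Gravity": [0.8, 0.2, 0.1],
    "The Godfather": [0.0, 0.1, 0.9],
}

print(nearest_neighbors([1.0, 0.0, 0.0], movies))                      # ['Interstellar', 'Gravity']
print(nearest_neighbors([1.0, 0.0, 0.0], movies, metric="euclidean"))  # ['Interstellar', 'Gravity']
```

Both metrics agree here; they can disagree when vectors differ a lot in magnitude, because cosine similarity ignores length while Euclidean distance does not.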

Hybrid Query

A hybrid query is the best of both worlds—it combines graph queries along with semantic searches. This method helps you find precise relationships while also looking for broader, meaningful connections.

For example:

- Find all directors who direct crime movies.

- Find all actors who have acted in movies related to space.

In both queries, you need to combine graph traversal with semantic search to obtain an accurate answer. Since FalkorDB supports both graph queries and vector embedding-based semantic search, you can build applications that use both. Here’s how you can combine them:

- Store relationships and metadata in a graph structure.

- Store embeddings and create vector indexes.

- Use a hybrid query system where the graph traversal limits the dataset and the vector query ranks or filters the results further.
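The steps above can be sketched in plain Python, with toy in-memory data standing in for FalkorDB: a dictionary plays the role of the DIRECTED edges, and hand-made 3-dimensional vectors stand in for embeddings. All names and vectors here are hypothetical; in FalkorDB the same two steps would be a graph traversal followed by a vector-index query.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical graph edges: director -> movies they directed.
directed = {
    "Christopher Nolan": ["Interstellar", "Memento", "Inception"],
    "Alfonso Cuaron": ["Gravity"],
}

# Hypothetical per-movie embeddings (first dimension loosely means "space").
embeddings = {
    "Interstellar": [0.9, 0.1, 0.0],
    "Memento": [0.0, 0.2, 0.9],
    "Inception": [0.4, 0.8, 0.2],
    "Gravity": [0.8, 0.2, 0.1],
}

def hybrid_search(director, query_vector, k=2):
    # Step 1, graph traversal: limit candidates to movies linked to this director.
    candidates = directed.get(director, [])
    # Step 2, vector ranking: order the remaining candidates by similarity to the query.
    ranked = sorted(
        candidates,
        key=lambda m: cosine_similarity(query_vector, embeddings[m]),
        reverse=True,
    )
    return ranked[:k]

space_query = [1.0, 0.0, 0.0]  # stand-in for the embedding of "movies about space"
print(hybrid_search("Christopher Nolan", space_query))  # ['Interstellar', 'Inception']
```

Note that Gravity is very similar to the query but never appears: the graph step has already excluded it, which is exactly the "precise relationships plus meaningful similarity" behaviour described above.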

Let’s now take a quick look at LangGraph and LangChain, the two other components of our workflow.

Understanding LangGraph and LangChain

In this advanced agentic GraphRAG guide, we’ll use LangChain to interact with LLMs, datasets, and FalkorDB. To orchestrate the underlying logic flow, we’ll leverage LangGraph. Let’s explore how these two frameworks work.

LangChain

LangChain is an open-source framework designed to streamline the development of LLM-powered applications. It provides a suite of tools and integrations, allowing you to build context-aware, reasoning-driven applications that interact with various data sources and APIs.

One of LangChain’s biggest advantages is its seamless integration with a wide range of data sources. This makes it easy to combine LLMs with existing datasets, enabling tasks such as retrieving relevant documents, analyzing structured data, and enriching queries with external knowledge.

LangGraph

LangGraph is an open-source framework for building stateful, multi-actor agentic applications using LLMs. It enables you to design complex agent and multi-agent workflows by representing application logic as directed graphs—where nodes define tasks or functions, and edges determine the flow of information.

This graph-based approach gives you fine-grained control over both execution flow and state management. Additionally, LangGraph’s central persistence layer supports features such as memory retention, human-in-the-loop interactions, and powerful streaming capabilities.

GraphRAG Workflows with LangGraph and LangChain

Let’s now explore our application design. In this workflow, LLMs determine the appropriate processing path for a user query—directing it first to vector search and then to a knowledge graph query if a hybrid query is required, or directly to graph queries if the query involves only knowledge graph exploration.

LangChain handles interactions with the dataset, LLM, and FalkorDB. Meanwhile, LangGraph models the logic flow as a graph, where nodes represent individual tasks, functions, or operations, and edges define the flow of information between them.

The advantage of this approach is its flexibility—you can incrementally increase complexity based on the query type by adding more nodes and edges.

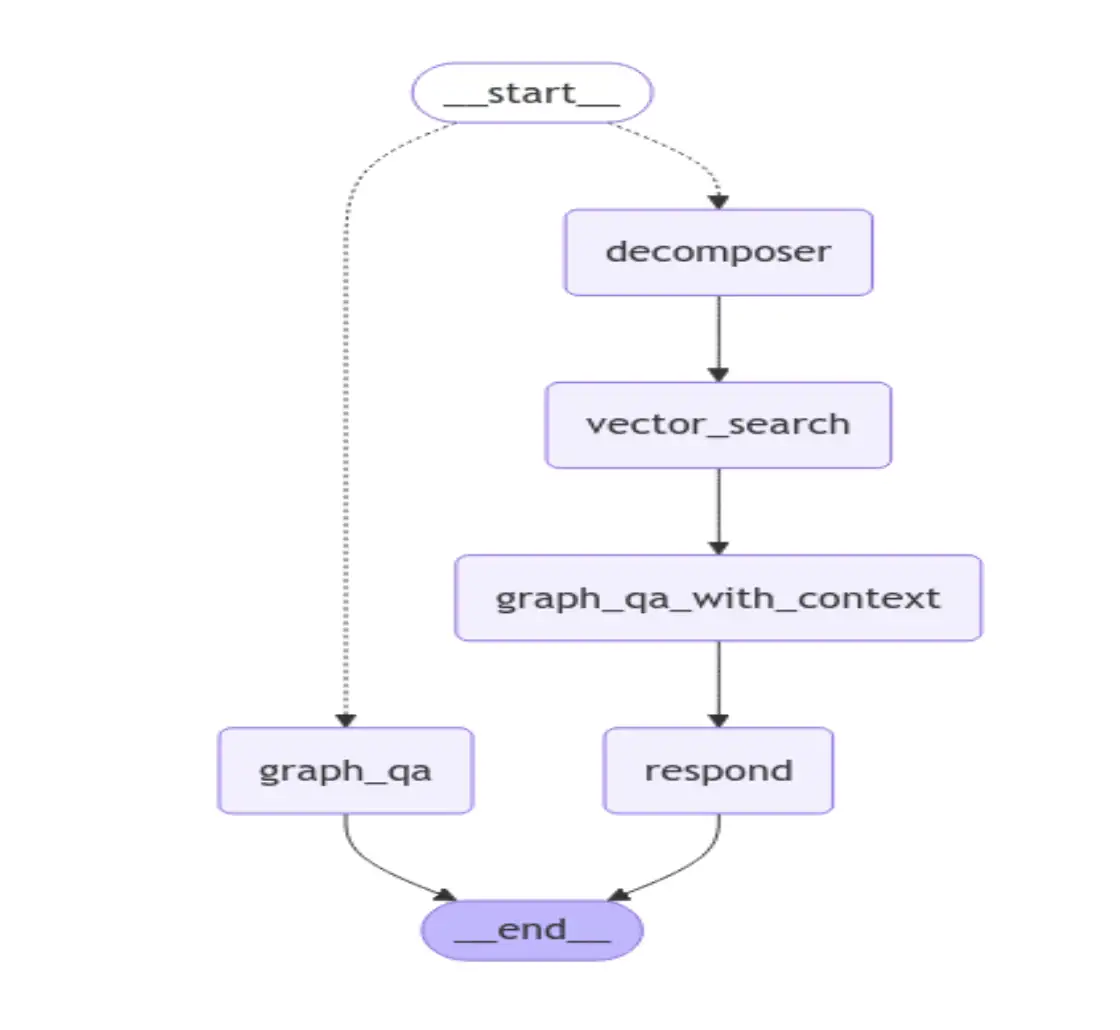

Here’s what our workflow looks like:

Starting Point

route_question: This is the initial step, which routes the user's question to either the decomposer or the graph_qa node. If the question calls for similarity search, it goes to decomposer (and from there to vector search); otherwise, it routes directly to graph_qa for graph-based question answering.

Nodes

- decomposer:

- The node decomposes the input question into subqueries for further processing. This step is designed for cases where the question needs to be analyzed or split before retrieving relevant information.

- vector_search:

- After decomposer, the next step is vector_search. Here, a vector similarity search is performed to retrieve the relevant documents based on a similarity measure.

- graph_qa_with_context:

- In this node, the movie IDs returned by vector search are used to query the graph database for further details, so that the final response is grounded in the knowledge graph.

- respond:

- This final node generates the natural language response to the user’s question by using an LLM. It combines the user’s question with the relevant context and produces a detailed explanation or answer based on the provided context.

- graph_qa:

- This node is used when the question is routed directly to graph_qa. It uses a Graph QA Chain to query the FalkorDB graph database. It answers the user’s question by leveraging the structure and context of the graph database.

Implementing GraphRAG Workflow

We need to first install the necessary components.

Setting Up FalkorDB

You can install FalkorDB using Docker, or sign up for FalkorDB Cloud for a managed instance. Here’s how you can run it using Docker:

```shell
docker run -p 6379:6379 -p 3000:3000 -it --rm -v ./data:/data falkordb/falkordb:edge
```

That will launch the FalkorDB server. You can also visit http://localhost:3000 to view the FalkorDB Browser.

Installing Libraries

Let’s now launch the Jupyter Notebook and install the necessary libraries. First, create a Python virtual environment and then install and launch Jupyter Lab:

```shell
$ pip install jupyterlab
$ jupyter lab
```

This opens JupyterLab in your browser. We’ll use Groq to access the LLMs, so you’ll also need a Groq API key.

Then install the following libraries from a notebook cell:

```
!pip install falkordb groq pandas sentence-transformers langchain langchain-community langchain-core langchain-experimental langchain-groq langgraph
```

Loading Data

We will use the IMDB top 1000 dataset from Kaggle. Download the dataset and save it as imdb_top_1000.csv in your notebook’s working directory.

Here’s how you can connect to FalkorDB and load the dataset into a pandas DataFrame named data:

```python
import logging
import os
import sys

import pandas as pd
from falkordb import FalkorDB

logging.basicConfig(stream=sys.stdout, level=logging.INFO)

# Connect to FalkorDB
db = FalkorDB(host='localhost', port=6379)

# Select the 'imdb' graph (it is created on first write)
graph = db.select_graph('imdb')

# Load the Kaggle CSV into a DataFrame
filename = os.path.join(os.getcwd(), 'imdb_top_1000.csv')
data = pd.read_csv(filename)
```

Next, we can build the graph programmatically from the loaded data:

```python
import re

import pandas as pd

def clean_column(column_data):
    """Keep only letters, digits, and whitespace; map missing values to 'NA'.

    Stripping quotes and punctuation keeps the values safe to interpolate
    into the single-quoted Cypher strings built below.
    """
    return column_data.apply(lambda x: re.sub(r"[^a-zA-Z0-9\s]", "", x) if pd.notnull(x) else 'NA')

# Apply the cleanup function to all text columns used in the graph
text_columns = ['Series_Title', 'Director', 'Certificate', 'Runtime', 'Genre', 'Overview']
star_columns = ['Star1', 'Star2', 'Star3', 'Star4']
for column in text_columns + star_columns:
    data[column] = clean_column(data[column])

# Numeric columns: unparseable or missing values become -1 (a bare NA would be invalid Cypher)
data['IMDB_Rating'] = pd.to_numeric(data['IMDB_Rating'], errors='coerce').fillna(-1)
data['Released_Year'] = pd.to_numeric(data['Released_Year'], errors='coerce').fillna(-1)

for index, row in data.iterrows():
    movie_title = row['Series_Title']
    director_name = row['Director']
    certificate = row['Certificate']
    runtime = row['Runtime']
    genre = row['Genre']
    imdb_rating = row['IMDB_Rating']
    description = row['Overview']

    # Skip the row if the year is invalid
    if row['Released_Year'] == -1:
        print(f"Skipping '{movie_title}' due to invalid year.")
        continue
    released_year = int(row['Released_Year'])

    # Create or merge the Movie node; movie_id (the DataFrame index) is read back later as m.movie_id
    movie_node = (
        f"MERGE (m:Movie {{"
        f"movie_id: {index}, "
        f"title: '{movie_title}', "
        f"year: {released_year}, "
        f"certificate: '{certificate}', "
        f"runtime: '{runtime}', "
        f"genre: '{genre}', "
        f"imdb_rating: {imdb_rating}, "
        f"description: '{description}'"
        f"}})"
    )

    # Create or merge the Director node and its DIRECTED edge
    director_node = f"MERGE (d:Director {{name: '{director_name}'}})"
    directed_by_relation = "MERGE (d)-[:DIRECTED]->(m)"

    # Create or merge the Star nodes and their STARRED_IN edges
    star_nodes = ""
    starred_in_relations = ""
    for s, star in enumerate(star_columns, start=1):
        star_name = row[star]
        if star_name != 'NA':
            star_nodes += f"MERGE (s{s}:Star {{name: '{star_name}'}})\n"
            starred_in_relations += f"MERGE (s{s})-[:STARRED_IN]->(m)\n"

    cypher_query = (
        f"{movie_node} {director_node} {directed_by_relation}"
        f" {star_nodes.strip()}\n{starred_in_relations.strip()}"
    )

    # Print the Cypher query, then run it
    print(cypher_query)
    graph.query(cypher_query)

print("Added graph documents to FalkorDB")
```

Each Movie node stores the title, year, certificate, runtime, genre, rating, and description as properties. Director and Star nodes are merged separately and connected to their movies with DIRECTED and STARRED_IN edges.

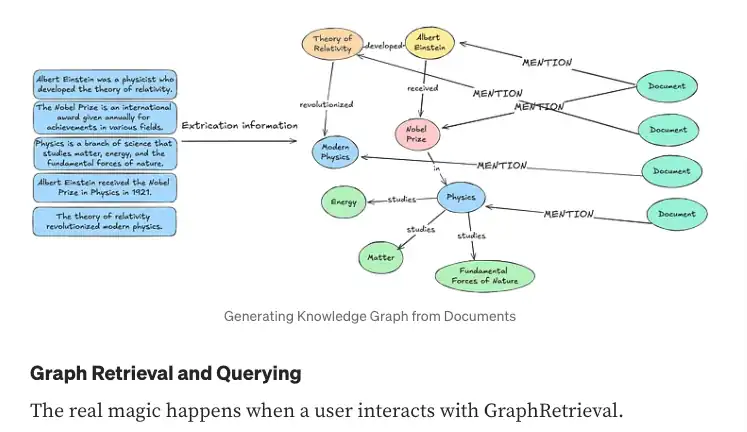
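The import above interpolates values straight into Cypher strings, which is why clean_column strips every quote and punctuation mark first (a title such as "Schindler's List" is stored as "Schindlers List"). One alternative, sketched below under stated assumptions, is to send values as query parameters: FalkorDB's Python client accepts a params dictionary as the second argument of graph.query(). The helper build_movie_upsert is hypothetical, covers only a subset of the columns, assumes the year has already been validated, and MERGEs on movie_id alone before SETting the other properties, so re-running the import updates nodes instead of creating near-duplicates.

```python
import math

def _is_present(value):
    """True for non-empty strings; False for None, NaN, and ''."""
    return isinstance(value, str) and value.strip() != ""

def _to_rating(value):
    """Convert a rating to float, or None (sent to Cypher as null) if missing."""
    try:
        rating = float(value)
    except (TypeError, ValueError):
        return None
    return None if math.isnan(rating) else rating

def build_movie_upsert(row_id, row):
    """Return a (query, params) pair for one CSV row (dict or pandas Series)."""
    params = {
        "movie_id": row_id,
        "title": row["Series_Title"],
        "year": int(row["Released_Year"]),
        "genre": row["Genre"],
        "imdb_rating": _to_rating(row.get("IMDB_Rating")),
        "description": row["Overview"],
        "director": row["Director"],
        "stars": [row.get(c) for c in ("Star1", "Star2", "Star3", "Star4") if _is_present(row.get(c))],
    }
    query = (
        "MERGE (m:Movie {movie_id: $movie_id}) "
        "SET m.title = $title, m.year = $year, m.genre = $genre, "
        "m.imdb_rating = $imdb_rating, m.description = $description "
        "WITH m "
        "MERGE (d:Director {name: $director}) "
        "MERGE (d)-[:DIRECTED]->(m) "
        "WITH m "
        "UNWIND $stars AS star_name "
        "MERGE (s:Star {name: star_name}) "
        "MERGE (s)-[:STARRED_IN]->(m)"
    )
    return query, params
```

With the raw (uncleaned) DataFrame, usage would look like `for index, row in data.iterrows(): graph.query(*build_movie_upsert(index, row))`, keeping punctuation such as apostrophes intact in titles and descriptions.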

Creating Vector Embeddings

Now that we’ve created the knowledge graph, we can index the movie descriptions in a vector index. This lets us handle use cases where similarity search is needed alongside graph exploration.

Here’s how you can do it:

```python
from falkordb import FalkorDB
from langchain_community.embeddings import HuggingFaceEmbeddings
from langchain_community.vectorstores.falkordb_vector import FalkorDBVector
from langchain_core.documents import Document

db = FalkorDB(host='localhost', port=6379)
graph = db.select_graph('imdb')

embedding_model = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")

# Pull the title, id, and description of every movie in the knowledge graph
query = "MATCH (m:Movie) RETURN m.title AS title, m.movie_id AS movie_id, m.description AS description"
result = graph.query(query)

# One Document per movie: the description is embedded, the rest is kept as metadata
documents = [
    Document(
        page_content=f"{row[2]}",  # row[2] is the description
        metadata={"title": row[0], "movie_id": row[1], "description": row[2]},  # row[0] title, row[1] movie_id
    )
    for row in result.result_set
]

# Embed the documents and store them in FalkorDB
vector_index = FalkorDBVector(
    host='localhost',
    port=6379,
    embedding=embedding_model,
)
vector_index.add_documents(documents)
```

Above, we’re using the all-MiniLM-L6-v2 sentence-transformers model, which produces 384-dimensional embeddings.

Now that we have both graph and vector embedding data stored in FalkorDB, we can move on to assembling the workflow.

Creating GraphState

First, we’ll define GraphState, a TypedDict describing the state that LangGraph passes between nodes throughout the workflow. Each node reads the fields it needs and returns updates to some of them, so information such as the question, retrieved documents, and movie IDs carries over from one step to the next.

Here’s what that looks like:

```python
from typing import List, TypedDict

class GraphState(TypedDict):
    """
    Represents the state of our graph.

    Attributes:
        question: question
        documents: result of chain
        movie_ids: list of ids from vector search
        prompt: prompt template object
        prompt_with_context: prompt template with context from vector search
        subqueries: decomposed queries
    """

    question: str
    documents: object  # list of dicts after vector search; a string after graph QA
    movie_ids: List[str]
    prompt: object
    prompt_with_context: object
    subqueries: object
```
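To see why each node below returns only some of the GraphState keys, here is a simplified plain-Python model of how LangGraph applies node outputs when the state is a TypedDict without reducers: each returned key overwrites the current value, and every other key carries over unchanged. This is an illustration, not LangGraph’s implementation; run_sequence and the fake_* nodes are hypothetical.

```python
from typing import Callable, Dict, List, TypedDict

class DemoState(TypedDict, total=False):
    question: str
    subqueries: List[str]
    documents: List[str]

def run_sequence(state: DemoState, nodes: List[Callable[[Dict], Dict]]) -> Dict:
    """Run nodes in order, merging each node's partial update into a copy of the state."""
    current = dict(state)  # copy so the caller's dict is not mutated
    for node in nodes:
        update = node(current)
        current.update(update)  # returned keys overwrite; untouched keys carry over
    return current

def fake_decomposer(state):
    return {"subqueries": [f"sub: {state['question']}"]}

def fake_vector_search(state):
    return {"documents": ["doc-1", "doc-2"]}

final = run_sequence({"question": "Movies about space?"}, [fake_decomposer, fake_vector_search])
print(final)
# {'question': 'Movies about space?', 'subqueries': ['sub: Movies about space?'], 'documents': ['doc-1', 'doc-2']}
```

If a field should accumulate values instead of being overwritten, LangGraph lets you attach a reducer to it with typing.Annotated; the workflow in this guide relies on plain overwriting.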

Query Routing Function

The Router Chain acts as a traffic controller for your queries, determining the best path for processing each question and directing it to the appropriate workflow or database.

For example:

- If the query involves graph relationships, it is sent to FalkorDB.

- If the query requires finding similar items, it uses vector search.

This keeps relationship lookups on the short, graph-only path and reserves the multi-step vector-plus-graph path for questions that actually need similarity search.

```python
from typing import Literal

from langchain_core.prompts import ChatPromptTemplate
from langchain_groq import ChatGroq
from pydantic import BaseModel, Field

# The router needs an LLM; replace the placeholder with your Groq API key
groq_api_key = "your_groq_api_key"
llm = ChatGroq(groq_api_key=groq_api_key, model_name="llama-3.3-70b-versatile")

class RouteQuery(BaseModel):
    """Route a user query to the most relevant datasource."""

    datasource: Literal["vector search", "graph query"] = Field(
        ...,
        description="Given a user question, choose to route it to vector search or graph query.",
    )

# Make the LLM return a RouteQuery object instead of free text
structured_llm_router = llm.with_structured_output(RouteQuery)

system = """You are an expert at routing a user question to perform vector search or graph query.
The vector store contains documents related to movie titles, summaries, and genres. Here are the two routing situations:
If the user question is about similarity search, perform vector search. The user query may include terms like similar, related, relevant, identical, closest, etc., to suggest vector search. For all else, use graph query.

Example questions for Vector Search Case:
Find movies about space exploration
Find similar movies to Interstellar that are related to black holes
Find movies with similar themes to The Matrix

Example questions for Graph QA Chain:
Find movies directed by Christopher Nolan and return their titles
Find movies released in 1997 and return their titles and genres
Find movies of a specific genre, like Action, and return their directors and release years
"""

route_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "{question}"),
    ]
)

question_router = route_prompt | structured_llm_router

def route_question(state: GraphState):
    """Return the name of the first node to run: 'decomposer' or 'graph_qa'."""
    print("---ROUTE QUESTION---")
    question = state["question"]
    source = question_router.invoke({"question": question})
    if source.datasource == "vector search":
        print("---ROUTE QUESTION TO VECTOR SEARCH---")
        return "decomposer"
    print("---ROUTE QUESTION TO GRAPH QA---")
    return "graph_qa"
```
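Each call to the LLM router costs a Groq request. For testing the workflow wiring offline, a crude keyword heuristic can stand in for question_router. This is a swapped-in technique, not the guide’s LLM router: the keyword list is an assumption assembled from the hints in the routing prompt (plus "about", taken from its first vector-search example), and keyword_route is a hypothetical name. It returns the same node names as route_question.

```python
import re

# Hypothetical hint words, based on the routing prompt above.
SIMILARITY_HINTS = {"similar", "related", "relevant", "identical", "closest", "about"}

def keyword_route(question: str) -> str:
    """Offline heuristic stand-in for the LLM router."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    if words & SIMILARITY_HINTS:
        return "decomposer"  # vector search path
    return "graph_qa"        # graph query path

print(keyword_route("Find movies about space exploration"))  # decomposer
print(keyword_route("Find movies directed by Christopher Nolan and return their titles"))  # graph_qa
```

While testing, a wrapper such as `lambda state: keyword_route(state["question"])` could be passed to the workflow’s conditional entry point in place of route_question. It will misroute questions that imply similarity without using these words, which is why the guide relies on the LLM.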

Creating Decomposer Function

Next, we’ll create a function that uses a large language model (LLM) to break down the user query into further subqueries. This is our decomposer function.

Note: To use the Llama 3.3-70B model as the LLM, you’ll need a free-tier API key from Groq.

```python
from langchain_core.output_parsers import PydanticToolsParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_groq import ChatGroq
from pydantic import BaseModel, Field

class SubQuery(BaseModel):
    """Decompose a given question/query into sub-queries"""

    sub_query: str = Field(
        ...,
        description="A unique paraphrasing of the original question.",
    )

groq_api_key = "your_groq_api_key"
llm = ChatGroq(groq_api_key=groq_api_key, model_name="llama-3.3-70b-versatile")

system = """You are an expert at converting user questions into Cypher queries. \
Perform query decomposition. Given a user question, break it down into two distinct subqueries that \
you need to answer in order to answer the original question.

For the given input question, create a query for similarity search and create a query to perform Cypher graph query.

Here is an example:
Question: Find the movies directed by Christopher Nolan and their genres.
Answers:
sub_query1 : Find movies directed by Christopher Nolan.
sub_query2 : Return the genres of the movies.
"""

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "{question}"),
    ]
)

# Expose SubQuery as a tool so the LLM emits one tool call per sub-query,
# then parse those tool calls back into SubQuery objects
llm_with_tools = llm.bind_tools([SubQuery])
parser = PydanticToolsParser(tools=[SubQuery])
query_analyzer = prompt | llm_with_tools | parser

def decomposer(state: GraphState):
    """Decompose the question into sub-queries; returns a partial GraphState update."""
    question = state["question"]
    subqueries = query_analyzer.invoke({"question": question})
    return {"subqueries": subqueries, "question": question}
```

Writing Vector Search Function

We can now write the function that will be used to perform the vector similarity search.

```python
from langchain.chains import RetrievalQA

# Used by the graph QA nodes below
from langchain_community.chains.graph_qa.falkordb import FalkorDBQAChain
from langchain_community.graphs import FalkorDBGraph

def vector_search(state: GraphState):
    """Run a vector similarity search and return the matching movie ids; returns a partial GraphState update."""
    question = state["question"]
    queries = state["subqueries"]

    # Retrieval QA chain over the FalkorDB vector index; returns the top k=3 most relevant movies
    vector_graph_chain = RetrievalQA.from_chain_type(
        llm,
        chain_type="stuff",
        retriever=vector_index.as_retriever(search_kwargs={'k': 3}),
        verbose=True,
        return_source_documents=True,
    )
    chain_result = vector_graph_chain.invoke({"query": question})
    documents = chain_result["source_documents"]

    extracted_data = []
    movie_ids = []
    for doc in documents:
        metadata = doc.metadata
        movie_id = metadata.get("movie_id", "Unknown ID")  # stored as metadata when the index was built
        description = doc.page_content  # page_content holds the movie description
        extracted_data.append({"movie_id": movie_id, "description": description})
        movie_ids.append(movie_id)

    return {"movie_ids": movie_ids, "documents": extracted_data, "question": question, "subqueries": queries}
```

Writing GraphQA Function

We’ll write a function that queries the graph and returns answers in natural language.

LangChain’s FalkorDBQAChain uses the LLM to generate a Cypher query from the question, runs that query against the knowledge graph, and then passes the results back to the LLM to phrase the answer.

For example:

- Question: “What movies did Christopher Nolan direct?”

- Answer: “Christopher Nolan has directed ‘The Dark Knight’, ‘Inception’, ‘The Prestige’, and ‘Interstellar’.”

```python
def graph_qa(state: GraphState):
    """Answer the question with a Graph QA chain; returns a partial GraphState update."""
    question = state["question"]

    # Wrap the 'imdb' graph so the chain can read its schema and run Cypher against it
    graph = FalkorDBGraph(database="imdb")

    # The chain executes LLM-generated Cypher, so this must be explicitly allowed
    chain = FalkorDBQAChain.from_llm(
        llm,
        graph=graph,
        verbose=True,
        allow_dangerous_requests=True,
    )

    result = chain.invoke({"query": question})["result"]
    return {"documents": result, "question": question}
```

GraphQA With Context

Finally, we’ll write a function that uses the context retrieved from vector search and enhances it with data from the graph.

```python
def graph_qa_with_context(state: GraphState):
    """Look up the vector-search hits in the graph; returns a partial GraphState update."""
    graph = FalkorDBGraph(database="imdb")
    queries = state["subqueries"]
    context = state["documents"]  # descriptions returned by vector search
    print(context)

    # Ask the chain for the details of the movies found by vector search
    inputquery = f"""return titles and description of movies with movie_id {state["movie_ids"]}"""

    graph_qa_chain = FalkorDBQAChain.from_llm(
        llm,
        graph=graph,
        verbose=True,
        allow_dangerous_requests=True,
        return_intermediate_steps=True,
    )
    result = graph_qa_chain.invoke({"query": inputquery})

    # intermediate_steps[0] holds the generated Cypher, [1] the raw query results
    return {"documents": f"""{result['intermediate_steps'][1]['context']}""", "subqueries": queries}
```
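Here the LLM is asked to turn a natural-language instruction containing the IDs into Cypher. Because the IDs are already known at this point, a deterministic lookup is a possible alternative that skips Cypher generation. The sketch below uses a hypothetical helper, movies_by_id_query (not part of LangChain or FalkorDB), and assumes each Movie node carries the same movie_id values that were stored as vector-index metadata; if the IDs come back as strings, cast them to match the stored type.

```python
from typing import Dict, Iterable, Tuple

def movies_by_id_query(movie_ids: Iterable[int]) -> Tuple[str, Dict[str, object]]:
    """Build a parameterized Cypher lookup for the movies found by vector search."""
    query = (
        "MATCH (m:Movie) WHERE m.movie_id IN $ids "
        "RETURN m.title AS title, m.description AS description"
    )
    # The ids travel as a parameter, so no string interpolation into Cypher is needed.
    return query, {"ids": list(movie_ids)}

query, params = movies_by_id_query([12, 40, 7])
print(params)  # {'ids': [12, 40, 7]}
```

Usage would look like `rows = graph.query(*movies_by_id_query(state["movie_ids"])).result_set`, where graph is the FalkorDB client graph from `db.select_graph('imdb')` rather than the LangChain FalkorDBGraph wrapper. The trade-off is flexibility: the LLM-generated version can adapt to follow-up instructions, while the fixed query always returns the same columns.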

LangGraph Workflow

We can now stitch together everything in LangGraph. Here’s how:

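```python
from langchain_core.prompts import ChatPromptTemplate
from langgraph.graph import END, StateGraph

# `respond` is not defined elsewhere in this guide; this is a minimal sketch based on the
# node description above: the LLM answers the question using the context in state["documents"].
respond_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", "Answer the user's question using only this context from the movie graph:\n{context}"),
        ("human", "{question}"),
    ]
)

def respond(state: GraphState):
    answer = (respond_prompt | llm).invoke({"context": state["documents"], "question": state["question"]})
    return {"documents": answer.content}

workflow = StateGraph(GraphState)

# Nodes for graph qa
workflow.add_node("graph_qa", graph_qa)

# Nodes for graph qa with vector search
workflow.add_node("decomposer", decomposer)
workflow.add_node("vector_search", vector_search)
workflow.add_node("graph_qa_with_context", graph_qa_with_context)
workflow.add_node("respond", respond)

# Set conditional entry point for vector search or graph qa
workflow.set_conditional_entry_point(
    route_question,
    {
        'decomposer': "decomposer",  # vector search
        'graph_qa': "graph_qa",      # for graph qa
    },
)

# Edges for graph qa with vector search
workflow.add_edge("decomposer", "vector_search")
workflow.add_edge("vector_search", "graph_qa_with_context")
workflow.add_edge("graph_qa_with_context", "respond")
workflow.add_edge("respond", END)

# Edges for graph qa
workflow.add_edge("graph_qa", END)

app = workflow.compile()
```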

LangGraph makes it easy to visualize your workflow. You can generate the workflow diagram as follows:

```python
from IPython.display import Image, display

try:
    display(Image(app.get_graph().draw_mermaid_png()))
except Exception:
    # This requires some extra dependencies and is optional
    pass
```

In a notebook, this renders the workflow as a diagram.

Here’s an explanation of the flow:

- If the question is routed to decomposer:

- The flow moves from decomposer to vector_search for a similarity-based search.

- After vector_search, it moves to graph_qa_with_context for further refinement and context-aware querying.

- Finally, the flow reaches respond, where the response is generated.

- If the question is routed to graph_qa:

- The flow directly reaches graph_qa, where the question is answered using the graph database.

- After graph_qa, the flow moves to END, where the workflow terminates.

Results

We can now test our workflow. This can be done in the following manner:

```python
graph_qa_result = app.invoke({"question": "Which movies did Christopher Nolan direct?"})
```

The verbose chain output shows the Cypher query generated from the question and the rows it returned; the final answer is stored in graph_qa_result["documents"]:

```
MATCH (d:Director {name: 'Christopher Nolan'})-[:DIRECTED]->(m:Movie) RETURN m.title, m.year

Full Context:
[['The Dark Knight', 2008], ['Inception', 2010], ['Interstellar', 2014], ['The Prestige', 2006], ['The Dark Knight Rises', 2012], ['Memento', 2000], ['Batman Begins', 2005], ['Dunkirk', 2017]]
```

Summary

In this article, we explored how FalkorDB can be used to build a GraphRAG workflow with LangChain and LangGraph. By combining graph queries, semantic retrieval, and hybrid searches, FalkorDB offers a powerful way to manage and explore connected data.

Ready to get started? Install FalkorDB locally, sign up for FalkorDB Cloud, or dive deeper into its integration with LangChain.