Highlights

- NoSQL databases excel in high-velocity environments with distributed architectures that support horizontal scaling, fault tolerance, and schema flexibility—critical for AI workloads and real-time analytics.

- Graph databases like FalkorDB combine NoSQL's schema flexibility with relationship-first architecture, enabling complex traversals without performance bottlenecks in AI-driven applications.

- NoSQL implementations require strategic data partitioning, optimized query processing, and distributed computing frameworks to achieve sub-millisecond performance at scale.

Modern applications generate vast amounts of data—structured, semi-structured, and unstructured—from AI models, social media, financial transactions, and real-time analytics. If you’re relying on traditional relational databases, you’ve likely faced their biggest limitation: rigid schemas. Schema changes and data migrations can be a nightmare, and as your data scales, join operations become costly, slowing down performance and limiting scalability.

That’s where NoSQL databases come in. With schema flexibility, distributed storage, and partitioned processing across multiple nodes, NoSQL databases let you handle real-time analytics, AI-powered recommendations, and high-volume data ingestion—without compromising performance or fault tolerance.

In this article, we’ll explore different types of NoSQL databases, their architectures, and real-world implementation scenarios. We’ll also dive into key technical requirements for distributed systems, including performance considerations, and demonstrate how FalkorDB powers AI-driven applications with next-level scalability.

What Are NoSQL Databases?

NoSQL databases are powerful, non-relational storage systems designed to handle massive volumes of structured, semi-structured, and unstructured data with speed and scalability. Unlike traditional relational databases that rely on rigid schemas and SQL constraints, NoSQL databases offer flexible models optimized for distributed processing and real-time workloads.

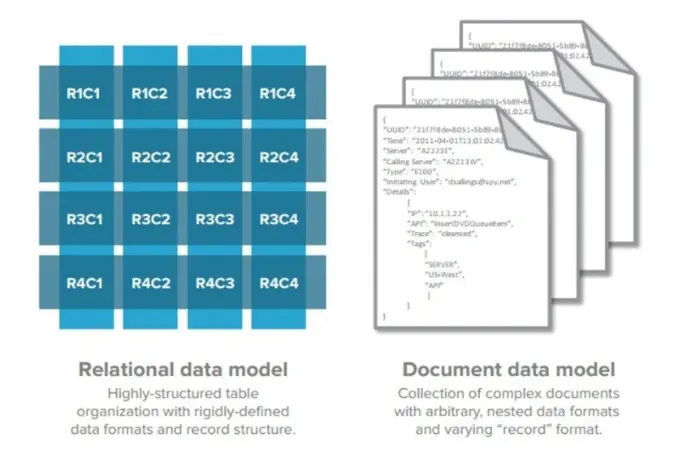

Data Modeling Differences: NoSQL vs SQL

The data modeling approaches of NoSQL and SQL databases reveal fundamental differences in structure and flexibility.

Flexibility and Development Approach:

- NoSQL databases support an ‘application-first’ or API-first development pattern. This means developers focus initially on queries needed for specific application functionalities, rather than predefined data models. This approach aligns closely with agile and DevOps practices, allowing for swift adjustments to data models as applications evolve.

Structured vs. Unstructured Models:

- Traditional SQL databases impose a rigid, schema-based structure. Tables, consisting of columns and rows, are designed to create clear, enforceable relationships among entities. Each table defines an entity, and relationships are managed through schemas and database rules, ensuring data uniformity and consistency.

Control and Management:

- In SQL environments, database administrators typically manage schemas, which can lead to a more centralized control over data models but may introduce delays in development cycles. In contrast, NoSQL databases decentralize control, empowering developers to independently adapt data structures, accelerating development and enhancing agility.

Error-Prone Flexibility:

- While NoSQL’s flexible model allows multiple ‘shapes’ of data objects to coexist, this flexibility can sometimes lead to errors and inconsistencies if not managed carefully.

Using decentralized architectures, horizontal scaling, and eventual consistency, NoSQL databases overcome the limitations of relational databases in managing high-velocity, large-scale data. This makes them the go-to choice for big data applications, AI-driven analytics, and real-time processing at scale.

Key Characteristics of NoSQL Databases

Companies like Netflix and Amazon rely on NoSQL databases to power recommendation engines, fraud detection systems, and high-throughput web applications. These databases are essential for applications requiring low latency, high-speed transactions, and scalable storage solutions.

Below are the key characteristics of NoSQL databases:

- Schema Flexibility: No predefined schema; data structures can evolve dynamically.

- Horizontal Scalability: Designed to distribute data across multiple servers for better performance.

- High Availability & Partition Tolerance: Supports distributed architectures, ensuring minimal downtime.

- Optimized for Big Data & Real-time Applications: Handles high read/write loads efficiently.

- Diverse Data Models: Supports document-based, key-value, column-family, and graph-based storage.

Data Challenges Driving NoSQL Adoption

Modern applications generate massive amounts of fast, diverse data from production systems. As your data grows rapidly, traditional relational databases can struggle with scalability, schema rigidity, and high latency—leading to performance bottlenecks that slow down your applications.

Below are some of the potential challenges that relational databases face:

- Scalability Issues: Sharding and partitioning relational data can quickly become complex. Once your data outgrows a single database instance, scaling becomes a challenge, requiring intricate data distribution strategies.

- Schema Rigidity: Relational databases rely on predefined schemas, making schema changes costly and time-consuming. If your application evolves dynamically, frequent data migrations can slow down development and introduce downtime.

- High-Cost Joins and Query Performance: SQL databases rely heavily on join operations, which become increasingly expensive as data scales. Take an e-commerce platform, for example—retrieving customer orders, product details, and shipment history often requires multiple joins across massive tables. As order volumes grow, these queries become computationally heavy, slowing performance and increasing infrastructure costs.

- Real-Time Data Processing Limitations: Many relational databases aren’t built for high-throughput, low-latency applications like AI, IoT, or real-time financial transactions. If your system demands real-time analytics, relational architectures can struggle to keep up.

- Sharding and Replication Complexity: Scaling SQL databases across multiple nodes requires manual sharding while maintaining referential integrity—this adds significant complexity to your queries. Replication helps improve availability but can lead to write-heavy workloads, lag, and consistency challenges.

NoSQL databases are designed to overcome these limitations. They offer horizontal scaling, flexible schemas, and distributed data management, ensuring high availability, low latency, and real-time performance—so your applications can scale effortlessly without sacrificing speed.

Technical Requirements for Modern NoSQL Data Architecture

A modern NoSQL database is built to handle large-scale, high-velocity data streams with ease—scaling effortlessly while staying highly available and fault-tolerant. Unlike traditional relational databases that rely on centralized structures, NoSQL systems embrace a distributed approach, spreading data across multiple nodes for lightning-fast storage, retrieval, and parallel processing.

In this section, we’ll dive into the core attributes that make NoSQL databases scalable and highly efficient.

Storage Engine

The storage engine manages data storage, indexing, and retrieval in NoSQL databases. LSM-Trees optimize write-heavy operations by batching writes and merging them efficiently. B-Trees improve read performance by organizing data for fast lookups. Columnar storage enhances analytical queries by grouping related data in columns, making large-scale data analysis more efficient.
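To make the LSM-Tree write path concrete, here is a minimal sketch in plain Python. It is a toy model, not how any particular database implements its engine: the `TinyLSM` class, its `memtable_limit` parameter, and the in-memory "runs" are all assumptions made for illustration. It shows writes landing in a memtable, being flushed as sorted batches, and being merged by compaction.

```python
import bisect


class TinyLSM:
    """Toy LSM-tree: an in-memory memtable plus immutable sorted runs."""

    def __init__(self, memtable_limit=3):
        self.memtable = {}   # most recent writes, unsorted
        self.runs = []       # flushed runs, oldest first; each is a sorted list of (key, value)
        self.memtable_limit = memtable_limit

    def put(self, key, value):
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self._flush()

    def _flush(self):
        # One sequential write of a sorted batch instead of many random writes.
        self.runs.append(sorted(self.memtable.items()))
        self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        for run in reversed(self.runs):  # the newest run wins
            i = bisect.bisect_left(run, (key,))
            if i < len(run) and run[i][0] == key:
                return run[i][1]
        return None

    def compact(self):
        # Merge all runs into one, keeping only the newest value per key.
        merged = {}
        for run in self.runs:  # oldest first, so newer values overwrite older ones
            merged.update(run)
        self.runs = [sorted(merged.items())]
```

Reads check the memtable first and then the runs from newest to oldest, which is why LSM-based engines favor writes and rely on compaction to keep reads cheap.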

Data Model & Schema Flexibility

NoSQL databases offer schema flexibility, allowing your data structures to evolve dynamically as your application grows. Document stores, a type of NoSQL database, use JSON/BSON formats to store data, giving you the freedom to structure and modify it as needed, though you’ll need to handle relationship queries yourself. Graph databases, another form of NoSQL, also support JSON storage while excelling at modeling relationships between data points, making complex queries faster and more efficient.

Distributed Storage & Data Partitioning

NoSQL databases distribute data across multiple nodes, giving you scalability and fault tolerance right out of the box. With this setup, you can scale horizontally, balance workloads efficiently, and maintain high performance as your data grows. Many NoSQL databases also offer replication, keeping multiple nodes in sync to ensure availability even during failures. Plus, you get the flexibility to choose from different consistency models, letting you trade off between strong consistency and performance based on your application’s needs.

Query Processing & Indexing

NoSQL databases use secondary indexing, inverted indexing, and materialized views to optimize queries. Secondary indexing speeds up lookups, reducing query execution time. Inverted indexing enhances full-text search, improving retrieval efficiency. Materialized views precompute query results, reducing processing overhead.
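As an illustration of inverted indexing, the sketch below builds a token-to-document map and answers a multi-word search by intersecting posting sets. The function names and sample documents are made up for this example, and real full-text indexes add tokenization rules, ranking, and on-disk structures that are left out here.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each lowercase token to the set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            index[token].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents that contain every token in the query."""
    tokens = query.lower().split()
    if not tokens:
        return set()
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result


docs = {
    1: "graph databases store relationships",
    2: "document databases store JSON",
    3: "key value stores are fast",
}
index = build_inverted_index(docs)
matches = search(index, "databases store")  # documents 1 and 2
```

The lookup never scans document text: it only touches the posting sets for the query tokens, which is where the retrieval speedup comes from.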

Distributed Computing & Execution Layer

NoSQL databases seamlessly integrate with distributed computing frameworks, giving you the power to handle large-scale data processing with ease. With batch processing, you can efficiently manage massive datasets, ensuring smooth and optimized performance. Streaming engines process real-time data, supporting event-driven applications. Parallel query execution distributes workloads, reducing latency in high-performance environments.

Transaction Management & Concurrency Control

NoSQL databases offer alternative transaction models, giving you the flexibility to balance performance and consistency based on your needs. With multi-version concurrency control (MVCC), you can prevent conflicts and allow simultaneous reads and writes without blocking operations. Optimistic and pessimistic locking manage concurrent access, reducing data inconsistencies. Lightweight transactions provide atomic operations, ensuring data integrity without performance loss.
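Optimistic locking is easiest to see as a version check at write time. The following sketch assumes a hypothetical in-memory `VersionedStore`; it is not tied to any specific database API. A write succeeds only if the caller still holds the version it read, so a concurrent update is detected instead of silently overwritten.

```python
class VersionConflict(Exception):
    """Raised when a write is based on a stale version."""


class VersionedStore:
    def __init__(self):
        self._data = {}  # key -> (version, value)

    def read(self, key):
        # Missing keys are reported as version 0 with no value.
        return self._data.get(key, (0, None))

    def write(self, key, value, expected_version):
        current_version, _ = self._data.get(key, (0, None))
        if current_version != expected_version:
            raise VersionConflict(
                f"{key}: expected version {expected_version}, found {current_version}"
            )
        self._data[key] = (current_version + 1, value)
        return current_version + 1


store = VersionedStore()
version, _ = store.read("cart")                      # both writers read version 0
store.write("cart", ["book"], expected_version=version)

try:
    store.write("cart", ["pen"], expected_version=version)  # stale version
    conflict_detected = False
except VersionConflict:
    conflict_detected = True
```

The losing writer typically re-reads the record and retries, which avoids holding locks while still preventing lost updates.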

Security & Access Control

NoSQL databases come with robust security features to help you protect sensitive data. With Role-based Access Control (RBAC), you can control access and prevent unauthorized modifications to your data. Encryption ensures the data stays secure both in transit and at rest, safeguarding it from potential breaches. Plus, with logging and auditing, you can monitor database activity and maintain compliance with security regulations, offering full visibility and control over the system’s security.

High Availability & Fault Tolerance

NoSQL databases keep the applications running smoothly with continuous availability powered by distributed architectures. If a node fails, automatic failover detects the issue and redirects traffic to healthy nodes, ensuring minimal disruption. Replication spreads the data across multiple nodes, reducing the risk of data loss and keeping your system resilient. Plus, with self-healing clusters, failed nodes are automatically restored, so you can maintain reliability with little to no downtime.

NoSQL Database Types

NoSQL databases come in different types, each optimized for specific data structures and workloads. Below, we’ll discuss four types of NoSQL databases that are widely used: Document Stores, Graph Databases, Key-Value Stores, and Column-Family Stores (or Columnar Databases).

Document Stores: Flexible Schema Storage

Document stores, or document databases, store data in JSON or BSON formats. They support flexible schemas, so you can store data whose structure evolves over time. Unlike relational databases, they let you store nested JSON objects, arrays, or key-value pairs.

Document stores offer rich indexing and complex JSON queries, and allow you to scale horizontally through sharding. These features make document databases ideal for a wide range of applications where data can be represented as JSON-structured documents, and you need high throughput.

However, there is a downside. With pure document stores, it can be a struggle to model complex relationships. Unlike relational databases, where you can explicitly model relationships using foreign keys, use join queries, and enforce referential integrity, NoSQL databases often require manual handling of relationships. This can lead to data duplication and increased query complexity when linking related records.

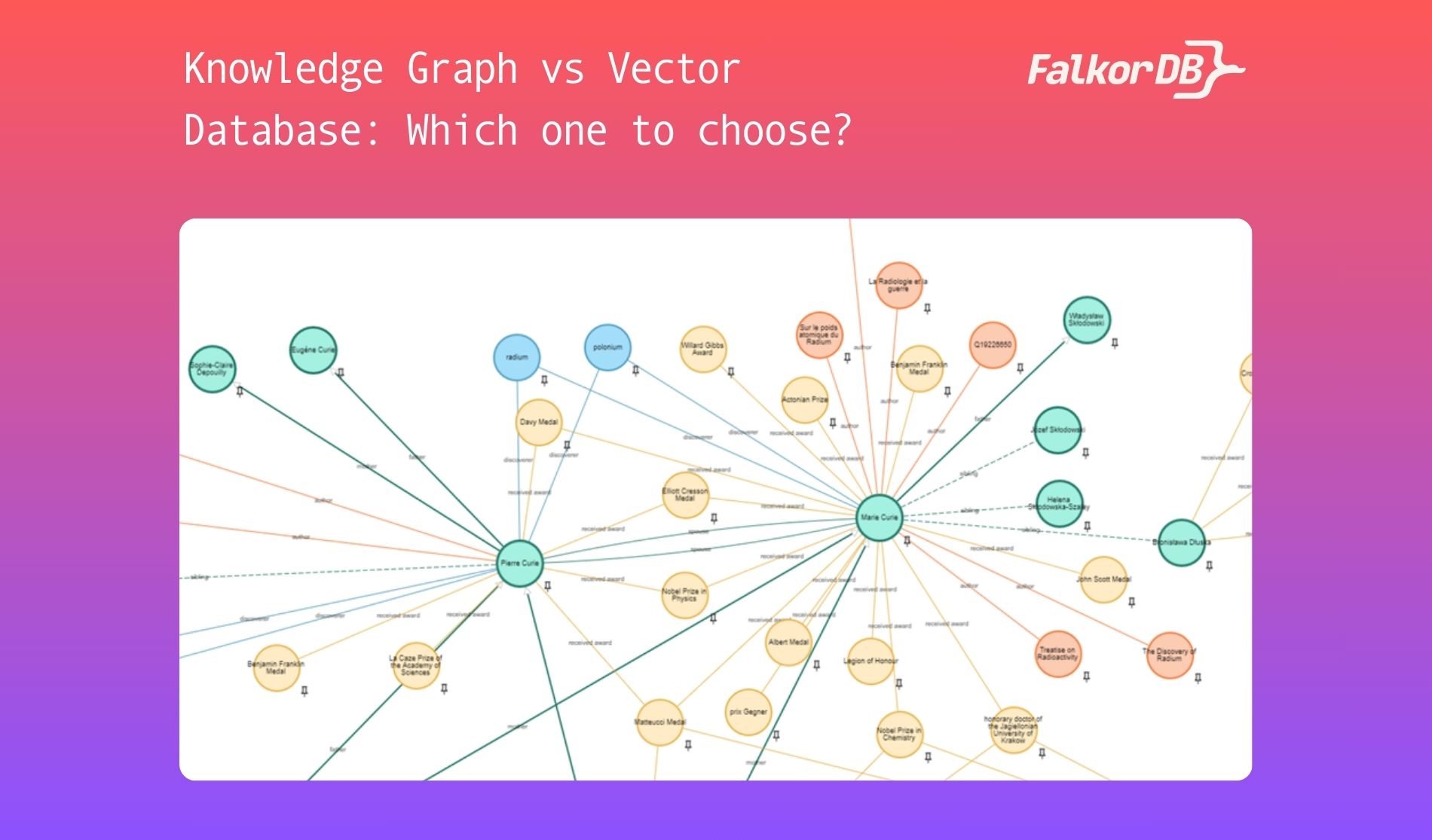

Graph Databases: Native Relationship Processing

Graph databases are specialized NoSQL databases where you model data as nodes and edges. Nodes represent entities, edges represent relationships, and you associate labels and properties to each. Properties can be used to store data in JSON format.

Graph databases allow for a flexible schema while also letting you model relationships between data using edges, which helps with relationship-based queries. Because graph databases store relationships as first-class objects, they support fast traversal through connections and complex queries with minimal overhead. You can also use node and edge properties to store deeply nested JSON data.

Graph databases, therefore, combine the strengths of both SQL and NoSQL systems. Like SQL databases, they provide structured knowledge modeling through Cypher, a powerful query language designed for expressing complex relationships. At the same time, they function like NoSQL databases by associating key-value properties with nodes and edges, allowing flexible, schema-free data storage. This makes graph databases a unique NoSQL category that not only scales efficiently but also structures data intuitively for AI, recommendation engines, and network analysis.
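To show why storing relationships directly makes traversal cheap, here is a small sketch that walks an adjacency list breadth-first. The `neighbors_within` function and the `follows` data are hypothetical and stand in for what a graph engine does natively. Each hop is a direct lookup of a node's outgoing edges rather than a join across tables.

```python
from collections import deque


def neighbors_within(graph, start, max_hops):
    """Breadth-first traversal returning nodes reachable within max_hops edges."""
    seen = {start}
    frontier = deque([(start, 0)])
    reached = []
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                reached.append(nxt)
                frontier.append((nxt, depth + 1))
    return reached


# Adjacency list: each key maps to the nodes it points to.
follows = {"alice": ["bob"], "bob": ["carol"], "carol": ["dave"]}

two_hops = neighbors_within(follows, "alice", 2)
```

In a graph database the same idea is expressed declaratively, for example with a variable-length path pattern in Cypher, and the engine performs the traversal for you.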

Key-Value Stores: High Throughput Data Access

Key-value stores manage data as pairs, where each unique key is linked to a value that can be a simple type or a complex object like JSON. This simple setup reduces processing overhead, resulting in very fast data retrieval.

These databases are designed to scale out horizontally. Techniques such as consistent hashing help distribute data evenly across multiple nodes, which boosts performance and ensures fault tolerance. Many key-value systems combine in-memory storage for sub-millisecond access with persistent storage for data durability and safety.
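Consistent hashing can be sketched in a few lines. The `HashRing` class below is an illustrative assumption (including the choice of SHA-256 and 100 virtual nodes per server), not the algorithm of any specific key-value store. It shows the key property: when a node joins, only the keys that now belong to the new node move.

```python
import bisect
import hashlib


class HashRing:
    def __init__(self, nodes, vnodes=100):
        self._ring = []        # sorted list of (hash, node) points on the ring
        self._vnodes = vnodes  # virtual nodes per physical node, for smoother balance
        for node in nodes:
            self.add(node)

    @staticmethod
    def _hash(value):
        return int(hashlib.sha256(value.encode()).hexdigest(), 16)

    def add(self, node):
        for i in range(self._vnodes):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def remove(self, node):
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def node_for(self, key):
        if not self._ring:
            raise ValueError("ring is empty")
        hashes = [h for h, _ in self._ring]
        # First ring point clockwise from the key's hash, wrapping around at the end.
        i = bisect.bisect(hashes, self._hash(key)) % len(self._ring)
        return self._ring[i][1]


ring = HashRing(["node-a", "node-b", "node-c"])
keys = [f"user:{i}" for i in range(1000)]
before = {k: ring.node_for(k) for k in keys}

ring.add("node-d")
after = {k: ring.node_for(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
```

Every key in `moved` now maps to `node-d`; keys owned by the other nodes stay where they were, which is what keeps rebalancing cheap when the cluster grows.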

Key-value stores also support features like replication and sharding to improve availability and enable atomic operations, ensuring safe, concurrent data modifications in distributed environments.

Key-value stores offer the speed and scalability needed for modern high-performance applications. They are widely used in caching, session management, real-time analytics, and microservices architectures.

Column-Family Stores: Wide-Column Data Organization

Column-family stores help optimize data retrieval by grouping related columns together instead of storing all columns of a row in a single block. This means your system only reads the columns you actually need, reducing input/output work and speeding up analytical queries.

To keep your system highly available, column-family stores replicate data across multiple nodes, ensuring that if one node fails, the data remains accessible. They use a log-structured storage approach, where write operations are batched and stored sequentially. This reduces random disk access and significantly improves performance for heavy write workloads.

With wide-row support, you can store large numbers of columns efficiently while benefiting from column-level compression and fast column scans. These features make column-family stores a great choice for real-time analytics, event logging, and large-scale big data applications.
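The benefit of reading only the columns you need can be illustrated by pivoting rows into per-column lists. This is a simplified in-memory sketch with made-up event data, and it ignores compression and on-disk layout. An aggregate over one column then touches only that column's values.

```python
rows = [
    {"event": "click", "user": "alice", "latency_ms": 12},
    {"event": "view", "user": "bob", "latency_ms": 30},
    {"event": "click", "user": "carol", "latency_ms": 18},
]


def to_columns(rows):
    """Pivot a list of row dicts into one list of values per column."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)

# The average reads the latency column only; "event" and "user" are never touched.
latencies = columns["latency_ms"]
average_latency = sum(latencies) / len(latencies)
```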

NoSQL Implementation Scenarios

NoSQL databases are built for scalability, flexibility, and high-performance data handling in modern applications. Let’s look at the scenarios where NoSQL databases are typically used:

High-Velocity Data Processing Systems

If you’re dealing with real-time, high-speed data streams—like financial transactions, social media feeds, or IoT telemetry—traditional relational databases will slow you down. They struggle with write-heavy operations and strict consistency, creating bottlenecks that impact performance.

NoSQL databases use sharding, distributed storage, and event-driven processing to handle high-speed data, which also makes them suitable for Online Analytical Processing (OLAP) workloads. They enable append-only writes, parallel execution, and eventual consistency, supporting low-latency ingestion and real-time analytics. NoSQL is a good fit for log processing, telemetry data, and high-frequency trading platforms.

Complex Relationship Mapping

If your application relies on relationships—think fraud detection, recommendation engines, or knowledge graphs—relational databases can be a nightmare. Joins across massive tables make queries painfully slow and inefficient.

NoSQL graph databases treat relationships as first-class entities, making it easy to traverse data quickly, leverage distributed indexing, and execute complex queries efficiently. Unlike relational databases that slow down with costly joins, graph databases are built to handle deeply connected data at scale.

Following the BASE (Basically Available, Soft state, Eventually consistent) model, NoSQL databases ensure high scalability and resilience in distributed environments. This makes them ideal for anomaly detection, AI-driven insights, and relationship-based search, all while minimizing query overhead. You’ll find NoSQL graph databases powering social networks, cybersecurity analytics, fraud detection, and real-time AI-driven decision-making systems—where fast, scalable relationship mapping is critical.

Large-Scale Distributed Systems

Running a global application? You need high availability, fault tolerance, and multi-region replication—things relational databases struggle with due to their centralized architectures.

NoSQL databases scale horizontally, distribute data across multiple nodes, and support multi-replica storage. With leaderless replication, automatic failover, and self-healing clusters, they can sustain availability targets such as 99.99% uptime under heavy workloads, depending on how the cluster is deployed and operated. NoSQL is critical for cloud-native applications, e-commerce platforms, and global content delivery networks.

How FalkorDB Optimizes NoSQL Database Performance

FalkorDB is a modern, distributed NoSQL graph database built for high-performance applications requiring ultra-low latency, real-time AI, and scalable knowledge graph management.

- Distributed Architecture: Provides fault tolerance and horizontal scalability.

- Multi-Model Support: Handles various data types, including document, key-value, and graph.

- Optimized Performance: Ensures high throughput with low latency for real-time operations.

- Enterprise-Grade Features: Offers built-in replication, backup, and robust security.

FalkorDB is built to enhance AI workloads, combining graph-based data modeling, distributed query execution, and in-memory processing for lightning-fast performance. Its native graph architecture makes traversing complex relationships effortless, while automatic sharding and parallel query execution ensure ultra-low-latency retrieval across distributed nodes.

FalkorDB delivers a scalable, AI-ready NoSQL solution designed for high-performance workloads—so you can build faster, smarter AI applications without hitting database bottlenecks.

Building a Distributed NoSQL Database System with FalkorDB SDK

To demonstrate why FalkorDB is a step up over traditional NoSQL databases, we’ll show you how to insert JSON structured data as well as create relationships between data points. We’ll then show how to query and find objects based on properties, or query using relationships.

1. Set Up FalkorDB Using Docker

To get started with FalkorDB quickly, you can run an instance using Docker. Here’s how:

docker run -p 6379:6379 -p 3000:3000 -it --rm -v ./data:/data falkordb/falkordb:edge

After launching, navigate to http://localhost:3000 in your browser to launch the FalkorDB Browser and interact with the database.

2. Install FalkorDB Client

FalkorDB has a Python client that allows you to interact with the database. Here’s how you install it.

pip install falkordb

3. Connect to FalkorDB Using Python

Since FalkorDB follows a schema-less approach, we can store dynamic and flexible data structures without rigid constraints.

Before we start with the code, let’s connect to FalkorDB using the Python client:

from falkordb import FalkorDB

db = FalkorDB(host='localhost', port=6379)

graph = db.select_graph('graph')

4. Insert Sample Data

Now, we can insert sample data into the system by creating nodes (representing entities) and edges (defining the relationships between them). Each node will include rich JSON-structured metadata, capturing attributes like personal details, professional background, skills, and affiliations. These structured data points will enable efficient querying and relationship traversal within the graph database.

# Creating Nodes with Metadata

graph.query("""

CREATE (:Person {

name: 'Alice', age: 30, gender: 'Female', city: 'New York', job: 'Engineer',

company: 'TechCorp', email: 'alice@example.com', phone: '123-456-7890',

linkedin: 'linkedin.com/alice', twitter: '@alice_tech',

hobbies: ['reading', 'hiking', 'photography'], languages: ['English', 'Spanish'],

skills: ['Python', 'Machine Learning', 'Data Science'], experience: 8,

certifications: ['AWS Certified', 'PMP'], education: 'MIT',

marital_status: 'Single', nationality: 'American', height: 5.6, weight: 140

})

""")

graph.query("""

CREATE (:Person {

name: 'Bob', age: 25, gender: 'Male', city: 'San Francisco', job: 'Designer',

company: 'CreativeWorks', email: 'bob@example.com', phone: '987-654-3210',

linkedin: 'linkedin.com/bob', twitter: '@bob_creative',

hobbies: ['drawing', 'traveling', 'gaming'], languages: ['English', 'French'],

skills: ['Photoshop', 'Illustrator', 'UI/UX'], experience: 5,

certifications: ['Adobe Certified', 'UX Master'], education: 'Stanford',

marital_status: 'Married', nationality: 'Canadian', height: 5.9, weight: 160

})

""")

graph.query("""

CREATE (:Company {

name: 'TechCorp', industry: 'Technology', location: 'New York',

employees: 5000, revenue: '5B', ceo: 'John Doe',

founded: 2005, website: 'www.techcorp.com', stock_symbol: 'TCORP',

headquarters: 'NYC', services: ['Cloud Computing', 'AI', 'Cybersecurity'],

clients: ['Company A', 'Company B'], partners: ['Google', 'Microsoft'],

annual_growth: 10, funding_rounds: 5, acquired_companies: ['StartupX'],

sustainability_rating: 'A', twitter: '@TechCorp', linkedin: 'linkedin.com/techcorp'

})

""")

graph.query("""

CREATE (:Project {

name: 'AI Research', budget: 2000000, duration: '2 years',

team_size: 20, technologies: ['Python', 'TensorFlow', 'Kubernetes'],

start_date: '2024-01-01', end_date: '2025-12-31', sponsor: 'TechCorp',

lead: 'Alice', progress: '50%', risks: ['Data Security', 'Scalability'],

deliverables: ['Model Prototype', 'Research Paper'], regulatory_approvals: true,

ethical_considerations: ['Bias Mitigation', 'Fairness'],

partners: ['University X', 'OpenAI'], funding_source: 'Venture Capital'

})

""")

graph.query("""

CREATE (:Event {

name: 'Tech Summit 2025', location: 'San Francisco',

date: '2025-06-15', duration: '3 days', attendees: 5000,

speakers: ['Alice', 'Bob', 'Elon Musk'], sponsors: ['TechCorp', 'Tesla'],

topics: ['AI', 'Blockchain', 'Cybersecurity'], ticket_price: 499,

venue: 'SF Convention Center', online_stream: true,

twitter: '@TechSummit', linkedin: 'linkedin.com/techsummit',

partners: ['Google', 'AWS'], previous_years_attendance: [4000, 4500, 4700],

media_coverage: ['TechCrunch', 'Wired']

})

""")

# Creating Relationships

graph.query("""

MATCH (a:Person {name: 'Alice'}), (b:Person {name: 'Bob'})

CREATE (a)-[:FRIENDS_WITH]->(b)

""")

graph.query("""

MATCH (p:Person {name: 'Alice'}), (c:Company {name: 'TechCorp'})

CREATE (p)-[:WORKS_AT]->(c)

""")

# No CreativeWorks node was created above, so MERGE creates it if it is missing

graph.query("""

MATCH (p:Person {name: 'Bob'})

MERGE (c:Company {name: 'CreativeWorks'})

CREATE (p)-[:WORKS_AT]->(c)

""")

graph.query("""

MATCH (p:Person {name: 'Alice'}), (pr:Project {name: 'AI Research'})

CREATE (p)-[:LEADS]->(pr)

""")

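graph.query("""

MATCH (p:Person {name: 'Bob'}), (e:Event {name: 'Tech Summit 2025'})

CREATE (p)-[:SPEAKER_AT]->(e)

""")

# Link TechCorp to the event so the SPONSORS query later in this article returns a row

graph.query("""

MATCH (c:Company {name: 'TechCorp'}), (e:Event {name: 'Tech Summit 2025'})

CREATE (c)-[:SPONSORS]->(e)

""")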

5. NoSQL Querying

FalkorDB allows you to query data efficiently using its property-based querying model. Whether you’re searching for specific attributes, filtering by conditions, or traversing relationships, the database delivers high-performance query execution.

Here’s how you can structure different types of queries:

String-Based Match

You can retrieve a node by matching an exact property value. This method is effective for direct lookups, such as identifying a person by name.

res = graph.query("""

MATCH (p:Person {name: 'Alice'})

RETURN p

""")

for row in res.result_set:

    print(row[0])

The result:

(:Person{age:30,certifications:['AWS Certified', 'PMP'],city:"New York",company:"TechCorp",education:"MIT",email:"alice@example.com",experience:8,gender:"Female",height:5.6,hobbies:['reading', 'hiking', 'photography'],job:"Engineer",languages:['English', 'Spanish'],linkedin:"linkedin.com/alice",marital_status:"Single",name:"Alice",nationality:"American",phone:"123-456-7890",skills:['Python', 'Machine Learning', 'Data Science'],twitter:"@alice_tech",weight:140})

Range-Based Match

You can filter data using numerical comparisons. This is useful for retrieving records that meet a specific condition, such as finding all individuals above a certain age.

res = graph.query("""

MATCH (p:Person)

WHERE p.age > 28

RETURN p.name, p.age

""")

for row in res.result_set:

    print(f"Name: {row[0]}, Age: {row[1]}")

The result:

Name: Alice, Age: 30

Array/List-Based Match

You can check if a specific value exists within an array or list property. This approach is effective for filtering nodes based on attributes like skills, interests, or certifications.

res = graph.query("""

MATCH (p:Person)

WHERE 'Python' IN p.skills

RETURN p.name, p.skills

""")

for row in res.result_set:

    print(f"Name: {row[0]}, Skills: {row[1]}")

The result:

Name: Alice, Skills: ['Python', 'Machine Learning', 'Data Science']

Query by Event Name

You can fetch event details by matching attributes such as name, location, or date. This method helps retrieve structured event data, including attendees, sponsors, and media coverage.

# Example: Query Event by Name

res = graph.query("""

MATCH (e:Event {name: 'Tech Summit 2025'})

RETURN e

""")

for row in res.result_set:

    print(row[0])

The result:

(:Event{attendees:5000,date:"2025-06-15",duration:"3 days",linkedin:"linkedin.com/techsummit",location:"San Francisco",media_coverage:['TechCrunch', 'Wired'],name:"Tech Summit 2025",online_stream:True,partners:['Google', 'AWS'],previous_years_attendance:[4000, 4500, 4700],speakers:['Alice', 'Bob', 'Elon Musk'],sponsors:['TechCorp', 'Tesla'],ticket_price:499,topics:['AI', 'Blockchain', 'Cybersecurity'],twitter:"@TechSummit",venue:"SF Convention Center"})

Query Using Relationships

You can retrieve insights by querying relationships between nodes in FalkorDB. This is where FalkorDB stands out over traditional NoSQL databases.

Combining graph queries with property queries lets you write precise queries over your data and pass the results as context to a large language model (LLM) for more nuanced responses. Let’s look at some examples of this.

The following query finds companies that sponsor events by matching nodes linked through the SPONSORS relationship. It returns the company name along with the event it supports.

# Find Companies Sponsoring Events

res = graph.query("""

MATCH (c:Company)-[:SPONSORS]->(e:Event)

RETURN c.name, e.name

""")

for row in res.result_set:

    print(f"Sponsor: {row[0]}, Event: {row[1]}")

The result:

Sponsor: TechCorp, Event: Tech Summit 2025

Query Using Relationships and Properties

You can traverse relationships between nodes to extract detailed insights. For example, the following query retrieves details of employees working in a specific company, along with their skills, certifications, experience, and any projects they lead.

res = graph.query("""

MATCH (p:Person)-[:WORKS_AT]->(c:Company {name: 'TechCorp'})

OPTIONAL MATCH (p)-[:LEADS]->(pr:Project)

RETURN p.name, p.skills, p.experience, p.certifications, pr.name

""")

for row in res.result_set:

    print(f"Employee: {row[0]}, Skills: {row[1]}, Experience: {row[2]} years, Certifications: {row[3]}, Project: {row[4]}")

The result:

Employee: Alice, Skills: ['Python', 'Machine Learning', 'Data Science'], Experience: 8 years, Certifications: ['AWS Certified', 'PMP'], Project: AI Research
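The queries above embed values such as 'Alice' and 'Python' directly in the Cypher text. When values come from user input, you may prefer query parameters. The sketch below assumes the `graph` object from the connection step and relies on `graph.query` accepting a dictionary of parameters as its second argument. The helper name `people_with_skill` and its `min_experience` argument are hypothetical and exist only for this example.

```python
def people_with_skill(graph, skill, min_experience=0):
    """Return (name, experience) rows for people who list `skill`."""
    query = """
    MATCH (p:Person)
    WHERE $skill IN p.skills AND p.experience >= $min_experience
    RETURN p.name, p.experience
    ORDER BY p.experience DESC
    """
    # Values travel separately from the query text instead of being
    # formatted into the Cypher string.
    params = {"skill": skill, "min_experience": min_experience}
    result = graph.query(query, params)
    return [(row[0], row[1]) for row in result.result_set]
```

With the sample data from this article, calling `people_with_skill(graph, 'Python', 5)` should return Alice's row, since she is the only person who lists Python as a skill.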

Creating a Cluster for Scalability

As your data volume expands and query loads surge, scaling efficiently becomes crucial. That’s where the FalkorDB cluster steps in. By distributing data across multiple nodes, it accelerates performance with parallel processing and slashes query latency.

FalkorDB’s built-in auto-sharding dynamically balances workloads, preventing bottlenecks as demand grows. Plus, its replication and fault-tolerant architecture keep your system highly available—even under heavy traffic—so your applications scale seamlessly without a hitch.

Now, let’s dive into the steps to set up a FalkorDB cluster.

Step 1. Create a Network for the Cluster

A dedicated Docker network enables communication between cluster nodes:

docker network create falkor-network

Step 2. Start the First FalkorDB Node (Cluster Mode)

Launch the first node and configure it for clustering:

docker run -d --name falkordb-node1 --net falkor-network -p 6379:6379 falkordb/falkordb --cluster-enabled yes --cluster-config-file nodes.conf --cluster-node-timeout 5000

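The --cluster-enabled yes option runs FalkorDB in cluster mode. The --cluster-config-file option names the file in which the node keeps its cluster metadata, and --cluster-node-timeout sets, in milliseconds, how long a node may be unreachable before it is treated as failed.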

Step 3. Start the Second FalkorDB Node (Cluster Mode)

Let’s now launch an additional node and connect it to the same network:

docker run -d --name falkordb-node2 --net falkor-network -p 6380:6379 falkordb/falkordb --cluster-enabled yes --cluster-config-file nodes.conf --cluster-node-timeout 5000

Step 4. Get Node IP Addresses

Retrieve the internal IP addresses of each node inside the Docker network:

docker inspect falkordb-node1 | findstr "IPAddress"

docker inspect falkordb-node2 | findstr "IPAddress"

This outputs the IP addresses assigned to each node, which will be used in the next step. The findstr command is specific to Windows; on Linux or macOS, use grep in its place.

Step 5. Connect Second Node to First Node

Now join the second node to the cluster. First, access the CLI of the first node:

docker exec -it falkordb-node1 redis-cli

Inside the Redis CLI, use the CLUSTER MEET command to connect the second node (replace <Node2-IP> with its actual IP address):

CLUSTER MEET <Node2-IP> 6379

Step 6. Verify the Cluster

Confirm the cluster is set up correctly by running the following inside the first node’s Redis CLI:

CLUSTER INFO

If the setup is successful and all 16384 hash slots have been assigned to nodes, you’ll see:

cluster_state:ok

Step 7. List All Nodes in the Cluster

To check all connected nodes, run:

CLUSTER NODES

This will display details of all the nodes in the cluster, confirming that they are successfully connected and operational.
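If you want to check cluster health from a script rather than by eye, you can parse the text that CLUSTER INFO returns. The sketch below works on the raw reply as a string, so it does not depend on any client library. The helper names and the sample reply are assumptions for illustration, and the sample shows only three of the fields a real reply contains.

```python
def parse_cluster_info(raw):
    """Turn the `key:value` lines of a CLUSTER INFO reply into a dict of strings."""
    info = {}
    for line in raw.splitlines():
        line = line.strip()
        if not line or ":" not in line:
            continue
        key, value = line.split(":", 1)
        info[key] = value
    return info


def cluster_is_healthy(raw):
    """Healthy here means the state is ok and every hash slot is assigned."""
    info = parse_cluster_info(raw)
    return (
        info.get("cluster_state") == "ok"
        and info.get("cluster_slots_assigned") == "16384"
    )


# Illustrative reply text, not captured from a real cluster.
sample = "cluster_state:ok\r\ncluster_slots_assigned:16384\r\ncluster_known_nodes:2\r\n"
healthy = cluster_is_healthy(sample)
```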

Scaling the Cluster Dynamically

As your system demands grow, scaling your cluster dynamically is essential. With FalkorDB, you can add new nodes on the fly—without any downtime. Its intelligent rebalancing algorithm ensures that data is evenly distributed across all nodes, keeping performance smooth and efficient.

Elastic Capacity

In a NoSQL cluster, nodes are easy to add and remove according to demand, providing what is known as ‘elastic’ capacity. This flexibility allows organizations to align their data footprint with business needs while maintaining availability during seasonal demand spikes, node failures, and network outages.

When a new node joins, FalkorDB’s shard placement mechanism automatically migrates data partitions, preventing any load imbalances. You also have the flexibility to fine-tune key performance parameters, such as:

- THREAD_COUNT – Allocate CPU resources efficiently to match your workload.

- CACHE_SIZE – Optimize memory usage for frequently accessed queries.
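To see how keys end up on particular shards, it helps to look at the standard Redis Cluster mapping, which this sketch assumes FalkorDB clusters follow because the setup above uses redis-cli and the CLUSTER commands. A key is hashed with CRC16 and reduced modulo 16384 to pick a hash slot. If the key contains a non-empty `{tag}`, only the tag is hashed. The function name `key_slot` is our own.

```python
import binascii

SLOT_COUNT = 16384  # number of hash slots in a Redis Cluster


def key_slot(key):
    """Compute the cluster hash slot for a key, honoring {hash tags}."""
    data = key.encode()
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        # Only a non-empty tag between the first "{" and the next "}" counts.
        if end != -1 and end != start + 1:
            data = data[start + 1:end]
    # crc_hqx with an initial value of 0 is the CRC16 variant used for slots.
    return binascii.crc_hqx(data, 0) % SLOT_COUNT


slot_a = key_slot("{social}:friends")
slot_b = key_slot("{social}:followers")
```

Because both keys share the `{social}` tag, `slot_a` and `slot_b` are equal, so the two keys are placed on the same node. When the cluster rebalances, whole slots move between nodes rather than individual keys.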

Ensuring High Availability and Fault Tolerance

FalkorDB is built to keep your system running, no matter what. Automatic failover and redundancy ensure uninterrupted operations, even if a node goes down. When that happens, a replica is instantly promoted, keeping everything seamless.

Replication keeps data consistent across nodes, while the persistence engine periodically saves snapshots to prevent data loss. If hardware fails, FalkorDB automatically shifts workloads to healthy nodes—no manual intervention needed.

FalkorDB uses asynchronous replication for even better performance, batching updates before synchronization. This reduces latency, speeds up write operations, and ensures efficient data distribution.

Data Replication in NoSQL Databases

NoSQL databases use various models to handle data replication effectively, each with unique strengths and potential drawbacks. Here’s a breakdown of these replication models:

Multi-Master Model: Some databases utilize a multi-master approach, allowing multiple nodes to accept write operations simultaneously. This model enhances write availability but introduces complexity in conflict resolution.

Master-Slave Architecture: This model designates one node as the master to handle all writes, while slave nodes replicate the data. Although simpler in design, it presents a single point of failure during master node outages, necessitating a new master election that could cause brief downtime.

Masterless Architecture: In contrast, a masterless model, as seen in some NoSQL databases, provides equal responsibility across nodes. This approach eliminates single points of failure, as no individual node controls the cluster. Typically, data is replicated across three or more nodes, ensuring resilience and high availability.
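One common way masterless systems keep reads consistent is quorum replication, which is an assumption added here rather than something described above. With N replicas, a write must reach W of them and a read must consult R of them, with R + W > N so that every read overlaps the latest write. The `QuorumStore` class below is a hypothetical in-memory sketch of that rule.

```python
class QuorumStore:
    def __init__(self, replica_count=3, write_quorum=2, read_quorum=2):
        if read_quorum + write_quorum <= replica_count:
            raise ValueError("need R + W > N for reads to see the latest write")
        self.replicas = [dict() for _ in range(replica_count)]
        self.w = write_quorum
        self.r = read_quorum
        self.clock = 0  # simple logical version counter

    def write(self, key, value, reachable):
        """Write to the reachable replicas; succeed only if at least W accept."""
        if len(reachable) < self.w:
            return False
        self.clock += 1
        for i in reachable:
            self.replicas[i][key] = (self.clock, value)
        return True

    def read(self, key, reachable):
        """Read from R reachable replicas and return the newest version seen."""
        if len(reachable) < self.r:
            raise RuntimeError("not enough replicas for a quorum read")
        versions = [self.replicas[i].get(key, (0, None)) for i in reachable[: self.r]]
        return max(versions, key=lambda v: v[0])[1]


store = QuorumStore()
store.write("k", "v1", reachable=[0, 1, 2])
store.write("k", "v2", reachable=[0, 1])      # replica 2 misses this write
latest = store.read("k", reachable=[1, 2])    # overlaps a replica holding v2
```

Even though replica 2 is stale, the quorum read still returns the newest value, because any two replicas include at least one that accepted the last write.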

Using these replication strategies, NoSQL databases can maintain data consistency and availability even under high-volume, low-latency conditions.