Highlights

- Optimize GraphRAG indexing by leveraging composite indexes and caching to reduce latency and costs.

- Use cardinality reduction and property access patterns to streamline complex graph queries in large datasets.

- Integrate LLMs effectively with graph databases for scalable, cost-efficient retrieval-augmented generation workflows.

In today’s digital economy, data is the new oil, driving innovation and transformation across industries. But here’s the catch: as the article by MIT Sloan School of Management points out, a staggering 80% of the world’s data is unstructured.

Think about all the PDFs, text files, videos, audio clips, server logs, social media chatter, customer reviews, and support queries floating around. That’s a goldmine of insights, waiting to be tapped.

Now, here’s the exciting part: Large Language Models (LLMs) and Vision Language Models (VLMs) are evolving rapidly, and they can help you unlock the hidden potential of this messy, unstructured data. But it’s important to remember that LLMs come with token limitations. They can’t process massive volumes of data in one go. That’s where retrieval systems like GraphRAG come in.

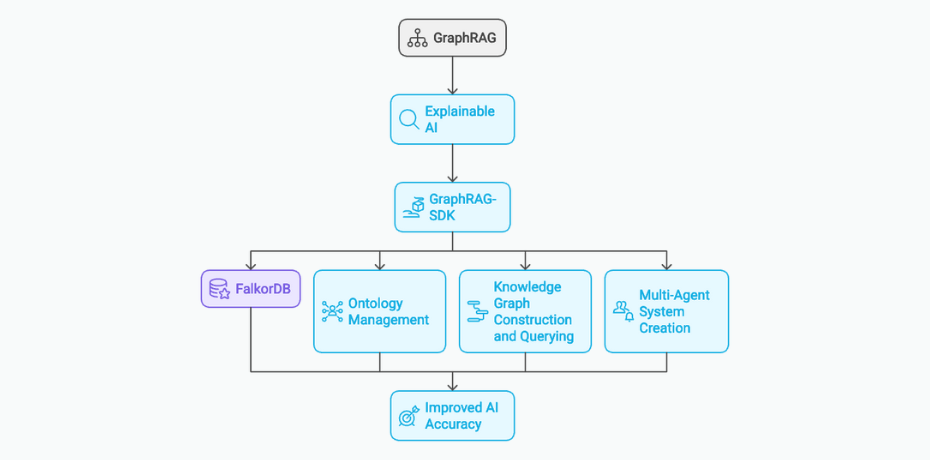

This synergy between LLMs and graph databases has led to the rise of the GraphRAG (Graph-powered Retrieval-Augmented Generation) architecture—a much-needed solution for anyone dealing with unstructured data.

A typical GraphRAG system has three essential steps

- Ingestion: First, you take all that unstructured data and transform it into a knowledge graph, inserting it into a graph database. During this step, an index is created, making future queries lightning-fast.

- Retrieval: Next, when you need insights, you query the knowledge graph to fetch the most relevant nodes and relationships. This step provides a rich context that helps the system understand your data better.

- Generation: Finally, you feed that retrieved context into your LLM or VLM. The result? Accurate, insightful, and explainable outputs that add real value.

When the knowledge graph is small, GraphRAG systems work smoothly, right out of the box. But as your data grows, things can get tricky. Without the right optimizations, ingestion, indexing, and querying can become inefficient and expensive—something no one wants.

In this article, I will discuss strategies to enhance your graph database management. We’ll explore techniques that streamline all the key steps and provide you with pointers on how to make your GraphRAG system more efficient, as well as reducing GraphRAG indexing costs.

When the knowledge graph is small, GraphRAG systems work smoothly, right out of the box. But as your data grows, things can get tricky. Without the right optimizations, ingestion, indexing, and querying can become inefficient and expensive—something no one wants.

In this article, we’ll explore powerful strategies to supercharge your graph database management. We’ll explore techniques that streamline all the key steps and provide you with pointers on how to make your GraphRAG system more efficient.

Understanding Graph Indexing Costs

While graph indexes are essential for performance, they come with significant costs that impact both efficiency and resource utilization. As datasets grow, the creation and maintenance of indexes become increasingly expensive, demanding substantial memory and processing power.

These costs stem from multiple factors:

Structural Complexity of Graph Databases

Graph databases represent data as interconnected nodes and edges, which fundamentally differs from traditional relational database structures. This complexity makes indexing significantly more challenging compared to simple tabular data. Unlike row-based or column-based indexes in relational databases, graph database indexes should be designed to efficiently navigate network relationships, traversing multiple connections while maintaining acceptable query performance.

Memory and Computational Overhead

Index creation and maintenance in graph databases consume substantial computational resources. As the graph grows, the number of potential paths and connections increases exponentially, leading to massive memory requirements. This exponential growth means that naive and inefficient indexing strategies can quickly become memory-intensive and computationally expensive.

Data Volume and Scaling Challenges

Modern graph datasets are experiencing unprecedented growth, driven by complex interconnected systems like social networks, recommendation engines, and advanced analytics. As data volumes expand, the cost of creating and maintaining comprehensive indexes becomes increasingly prohibitive. Traditional indexing approaches break down when dealing with massive, densely connected graphs, requiring more sophisticated indexing algorithms.

Frequent Update Operations

Graph databases often operate in dynamic environments where data relationships constantly evolve. Each update – adding nodes, modifying edges, or changing attributes – can potentially invalidate existing indexes. This constant state of flux necessitates highly adaptive indexing mechanisms that can efficiently update and rebalance without incurring significant performance penalties.

Given these challenges, let’s explore some practical strategies you can adopt to reduce graph indexing costs.

Optimizing Query Performance

Let’s start by focusing on improving query performance, a crucial factor that directly impacts both latency and throughput. For any GraphRAG system to be practical and user-friendly, low-latency operations are essential. Even with large-scale datasets, your primary goal should be to minimize latency while maximizing query efficiency.

For instance, in FalkorDB, low-latency querying is achieved by the efficient use of GraphBLAS algorithms, indexing and caching. This approach allows for rapid data retrieval and processing, significantly reducing the time and resources required for operations.

To optimize query performance in your system, you can leverage several proven techniques:

Single Composite Index

A composite index combines multiple properties into a single index, making it especially useful when queries frequently filter on all the indexed properties. By monitoring graph query logs to identify common and slow queries, you can create or modify composite indexes to address performance bottlenecks. This approach reduces the need for multiple indexes and streamlines queries involving conditions on several attributes.

Cardinality Reduction for Complex Path Queries

Cardinality refers to the number of unique elements in a dataset, such as nodes or relationships in a graph. Cardinality reduction involves decreasing the number of unique elements or distinct values in query results by eliminating duplicates or consolidating similar data.

When working with long paths in graph traversals, duplicate results can significantly impact performance. In GraphRAG systems, reducing cardinality is essential for efficiently processing semantic relationships. You can achieve this by:

- Employing eager duplicate elimination during intermediate stages of path traversal.

- Using distinct aggregations for multi-hop relationships.

- Batching similar semantic queries to minimize redundant processing.

For example, if you’re searching for documents across multiple relationship types, eliminating duplicates early in the query path can drastically reduce the number of large language model (LLM) operations required later in the pipeline.

Optimized Property Access Patterns

Optimizing property access is critical for GraphRAG systems, especially when dealing with text embeddings and semantic properties. By deferring property access until the final stages of query execution, aggregating nodes before accessing their properties, and caching frequently accessed semantic properties, you can significantly boost system performance.

You can also use materialized views to accelerate query rates. Research has demonstrated that this approach can achieve speedups of up to nearly 100 times for individual queries and up to 28.71 times for entire workloads, making it a powerful technique for optimizing GraphRAG systems.

Integration with Large Language Models (LLMs)

Large language models (LLMs) and graph databases can work synergistically when managed effectively. LLMs can translate complex human queries into efficient, structured graph database queries, such as Cypher queries, enhancing the performance of RAG systems.

LLMs can also assist with database maintenance by analyzing graph schemas and query patterns, then recommending optimizations for nodes and relationships. They can identify frequently traversed paths, suggest index creation, and propose relationship restructuring based on query and access patterns.

However, using LLMs in the context of graph creation or querying comes with associated costs that you should be aware of and know how to optimize.

Cost of LLM-Powered Graph Creation

To understand the costs associated with integrating LLMs, let’s first break down their primary applications in GraphRAG.

Building the Graph

This is where the majority of the costs arise. LLMs are employed to analyze text, particularly unstructured data, to determine nodes and relationships within the graph.

For an estimation of token usage per call, consider this few-shot prompt used by Microsoft’s version of GraphRAG.

Goal-

Given a text document that is potentially relevant to this activity, first identify all entities needed from the text in order to capture the information and ideas in the text.

Next, report all relationships among the identified entities.

-Steps-

1. Identify all entities. For each identified entity, extract the following information:

- entity_name: Name of the entity, capitalized

- entity_type: Suggest several labels or categories for the entity. The categories should not be specific, but should be as general as possible.

- entity_description: Comprehensive description of the entity's attributes and activities

Format each entity as ("entity"{tuple_delimiter}<entity_name>{tuple_delimiter}<entity_type>{tuple_delimiter}<entity_description>

2. From the entities identified in step 1, identify all pairs of (source_entity, target_entity) that are *clearly related* to each other.

For each pair of related entities, extract the following information:

- source_entity: name of the source entity, as identified in step 1

- target_entity: name of the target entity, as identified in step 1

- relationship_description: explanation as to why you think the source entity and the target entity are related to each other

- relationship_strength: a numeric score indicating strength of the relationship between the source entity and target entity

Format each relationship as ("relationship"{tuple_delimiter}<source_entity>{tuple_delimiter}<target_entity>{tuple_delimiter}<relationship_description>{tuple_delimiter}<relationship_strength>)

3. Return output in English as a single list of all the entities and relationships identified in steps 1 and 2. Use **{record_delimiter}** as the list delimiter.

4. When finished, output {completion_delimiter}

-Examples-

######################

Example 1:

text:

It was very dark, and the wind howled horribly around her, but Dorothy

found she was riding quite easily. After the first few whirls around,

and one other time when the house tipped badly, she felt as if she were

being rocked gently, like a baby in a cradle.

Toto did not like it. He ran about the room, now here, now there,

barking loudly; but Dorothy sat quite still on the floor and waited to

see what would happen.

Once Toto got too near the open trap door, and fell in; and at first

the little girl thought she had lost him. But soon she saw one of his

ears sticking up through the hole, for the strong pressure of the air

was keeping him up so that he could not fall. She crept to the hole,

caught Toto by the ear, and dragged him into the room again, afterward

closing

------------------------

output:

("entity"{tuple_delimiter}DOROTHY{tuple_delimiter}CHARACTER, PERSON{tuple_delimiter}Dorothy is a character who experiences a dark and windy environment, feels as if being rocked gently, and actively participates in rescuing Toto)

{record_delimiter}

("entity"{tuple_delimiter}TOTO{tuple_delimiter}CHARACTER, ANIMAL{tuple_delimiter}Toto is Dorothy's dog who dislikes the situation, runs around barking, and accidentally falls into a trap door but is saved by Dorothy)

{record_delimiter}

("entity"{tuple_delimiter}TRAP DOOR{tuple_delimiter}OBJECT{tuple_delimiter}The trap door is an opening through which Toto falls, but the air pressure prevents him from falling completely)

{record_delimiter}

("relationship"{tuple_delimiter}DOROTHY{tuple_delimiter}TOTO{tuple_delimiter}Dorothy rescues Toto from the trap door, showing a caring relationship{tuple_delimiter}9)

{record_delimiter}

("relationship"{tuple_delimiter}TOTO{tuple_delimiter}TRAP DOOR{tuple_delimiter}Toto falls into the trap door, which is a pivotal moment for his character in this scene{tuple_delimiter}7)

{record_delimiter}

("relationship"{tuple_delimiter}DOROTHY{tuple_delimiter}TRAP DOOR{tuple_delimiter}Dorothy interacts with the trap door to rescue Toto, showing her proactive nature{tuple_delimiter}8)

{completion_delimiter}

#############################

-Real Data-

######################

text: {input_text}

######################

output:

To get an idea of the associated costs, let’s consider an example:

Document dataset size: Technical documentation spanning 45,000 words

Model selected: GPT-3.5 Turbo

Cost per word: $0.0000500

Cost: $0.0000500 * 45,000 = $2.25 USD to process your technical documents

This calculation can also be performed using the token count: 57,620 tokens * 0.0000393 = $2.26 USD.

For processing large datasets, such as 45,000 words, response times depend on the model’s latency per token and the system architecture. Empirical results suggest:

Latency per Token: ~50ms for GPT-3.5 Turbo (average).

Token Count: 57,620 tokens.

Estimated Total Latency:

57,620 tokens×50 ms/token = 2,881 seconds (48 minutes)

Querying

LLMs are also utilized during the query phase of a GraphRAG application. They translate natural language queries into Cypher queries, enabling the system to retrieve results from the graph database, which are then presented as context to the LLM for response generation.

Optimizing Cost of LLM Integration

The cost of using LLMs is heavily influenced by the platform you choose. You can opt to host open models like Llama 3.3 or use platforms like OpenAI. Costs vary depending on the selected model, with larger and more sophisticated models like GPT-4 incurring significantly higher per-token costs compared to smaller models like GPT-4o-mini.

To reduce latency, you can implement parallel processing and caching mechanisms, which can significantly decrease perceived response times.

Additionally, consider the following approaches for optimizing LLM usage:

Query Caching and Memoization

Caching enables systems to store and reuse results from previously processed queries, eliminating redundant computations. By caching API responses, you can reduce server load and bandwidth usage by up to 70%, while also improving response times for repeated queries. This approach enhances scalability during high-traffic periods by limiting redundant computations and network requests.

Prompt Engineering Optimization

Prompt engineering focuses on crafting precise and concise language templates that capture domain-specific nuances, reducing token consumption without sacrificing query comprehension. Well-designed prompts minimize contextual overhead by employing targeted and efficient language structures, streamlining LLM interactions and reducing the computational resources required for query generation.

Intelligent Query Decomposition

Intelligent query decomposition involves breaking down complex queries into smaller, more manageable semantic units. This enables more targeted and efficient interactions with LLMs, improving both query execution and overall system performance.

Efficient Knowledge Representation

Designing effective index structures for knowledge graphs is crucial for enhancing the performance of graph databases, especially as their size and complexity increase.

Several strategies can be employed to create efficient indexes, ensuring that queries remain fast and low-latency. These strategies are typically integrated within the graph database itself. For example, the high-performance graph database FalkorDB uses sparse matrices to represent graph adjacency matrices.

Adjacency Lists

Adjacency lists represent graph structures by maintaining a list of connections for each node. They are particularly effective for sparse graphs, as they store only existing connections. Using adjacency lists reduces memory overhead and enables rapid relationship traversal.

B-Trees: Optimizing Hierarchical Data Access

B-Trees are self-balancing tree structures designed to efficiently manage large-scale, hierarchical data. They offer logarithmic-time complexity for insertions, deletions, and lookups, ensuring consistent performance across massive graph databases. With B-Trees, each node can store multiple keys in sorted order, making them ideal for range queries and multi-dimensional indexing of graph properties.

Hash Tables: Accelerating Direct Data Retrieval

Hash tables use hash functions to convert complex graph keys into simple array indices, enabling near-constant-time access to graph elements. By mapping node or edge properties directly to memory locations, hash tables eliminate the need for sequential searching. While careful design is required to handle hash collisions, well-constructed hash tables are highly effective for rapid retrieval of unique identifiers in real-time knowledge base queries.

Knowledge Graph Embedding (KGE): Semantic Intelligence in Indexing

Knowledge Graph Embedding (KGE) transforms complex graph structures into low-dimensional vector spaces using machine learning techniques. By capturing semantic relationships and node characteristics, KGE enables advanced operations such as semantic similarity searches and link predictions. This approach becomes particularly powerful when combining knowledge graphs with vector similarity search operations.

Granularity of Knowledge Representation

The granularity of your knowledge representation must strike a balance between semantic expressiveness and computational efficiency. When modeling entities as nodes and semantic relationships as edges, it’s crucial to avoid extremes. Representations that are too fine-grained can increase traversal complexity and storage overhead, while overly coarse modeling may reduce semantic precision and query flexibility.

Example: Storing Properties in a Graph

- Node-Attached Properties:

Properties can be directly attached to nodes. This simpler approach facilitates quick information lookups and is suitable for straightforward use cases. - Property as Separate Nodes: Alternatively, important properties can be modeled as separate nodes, connected with specific relationship types. Although more complex, this approach enables the creation of detailed models and better logical connections between different data elements.

For example:

Instead of storing “birth_date: 1990” as a property on a Person node, you could create a separate Birth node with a date property and link it to the Person node using a relationship like “HAS_BIRTH_EVENT”. This approach offers greater flexibility in how you connect and utilize the information.

The choice between these approaches depends on your specific use case. For instance, when storing vector embeddings, both node-based and property-based storage of embeddings can be viable.

Modern graph databases, such as FalkorDB, often optimize for both approaches using specialized vector indices and graph query operators, ensuring flexibility and efficiency.

Additional Approaches

Several more advanced strategies exist for reducing GraphRAG indexing costs.

Advanced Indexing Strategies

Wind-Bell Indexing

Wind-Bell Indexing optimizes graph search by precomputing paths between frequently queried node pairs. This approach allows for the direct retrieval of shortest paths or distances without requiring full traversal. It leverages landmarks by selecting critical nodes and precomputing distances to other nodes, while hop labels store the shortest paths or distances to these landmarks for fast retrieval.

To enhance efficiency, Wind-Bell Indexing incorporates query optimization by combining precomputed data with runtime filtering, refining and expediting query results. This hybrid approach makes it particularly effective for large-scale graphs with frequent queries on specific node pairs.

Tree-Decomposition-Based Indexing

Tree-decomposition-based indexing represents a graph as a tree structure to capture its hierarchical properties. This method is especially effective for graphs with low treewidth, such as planar graphs or specific types of sparse networks, where the structure can be efficiently managed.

The process involves decomposing the graph into a tree-like format, where each “tree node” represents a subgraph. These tree nodes are then indexed individually, enabling efficient traversal and subgraph query resolution without requiring traversal of the entire graph. This approach significantly reduces computational overhead for certain query types.

Trie-Based Indexing for Subgraph Matching

Frequent subgraphs or motifs can be efficiently represented in a trie structure to enable rapid pattern matching. This method involves extracting frequent subgraphs and hierarchically indexing them in a trie, which organizes data to facilitate the fast identification and retrieval of matching patterns.

Joint Autotuning of Configuration Parameters

Graph databases offer numerous performance-related parameters, but manually tuning them is both time-consuming and costly. To address this, JointConf has been proposed as a novel approach for automatically auto-tuning configuration parameters in modularized graph databases. It utilizes the BO_dropout algorithm for high-dimensional Bayesian optimization, enhancing performance across multiple modules. JointConf outperforms traditional search-based methods, highlighting the necessity of joint autotuning in modularized graph databases for achieving optimal performance.

Dynamic Structural Graph Clustering Algorithms

Dynamic structural graph clustering algorithms are designed to manage large, evolving graph databases (GDBs) where graph structures frequently change due to updates such as edge additions or deletions. These algorithms group similar subgraphs or motifs and adapt to structural changes efficiently.

Approaches like DynELM and DynStrClu are particularly effective, using advanced techniques such as dimension dropout for Bayesian optimization to process updates more efficiently and improve scalability. Additionally, algorithms like StruClus employ representative subgraphs to describe clusters, enhancing interpretability. These methods leverage maximal frequent subgraph mining and stochastic sampling to identify relevant structures in large datasets effectively, improving both performance and usability.

Dynamic clustering techniques are essential for applications like fraud detection, social network analysis, and chemical structure analysis, where graphs are vast, highly interconnected, and continually evolving.

Final Thoughts

Reducing high GraphRAG indexing costs requires a multifaceted approach. By optimizing query performance, integrating with LLMs, and employing efficient knowledge representation, you can improve performance, lower costs, and enhance query accuracy. These strategies provide practical benefits, including multi-graph tenancy and ease of use, making them invaluable for managing complex, interconnected data.

References

What are GraphRAG Indexing Costs?

How can I optimize GraphRAG indexing?

Why integrate LLMs with GraphRAG systems?

Build fast and accurate GenAI apps with GraphRAG SDK at scale

FalkorDB offers an accurate, multi-tenant RAG solution based on our low-latency, scalable graph database technology. It’s ideal for highly technical teams that handle complex, interconnected data in real-time, resulting in fewer hallucinations and more accurate responses from LLMs.