Highlights

Retrieval-augmented generation (RAG) has emerged as a powerful technique to address key limitations of large language models (LLMs). By augmenting LLM prompts with relevant data retrieved from various sources, RAG helps ground LLM responses in factual, verifiable information and reduces hallucinations.

- GraphRAG extends the capabilities of RAG by using knowledge graphs to represent information, allowing it to handle complex queries that require multi-hop reasoning rather than just relying on semantic similarity.

- Unlike vector-based retrieval-augmented generation (VectorRAG), which struggles with complex queries, GraphRAG leverages the structured relationships in knowledge graphs to enable multi-step reasoning and return more relevant answers.

- GraphRAG systems offer significant benefits, including enhanced knowledge representation, reduced hallucinations, and greater scalability and efficiency, often requiring fewer tokens for LLM response generation.

- The architecture of a GraphRAG system involves constructing a knowledge graph from raw data, processing user queries to match the graph, and using an LLM to generate a coherent response grounded in that retrieved information.

However, the accuracy of RAG systems heavily relies on their ability to fetch relevant, verifiable information. Naive RAG systems, built using vector store-powered semantic search, often fail to do so, especially with complex queries that require reasoning. Additionally, these systems are opaque and difficult to troubleshoot when errors occur.

In this article, I explore GraphRAG, a superior approach for building RAG systems. GraphRAG is explainable, leverages graph relationships to discover and verify information, and has emerged as a frontier technology in modern AI applications.

Explainability

Knowledge graphs offer a clear advantage in making AI decisions more understandable. By visualizing data as a graph, users can navigate and query information directly. This clarity makes it possible to trace errors and to understand provenance and confidence levels, which are critical for explaining AI decisions. Unlike LLMs on their own, whose outputs are often inscrutable, knowledge graphs expose the relationships behind an answer, making even complex decisions easier to follow.

What is GraphRAG?

GraphRAG is a RAG system that combines the strengths of knowledge graphs and large language models (LLMs). In GraphRAG, the knowledge graph serves as a structured repository of factual information, while the LLM acts as the reasoning engine, interpreting user queries, retrieving relevant knowledge from the graph, and generating coherent responses.

Emerging research suggests that GraphRAG can significantly outperform vector store-powered RAG systems. The same line of research indicates that GraphRAG systems not only provide better answers but can also be cheaper and more scalable.

To understand why, let’s look at the underlying mechanics of how knowledge is represented in vector stores versus knowledge graphs.

Understanding RAG: The Foundation of GraphRAG

RAG, a term coined in a 2020 paper, has now become a common architectural pattern for building LLM-powered applications. RAG systems use a retriever module to find relevant information from a knowledge source, such as a database or a knowledge base, and then use a generator module (powered by LLMs) to produce a response based on the retrieved information.

How RAG Works: Retrieval and Generation

During the retrieval process in RAG, you find the most relevant information from a knowledge source based on the user’s query. This is typically achieved using techniques like keyword matching or semantic similarity. You then prompt the generator module with this information to generate a response using LLMs.

In semantic similarity search, for instance, data is represented as numerical vectors generated by embedding models, which try to capture the data's meaning. The premise is that vectors for semantically similar content lie close to each other in vector space. This allows you to use the vector representation of a user query to fetch similar information using an approximate nearest neighbor (ANN) search.
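To make the vector-space idea concrete, here is a minimal sketch of semantic retrieval using exact (brute-force) cosine similarity. The three-dimensional vectors and document IDs are hypothetical, hand-written values rather than output from a real embedding model; production systems use learned embeddings and an ANN index that approximates this exhaustive ranking.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_neighbors(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Exact k-nearest-neighbor search; an ANN index approximates this ranking."""
    ranked = sorted(index, key=lambda doc_id: cosine_similarity(query, index[doc_id]), reverse=True)
    return ranked[:k]

# Hypothetical toy "embeddings" (illustrative values, not from a real model)
index = {
    "revenant_plot": [0.9, 0.1, 0.0],
    "inception_plot": [0.2, 0.9, 0.1],
    "cooking_tips": [0.0, 0.1, 0.95],
}
print(nearest_neighbors([0.8, 0.3, 0.0], index, k=2))  # ['revenant_plot', 'inception_plot']
```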

Keyword matching is more straightforward: it finds information through exact keyword matches, typically ranked with algorithms like BM25.
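BM25 scores a document by how often the query terms appear in it, weighted by how rare each term is across the collection and normalized by document length. Below is a minimal sketch of the classic Okapi BM25 formula, assuming naive whitespace tokenization and the commonly used defaults k1 = 1.5 and b = 0.75; real search engines use proper analyzers and tuned parameters.

```python
import math
from collections import Counter

def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score each document against the query with Okapi BM25."""
    if not docs:
        return []
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs
    # Document frequency: how many documents contain each term
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # exact match only: no stemming or synonyms
            # IDF variant with +1 inside the log so it never goes negative
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            length_norm = freq + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * freq * (k1 + 1) / length_norm
        scores.append(score)
    return scores

docs = [
    "leonardo dicaprio stars in the revenant",
    "inception is a sci-fi film directed by christopher nolan",
    "the revenant was directed by alejandro inarritu",
]
scores = bm25_scores("revenant director", docs)
print([round(s, 3) for s in scores])
```

The second document scores zero because "director" does not exactly match "directed", which illustrates why keyword matching alone misses paraphrases.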

Limitations of RAG and How GraphRAG Addresses Them

Naive RAG systems built with keyword or similarity search-based retrieval fail on complex queries that require reasoning. Here’s why:

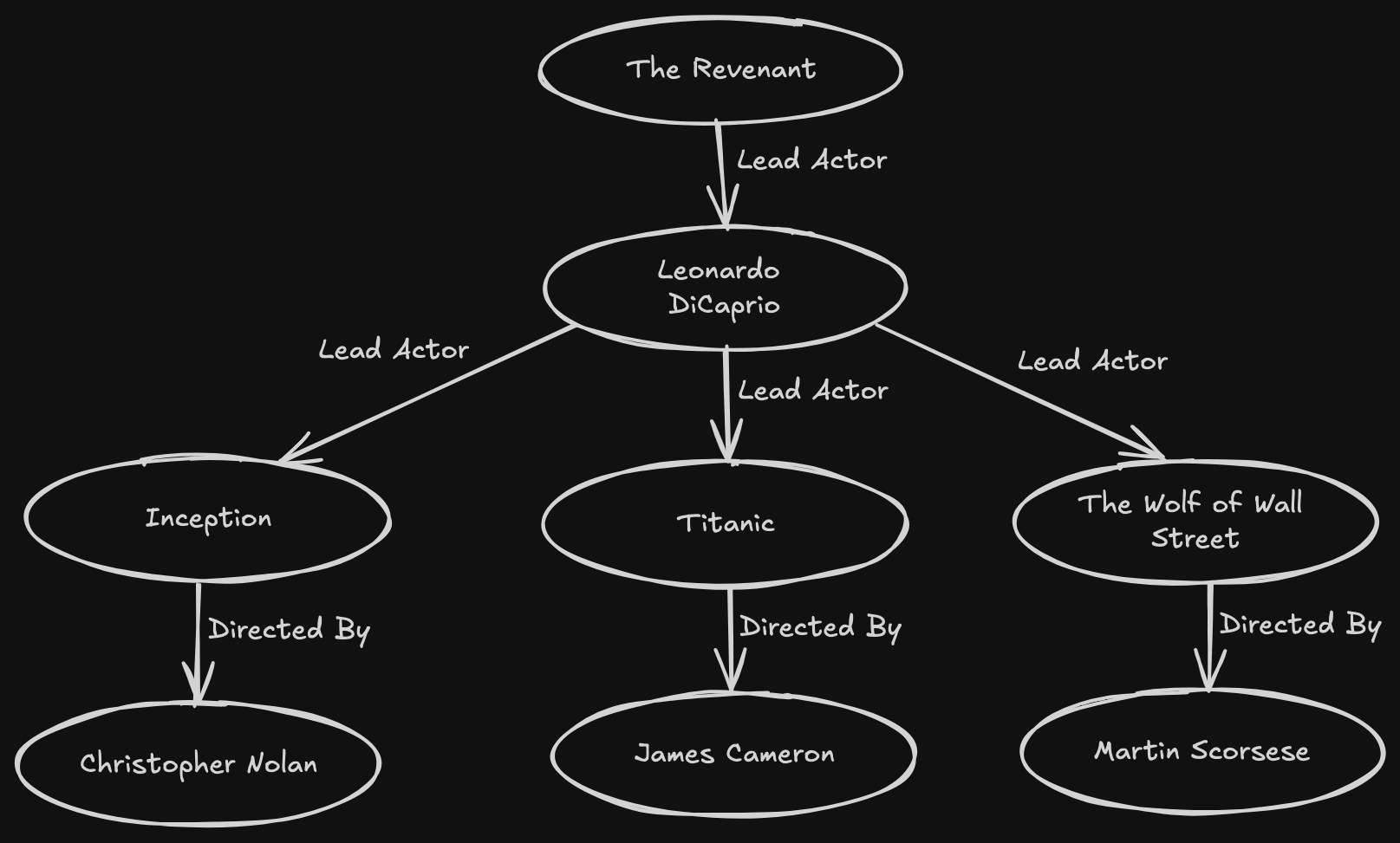

Suppose a user asks: Who directed the sci-fi movie where the lead actor was also in The Revenant?

A standard RAG system might:

- Retrieve documents about The Revenant.

- Find information about the cast and crew of The Revenant.

- But fail to identify that the lead actor, Leonardo DiCaprio, starred in other movies, to find which of those is a sci-fi film, and to determine its director.

Queries such as the above require the RAG system to reason over structured information instead of relying purely on keyword or semantic search.

The process should ideally be:

- Identify the lead actor.

- Traverse the actor’s movies and keep the sci-fi ones.

- Retrieve directors.
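Those hops can be sketched over a tiny in-memory graph. The relation names (`LEAD_ACTOR`, `ACTED_IN`, `GENRE`, `DIRECTED_BY`) and the adjacency structure are illustrative assumptions, not a real dataset or any graph database's API; a graph database would express the same traversal as a single query.

```python
from collections import defaultdict

# Hypothetical toy knowledge graph: (subject, relation) -> list of objects
graph: dict[tuple[str, str], list[str]] = defaultdict(list)
triples = [
    ("The Revenant", "LEAD_ACTOR", "Leonardo DiCaprio"),
    ("Leonardo DiCaprio", "ACTED_IN", "The Revenant"),
    ("Leonardo DiCaprio", "ACTED_IN", "Inception"),
    ("Leonardo DiCaprio", "ACTED_IN", "Titanic"),
    ("Inception", "GENRE", "sci-fi"),
    ("Titanic", "GENRE", "romance"),
    ("Inception", "DIRECTED_BY", "Christopher Nolan"),
    ("Titanic", "DIRECTED_BY", "James Cameron"),
]
for subj, rel, obj in triples:
    graph[(subj, rel)].append(obj)

def directors_of_scifi_with_lead_of(movie: str) -> list[str]:
    directors = []
    # Hop 1: identify the lead actor of the given movie
    for actor in graph.get((movie, "LEAD_ACTOR"), []):
        # Hop 2: traverse the actor's other movies, keeping only sci-fi ones
        for other in graph.get((actor, "ACTED_IN"), []):
            if other != movie and "sci-fi" in graph.get((other, "GENRE"), []):
                # Hop 3: retrieve that movie's director
                directors.extend(graph.get((other, "DIRECTED_BY"), []))
    return directors

print(directors_of_scifi_with_lead_of("The Revenant"))  # ['Christopher Nolan']
```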

To effectively create systems that can answer such queries, you need a retriever that can reason over information.

Enter GraphRAG.

GraphRAG Benefits: What Makes It Unique?

Knowledge graphs capture knowledge as interconnected nodes (entities) and edges (relationships), representing information in a structured form. This structure is often compared to the way the human brain organizes information.

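Continuing the above example, a GraphRAG system would follow a path through the knowledge graph (The Revenant → lead actor: Leonardo DiCaprio → also acted in: Inception, a sci-fi film → directed by: Christopher Nolan) to arrive at the right answer.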

The GraphRAG response would then be: “Leonardo DiCaprio, the lead actor in ‘The Revenant,’ also starred in ‘Inception,’ directed by Christopher Nolan.”

Complex queries are natural to human interaction. They can arise in myriad domains, from customer chatbots to search engines, or when building AI agents. GraphRAG, therefore, has gained prominence as we build more user-facing AI systems.

GraphRAG systems offer numerous benefits over traditional RAG:

- Enhanced Knowledge Representation: GraphRAG can capture complex relationships between entities and concepts.

- Explainable and Verifiable: GraphRAG allows you to visualize and understand how the system arrived at its response. This helps with debugging when you get incorrect results.

- Complex Reasoning: The integration of LLMs enables GraphRAG to better understand the user’s query and provide more relevant and coherent responses.

- Flexibility in Knowledge Sources: GraphRAG can be adapted to work with various knowledge sources, including structured databases, semi-structured data, and unstructured text.

- Scalability and Efficiency: GraphRAG systems, built with fast knowledge graph stores like FalkorDB, can handle large amounts of data and provide quick responses. Researchers found that GraphRAG-based systems required between 26% and 97% fewer tokens for LLM response generation by providing more relevant data.

Common RAG Use Cases and Challenges

Does GraphRAG handle the use cases that typical RAG systems must support? RAG systems have found applications across various domains, including:

- Question Answering: Addressing user queries by retrieving relevant information and generating comprehensive answers.

- Summarization: Condensing lengthy documents into concise summaries.

- Text Generation: Creating different text formats (e.g., product descriptions, social media posts) based on given information.

- Recommendation Systems: Providing personalized recommendations based on user preferences and item attributes.

However, these systems often encounter challenges such as:

- Inaccurate Retrieval: Vector-based similarity search might retrieve irrelevant or partially relevant documents.

- Limited Context Understanding: Difficulty in capturing the full context of a query or document.

- Factuality and Hallucination: Potential generation of incorrect or misleading information.

- Efficiency: Resource-intensive processes due to massive amounts of vector data, especially for large-scale applications.

In fact, researchers have identified numerous failure points that traditional RAG systems suffer from.

Vector-based Retrieval-Augmented Generation (RAG) and fine-tuning are techniques used to enhance the accuracy of AI-generated responses. Both methods may improve the likelihood of generating correct answers by guiding the AI with more context and adjustments. However, they differ in how they achieve this and in their limitations.

VectorRAG

- Enhancement of Accuracy: RAG utilizes existing information from extensive datasets to retrieve relevant facts, thus increasing the probability of delivering a correct answer.

- Limitations: Although it boosts accuracy, it doesn’t guarantee correctness. Answers may still lack depth or context and sometimes conflict with facts the user knows to be true.

Fine-tuning

- Personalization: This technique adjusts the model based on specific datasets, making it more attuned to particular types of queries.

- Drawbacks: Similar to RAG, fine-tuning doesn’t assure the precision of the answer. There’s often a complexity in understanding why the model chooses certain responses, leaving users without explicit reasoning.

Both techniques face a common ceiling: they incrementally improve answer accuracy but fall short of guaranteeing a complete, contextually rich, and authoritative response. Each offers partial solutions but lacks the ability to provide definitive certainty and comprehensive context in every scenario.

How GraphRAG Addresses the Limitations of Other RAGs

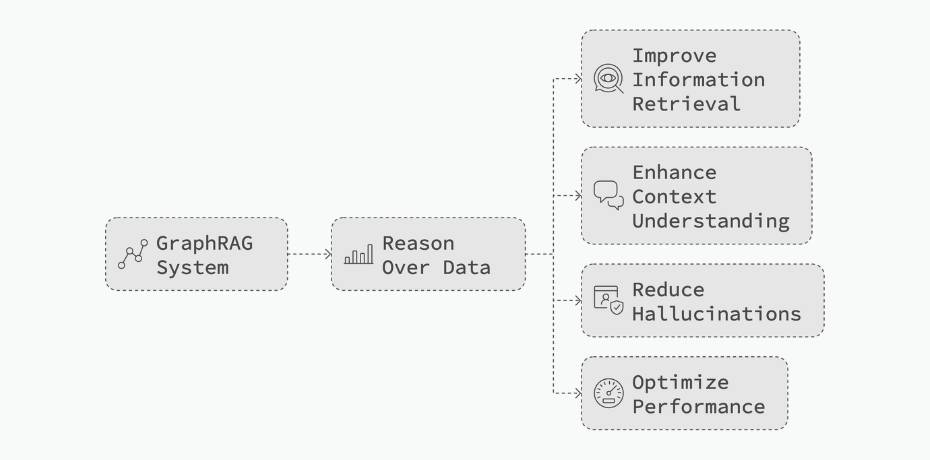

GraphRAG addresses many of the limitations listed above, as it can reason over data. A GraphRAG system can:

- Improve Information Retrieval: By understanding the underlying connections between entities, GraphRAG can more accurately identify relevant information.

- Enhance Context Understanding: Knowledge graphs provide a richer context for query understanding and response generation.

- Reduce Hallucinations: By grounding responses in factual knowledge, GraphRAG can mitigate the risk of generating false information.

- Optimize Performance: Vector stores can be expensive, especially for large-scale datasets. Knowledge graphs can often be far more efficient.

Why GraphRAG is the Next Natural Step for RAG in GenAI Applications

The integration of large language models (LLMs) and vector-based retrieval-augmented generation (RAG) has made strides in generating quality results through word-based computation. However, to elevate those results from good to consistently great, it’s crucial to move beyond string processing and incorporate a more comprehensive world model alongside the existing word model.

This approach mirrors what search engines learned when they advanced beyond simple text analysis. By mapping the web of concepts and entities beneath the words, they improved the precision of search. The AI landscape is now following a similar path, and GraphRAG is becoming pivotal to it.

One reason GraphRAG stands as the natural evolution is the way technological progress tends to unfold in S-curves. As one technology reaches its peak, another emerges, offering novel pathways for progress. As generative AI (GenAI) matures, applications where the quality of answers is paramount, or where stakeholders demand understandable and transparent processes, see GraphRAG as essential. This is also true for scenarios requiring meticulous control over data access, ensuring privacy and security. In these contexts, it’s likely that your next GenAI application will leverage a knowledge graph to achieve these ambitious goals.

GraphRAG Architecture: A Deeper Look

Now that we know how GraphRAG improves upon naive RAG, let’s examine its underlying architecture.

Key Components of GraphRAG Architecture


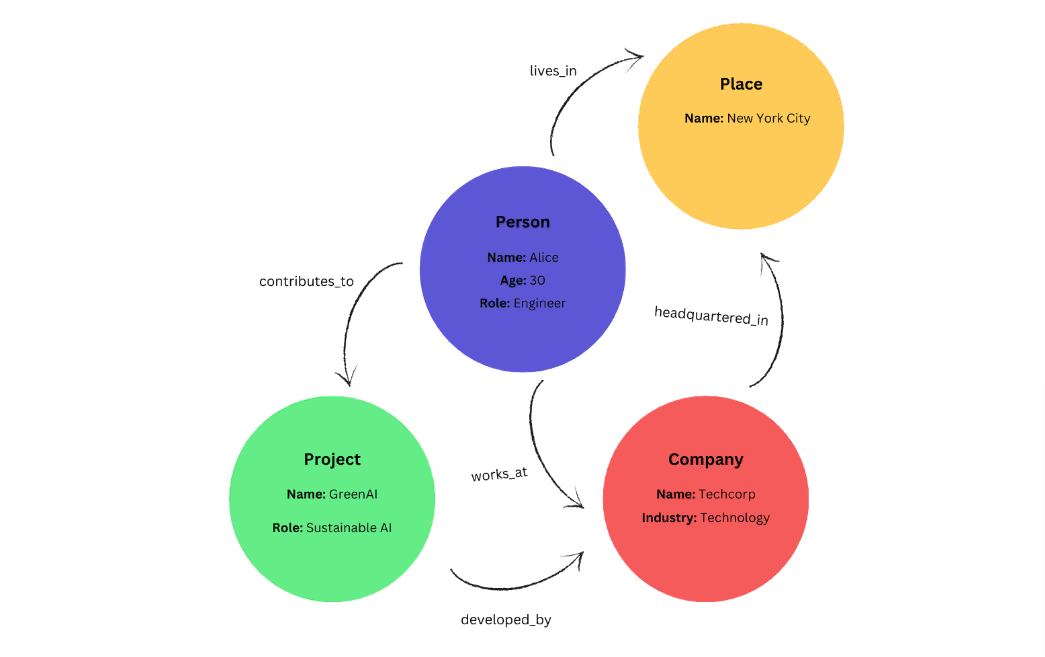

- Knowledge Graph: A structured representation of information, capturing entities and their relationships.

- Graph Database: A database that stores the knowledge graph and executes queries against it, for example matching a query graph against the knowledge graph.

- LLM: A large language model capable of generating text based on provided information.

To create a GraphRAG system, you typically build a pipeline that performs the following steps:

Knowledge Graph Construction

- Document Processing: Raw text documents are ingested and processed to extract relevant information.

- Entity and Relationship Extraction: Entities (people, places, objects, concepts) and their relationships are identified within the text.

- Graph Creation: Extracted entities and relationships are structured into a knowledge graph, representing the semantic connections between data points.
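The graph-creation step can be sketched as follows, assuming the extraction step (typically an LLM prompted with a schema) has already produced `(name, type)` entity pairs and `(subject, relation, object)` triples. The `InMemoryGraph` class is a hypothetical stand-in, not the class of GraphRAG-SDK or any graph database; it also merges mentions that differ only in case or spacing, a deliberately naive form of entity resolution.

```python
from dataclasses import dataclass, field

@dataclass
class InMemoryGraph:
    """Hypothetical minimal property graph: typed nodes plus labeled edges."""
    nodes: dict[str, str] = field(default_factory=dict)            # name -> entity type
    edges: set[tuple[str, str, str]] = field(default_factory=set)  # (source, relation, target)

    @staticmethod
    def _key(name: str) -> str:
        # Naive entity resolution: collapse whitespace and ignore case
        return " ".join(name.split()).lower()

    def add_entity(self, name: str, entity_type: str) -> None:
        self.nodes.setdefault(self._key(name), entity_type)

    def add_relation(self, source: str, relation: str, target: str) -> None:
        src, dst = self._key(source), self._key(target)
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError(f"Unknown entity in ({source}, {relation}, {target})")
        self.edges.add((src, relation, dst))

# Illustrative output of an LLM extraction step over one chunk
entities = [("Leonardo DiCaprio", "Person"), ("leonardo  dicaprio", "Person"), ("Inception", "Movie")]
relations = [("Leonardo DiCaprio", "ACTED_IN", "Inception")]

kg = InMemoryGraph()
for name, entity_type in entities:
    kg.add_entity(name, entity_type)
for source, relation, target in relations:
    kg.add_relation(source, relation, target)

print(len(kg.nodes), len(kg.edges))  # 2 1
```

Rejecting relations between unknown entities keeps the graph consistent with what extraction actually found; a pipeline could instead create missing nodes on the fly.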

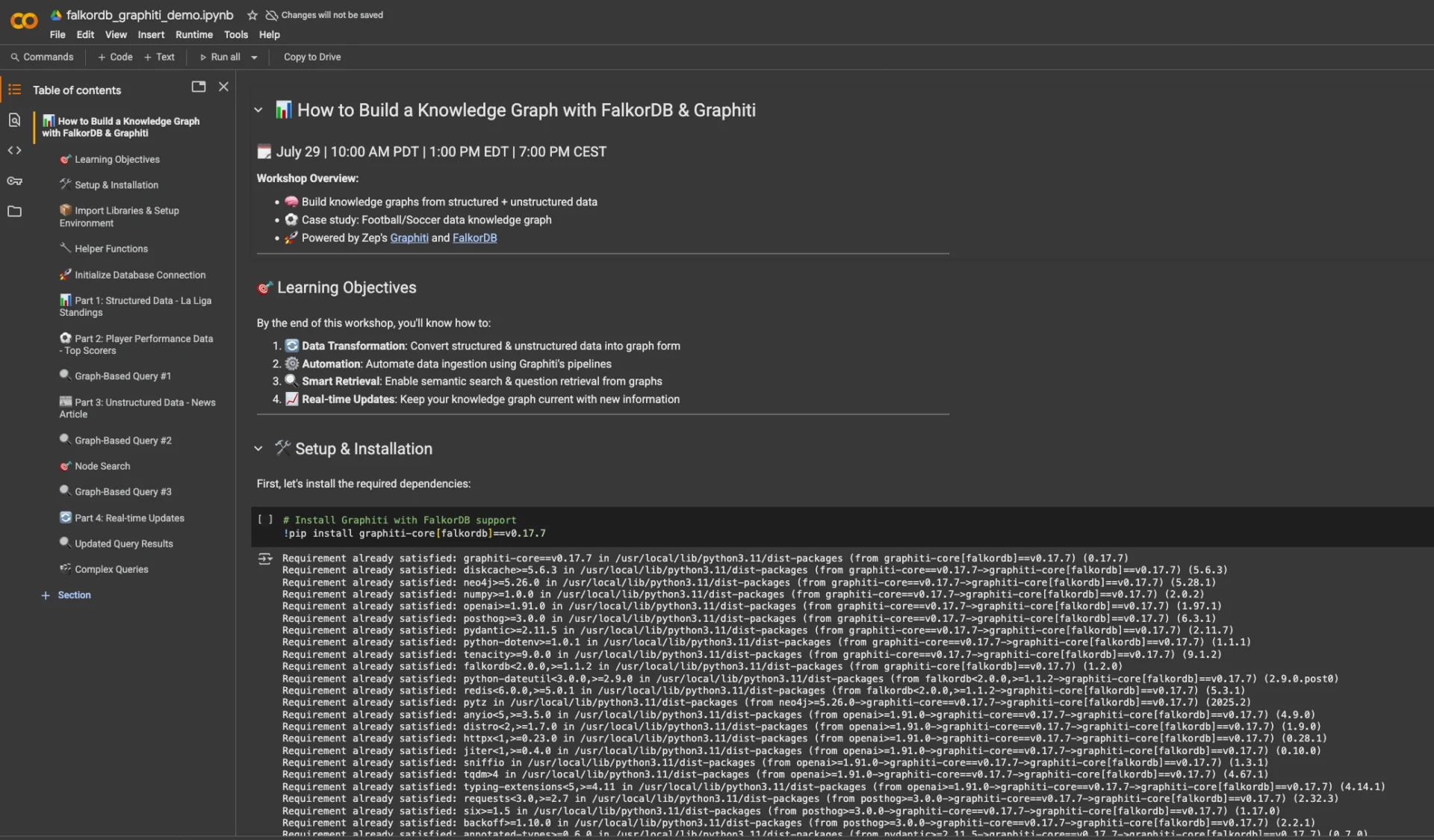

Example: Building and Populating a Knowledge Graph from Research Papers

Let’s bring this all together with a practical example: turning a collection of research papers into a fully formed knowledge graph. This process is made accessible through a variety of open-source frameworks and libraries, including popular options like Graphiti, LangChain, LlamaIndex, and ready-made pipelines that streamline many of the tedious steps.

Step-by-Step Pipeline

A typical approach to constructing a knowledge graph from documents looks like this:

Set Up the Pipeline

- Choose your orchestration pipeline or toolkit.

- Configure essential components such as:
  - A large language model (like GPT-4o)
  - An embedding model to understand semantic meaning
  - A document splitting tool to chunk the content for efficient processing
  - A graph schema that defines which entities and relationships to extract

Processing the Documents

- Feed in a set of research papers—these could be PDF files, text exports, or other document formats.

- Each document is divided into manageable chunks, ensuring thorough coverage without overwhelming the extraction models.
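A minimal sketch of the chunking step, assuming fixed-size windows measured in words with some overlap, so that an entity mentioned near a boundary appears intact in at least one chunk. The function name and sizes are illustrative; many pipelines split on tokens, sentences, or document sections instead.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
print(chunk_words(sample, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```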

Entity and Relation Extraction

- The language model and embedder work in tandem to scan each chunk for relevant entities (such as anatomical parts, biological processes, treatments), and key relationships connecting them (e.g., “activates,” “affects,” “treats”).

- Rather than relying on static keywords, these tools use deep semantic understanding to recognize relationships across scientific language and domain-specific jargon.

Graph Construction

- Identified entities become nodes, while the detected relationships form the edges between them.

- The pipeline populates the graph database, structuring the information for easy traversal and query.

Visualization and Querying

- Once everything is loaded, the resulting knowledge graph can be explored—either with built-in visualization tools or by crafting graph queries for deeper inspection.


Links and Edges in Knowledge Graphs

In a knowledge graph, links and edges are crucial components that define relationships between data points, known as nodes.

What Are Links?

Links are conceptual connections between nodes. Each node may have zero or more links that associate it with other nodes. These links serve as gateways, allowing nodes to establish relationships based on shared characteristics or data attributes.

How Do Edges Work?

An edge is an actual connection that manifests when two nodes share a common link. Think of links as the potential for connection, while edges are the realization of that connection.
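One way to express this distinction in code: give each node a set of link keys (here, shared attribute values such as a genre or director), then materialize an edge for every pair of nodes that share a key. The node names and link keys are hypothetical and only illustrate the idea described above.

```python
from itertools import combinations

# Each node lists its links: potential connection points (hypothetical attribute values)
node_links: dict[str, set[str]] = {
    "Inception": {"genre:sci-fi", "director:Christopher Nolan"},
    "Interstellar": {"genre:sci-fi", "director:Christopher Nolan"},
    "Titanic": {"genre:romance"},
}

def materialize_edges(links: dict[str, set[str]]) -> set[tuple[str, str, str]]:
    """Create an edge (a, b, link) for every link that two nodes have in common."""
    edges = set()
    for a, b in combinations(sorted(links), 2):
        for shared in links[a] & links[b]:
            edges.add((a, b, shared))
    return edges

for edge in sorted(materialize_edges(node_links)):
    print(edge)
```

"Titanic" has a link but no edge, because no other node shares it: the link is only a potential connection until a second node realizes it.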

Query Processing

- Query Understanding: The user’s query is analyzed to extract key entities and relationships.

- Query Graph Generation: A query graph is constructed based on the extracted information, representing the user’s intent.

Graph Matching and Retrieval

- Graph Similarity: The query graph is compared to the knowledge graph to find relevant nodes and edges.

- Document Retrieval: Based on the graph-matching results, relevant documents are retrieved for subsequent processing.
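Graph matching can be sketched as basic pattern matching: the query graph is a list of triple patterns in which terms starting with `?` are variables, and the matcher searches for bindings that turn every pattern into an existing edge. This recursive matcher is a simplified, hypothetical stand-in for what a graph database's query engine (for example, when evaluating a Cypher `MATCH` clause) does far more efficiently.

```python
from typing import Optional

Triple = tuple[str, str, str]

def match(patterns: list[Triple], facts: set[Triple], binding: Optional[dict[str, str]] = None) -> list[dict[str, str]]:
    """Return every variable binding under which all triple patterns are edges in `facts`."""
    binding = binding if binding is not None else {}
    if not patterns:
        return [binding]
    first, rest = patterns[0], patterns[1:]
    results = []
    for fact in facts:
        candidate = dict(binding)
        consistent = True
        for term, value in zip(first, fact):
            if term.startswith("?"):
                # A variable must bind to the same value everywhere it appears
                if candidate.setdefault(term, value) != value:
                    consistent = False
                    break
            elif term != value:
                consistent = False
                break
        if consistent:
            results.extend(match(rest, facts, candidate))
    return results

# Hypothetical knowledge graph edges and the query graph for the earlier question
facts = {
    ("The Revenant", "LEAD_ACTOR", "Leonardo DiCaprio"),
    ("Leonardo DiCaprio", "ACTED_IN", "The Revenant"),
    ("Leonardo DiCaprio", "ACTED_IN", "Inception"),
    ("Inception", "GENRE", "sci-fi"),
    ("Inception", "DIRECTED_BY", "Christopher Nolan"),
}
query_graph = [
    ("The Revenant", "LEAD_ACTOR", "?actor"),
    ("?actor", "ACTED_IN", "?movie"),
    ("?movie", "GENRE", "sci-fi"),
    ("?movie", "DIRECTED_BY", "?director"),
]
result = match(query_graph, facts)
print([b["?director"] for b in result])  # ['Christopher Nolan']
```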

Response Generation

- Contextual Understanding: The retrieved documents are processed to extract relevant information.

- Response Generation: An LLM generates a response based on the combined knowledge from the retrieved documents and the knowledge graph.
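Response generation usually means serializing the retrieved subgraph, plus any retrieved text, into the prompt. A minimal sketch, assuming triples and passages as the retrieval output; the function name and prompt wording are illustrative, and the actual LLM call is omitted because it depends on your provider's client library.

```python
def build_grounded_prompt(question: str, triples: list[tuple[str, str, str]], passages: list[str]) -> str:
    """Combine graph facts and retrieved passages into a single grounded prompt."""
    fact_lines = "\n".join(f"- ({s}) -[{r}]-> ({o})" for s, r, o in triples)
    passage_lines = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the facts and passages below. "
        "If they are insufficient, say so.\n\n"
        f"Graph facts:\n{fact_lines}\n\n"
        f"Passages:\n{passage_lines}\n\n"
        f"Question: {question}\nAnswer:"
    )

prompt = build_grounded_prompt(
    "Who directed the sci-fi movie where the lead actor was also in The Revenant?",
    [("Leonardo DiCaprio", "ACTED_IN", "Inception"), ("Inception", "DIRECTED_BY", "Christopher Nolan")],
    ["Inception (2010) is a science fiction film written and directed by Christopher Nolan."],
)
print(prompt)
```

Numbering the passages lets the model cite them, which supports the explainability goal discussed earlier.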

When using GraphRAG to answer questions, there are two main query approaches to consider:

Global Search

This mode is ideal for responding to broad, overarching questions about the dataset as a whole. It works by drawing on summaries of communities (clusters of closely related entities detected in the graph), providing insights that span the entire body of information.

Local Search

For more targeted queries about specific topics or entities, Local Search is the key. It dives deeper by examining closely related elements and their associated concepts, ensuring a thorough exploration of the topic.

Both of these strategies leverage the data structure effectively to enhance the context provided to large language models when generating answers.
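Local search, in its simplest form, can be sketched as expanding a bounded neighborhood around the entities mentioned in the query and handing that subgraph to the LLM as context. The breadth-first expansion over an undirected adjacency map below is one simple way to do it, assumed for illustration; production implementations typically also rank and filter what goes into the context window.

```python
from collections import deque

def k_hop_neighborhood(adjacency: dict[str, list[str]], seeds: list[str], max_hops: int = 2) -> set[str]:
    """Return all nodes reachable from the seed entities within `max_hops` edges (BFS)."""
    visited = set(seeds)
    frontier = deque((seed, 0) for seed in seeds)
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in adjacency.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return visited

# Hypothetical undirected adjacency map derived from a knowledge graph
adjacency = {
    "The Revenant": ["Leonardo DiCaprio"],
    "Leonardo DiCaprio": ["The Revenant", "Inception"],
    "Inception": ["Leonardo DiCaprio", "Christopher Nolan"],
    "Christopher Nolan": ["Inception"],
}
print(sorted(k_hop_neighborhood(adjacency, ["The Revenant"], max_hops=2)))
# ['Inception', 'Leonardo DiCaprio', 'The Revenant']
```

The hop limit is the main design knob: more hops answer longer reasoning chains but pull in more, possibly irrelevant, context.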

Implementing GraphRAG: Strategies and Best Practices

The cornerstone of a successful GraphRAG system is a meticulously constructed knowledge graph. The deeper and more accurate the graph’s representation of the underlying data, the better the system’s ability to reason and generate high-quality responses. Here are some of the key factors you should keep in mind:

Knowledge Graph Construction

- Data Quality: Ensure data is clean, accurate, and consistent to build a reliable knowledge graph.

- Graph Database Selection: Choose a suitable graph database (e.g., FalkorDB) that is efficient and scalable.

- Schema Design: Define the schema for the knowledge graph. Consider entity types, relationship types, and properties.

- Graph Population: Efficiently populate the graph with LLM-extracted entities and relationships from the underlying data.

Query Processing and Graph Matching

- Query Understanding: Use an appropriate LLM to extract key entities and relationships from user queries.

- Retrieval and Reasoning: Ensure that the graph database can find relevant nodes and edges in the knowledge graph through Cypher queries (Cypher is the graph query language supported by FalkorDB and other graph databases).

LLM Integration

- LLM Selection: Choose an LLM that can understand and generate Cypher queries. OpenAI’s GPT-4o, Google’s Gemini, or larger Llama 3.1 or Mistral models work well.

- Prompt Engineering: Craft effective prompts to guide the LLM in generating desired outputs from knowledge graph responses.

- Fine-Tuning: Consider fine-tuning the LLM on specific tasks or domains for improved performance.
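For Cypher generation, a common prompt-engineering pattern is to give the LLM the graph schema and ask for a single query. The prompt builder and the read-only guard below are hypothetical sketches: the schema format and instructions are assumptions, and the guard is a keyword check rather than a Cypher parser, so treat it as a first line of defense only.

```python
import re

def build_text_to_cypher_prompt(
    question: str,
    node_labels: dict[str, list[str]],
    rel_types: list[tuple[str, str, str]],
) -> str:
    """Describe the graph schema to the LLM and ask for one read-only Cypher query."""
    nodes = "\n".join(f"(:{label} {{{', '.join(props)}}})" for label, props in node_labels.items())
    rels = "\n".join(f"(:{src})-[:{rel}]->(:{dst})" for src, rel, dst in rel_types)
    return (
        "You translate questions into Cypher queries for a graph database.\n"
        f"Node labels and properties:\n{nodes}\n"
        f"Relationship types:\n{rels}\n"
        "Use only the labels, properties, and relationships listed above. "
        "Return a single read-only Cypher query and nothing else.\n"
        f"Question: {question}"
    )

# Naive guard for LLM output: reject queries containing write clauses
WRITE_CLAUSES = re.compile(r"\b(CREATE|MERGE|DELETE|DETACH|SET|REMOVE|DROP)\b", re.IGNORECASE)

def is_read_only(cypher: str) -> bool:
    return WRITE_CLAUSES.search(cypher) is None

prompt = build_text_to_cypher_prompt(
    "Who directed Inception?",
    {"Person": ["name"], "Movie": ["title", "genre"]},
    [("Person", "ACTED_IN", "Movie"), ("Person", "DIRECTED", "Movie")],
)
print(prompt)
```

A well-behaved model might answer with `MATCH (p:Person)-[:DIRECTED]->(m:Movie {title: 'Inception'}) RETURN p.name`, which `is_read_only` accepts; a query containing `DETACH DELETE` would be rejected before reaching the database.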

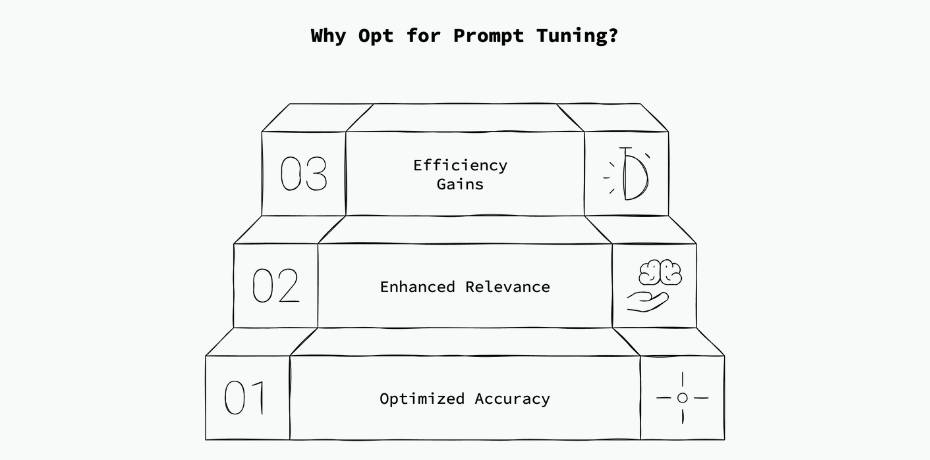

Why Opt for Prompt Tuning?

- Optimized Accuracy: Tailoring prompts helps ensure the tool better understands your specific data context. This customization minimizes errors and improves the accuracy of the results.

- Enhanced Relevance: Fine-tuning your prompts allows GraphRAG to provide results that are more relevant to your unique datasets, thereby increasing the overall utility of the tool.

- Efficiency Gains: Adjusting prompts can streamline processes, making interactions more efficient and reducing the need for repetitive corrections or re-queries.

Evaluation and Iteration

- Metrics: Define relevant metrics to measure the performance of the GraphRAG system (e.g., accuracy, precision, recall, F1-score). Use systems like Ragas to evaluate your GraphRAG performance.

- Visualize and Improve: Monitor system performance, visualize your graph, and iterate on the knowledge graph, query processing, and LLM components.
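For the retrieval stage, precision, recall, and F1 can be computed directly against a hand-labeled set of relevant items per question. A minimal sketch, assuming retrieved and relevant items are identified by comparable IDs such as node names or chunk IDs; evaluation frameworks like Ragas add further metrics, such as answer faithfulness.

```python
def precision_recall_f1(retrieved: set[str], relevant: set[str]) -> tuple[float, float, float]:
    """Set-based precision, recall, and F1 for a single query."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

p, r, f = precision_recall_f1(
    retrieved={"Leonardo DiCaprio", "Inception", "Titanic"},
    relevant={"Leonardo DiCaprio", "Inception", "Christopher Nolan"},
)
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")  # precision=0.67 recall=0.67 f1=0.67
```

Averaging these per-question scores over a test set gives a baseline to track as you iterate on the graph, queries, and prompts.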

GraphRAG Tools and Frameworks

A number of open-source tools are emerging that simplify the process of creating a knowledge graph and GraphRAG application. GraphRAG-SDK, for instance, leverages FalkorDB and OpenAI to enable advanced construction and querying of knowledge graphs. It allows:

- Schema Management: You can define and manage knowledge graph schemas, either manually or automatically from unstructured data.

- Knowledge Graph: Construct and query knowledge graphs.

- OpenAI Integration: Integrates seamlessly with OpenAI for advanced querying.

Using GraphRAG-SDK, the process of creating a knowledge graph is as simple as this:

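```python
# Imports as in the early GraphRAG-SDK README; newer releases changed this API,
# so check the repository for the version you install
from graphrag_sdk import KnowledgeGraph, Source
from graphrag_sdk.schema import Schema

# Auto-generate a graph schema from unstructured data
sources = [Source("./data/the_matrix.txt")]
s = Schema.auto_detect(sources)

# Create a knowledge graph based on the schema
# (assumes a running FalkorDB instance and a configured OpenAI API key)
g = KnowledgeGraph("IMDB", schema=s)
g.process_sources(sources)
```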

…and then, you can query the graph:

```python
# Query your data (the source file describes The Matrix, so ask about that film)
question = "Name a few actors who've acted in 'The Matrix'"
answer, messages = g.ask(question)
print(f"Answer: {answer}")
```

As simple as that. To install and use it in your application, visit the GraphRAG-SDK repository.

Many popular frameworks, such as LangChain and LlamaIndex, have begun incorporating knowledge graph integrations to help you build GraphRAG applications. Modern LLMs are also constantly evolving to construct knowledge graphs and handle Cypher queries better.

Exploring GraphRAG Varieties

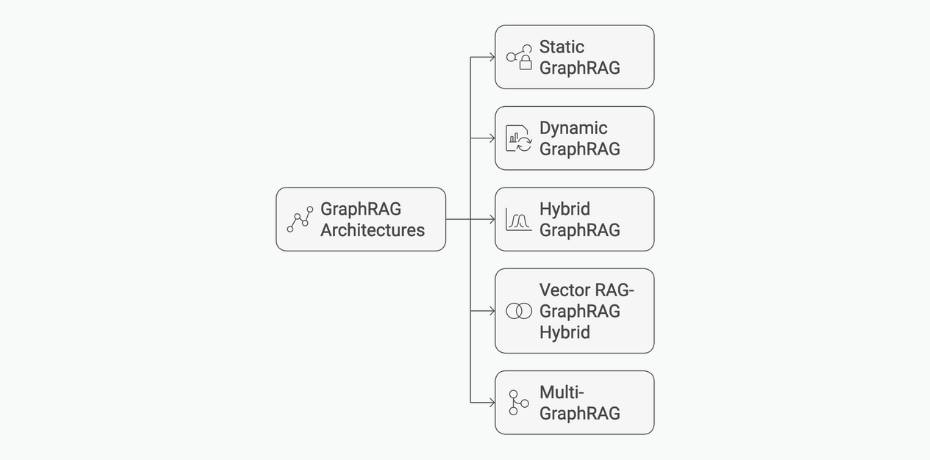

Several variations of GraphRAG architectures have emerged in the last few months, each with its own strengths and weaknesses. Let’s look at some of them.

- Static GraphRAG: Employs a pre-built, fixed knowledge graph that remains unchanged during query processing. This approach is suitable for domains with relatively stable information.

- Dynamic GraphRAG: Constructs or updates the knowledge graph on-the-fly based on incoming data or query context. This is advantageous for domains with rapidly evolving information.

- Hybrid GraphRAG: Combines elements of both static and dynamic knowledge graphs. It leverages a core static graph supplemented with dynamic updates. This approach balances the stability of static graphs with the relevance of dynamic data.

- Vector RAG-GraphRAG Hybrid: Combines traditional RAG with GraphRAG for improved performance. This approach can leverage the strengths of both techniques, such as using vector search for initial retrieval and then refining results with graph-based reasoning.

- Multi-GraphRAG: Utilizes multiple knowledge graphs to address different aspects of a query. This can be beneficial for complex domains with multiple knowledge sources.

The optimal GraphRAG architecture depends on your specific use case. For example, a dynamic domain with a substantial knowledge base might benefit from a Hybrid GraphRAG approach. Conversely, when leveraging semantic similarity is crucial, you should consider the Vector RAG-GraphRAG hybrid.
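The Vector RAG-GraphRAG hybrid can be sketched in two stages: a vector search picks seed entities whose embeddings are closest to the query embedding, and a graph expansion then adds connected facts that vector search alone would miss. The embeddings, triples, and function names are hypothetical and hand-written for illustration.

```python
import math

Triple = tuple[str, str, str]

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def hybrid_retrieve(
    query_vec: list[float],
    entity_vecs: dict[str, list[float]],
    triples: list[Triple],
    top_k: int = 1,
    hops: int = 2,
) -> tuple[list[str], list[Triple]]:
    """Stage 1: vector search picks seed entities. Stage 2: expand `hops` steps through the graph."""
    seeds = sorted(entity_vecs, key=lambda e: cosine(query_vec, entity_vecs[e]), reverse=True)[:top_k]
    reached = set(seeds)
    facts: list[Triple] = []
    seen: set[Triple] = set()
    for _ in range(hops):
        # Triples touching any entity reached before this hop began
        new = [t for t in triples if t not in seen and (t[0] in reached or t[2] in reached)]
        for t in new:
            seen.add(t)
            facts.append(t)
            reached.update((t[0], t[2]))
    return seeds, facts

# Hypothetical entity embeddings and graph edges
entity_vecs = {"The Revenant": [0.9, 0.1], "Inception": [0.1, 0.9]}
triples = [
    ("Leonardo DiCaprio", "ACTED_IN", "The Revenant"),
    ("Leonardo DiCaprio", "ACTED_IN", "Inception"),
    ("Inception", "DIRECTED_BY", "Christopher Nolan"),
]
seeds, facts = hybrid_retrieve([1.0, 0.0], entity_vecs, triples, top_k=1, hops=3)
print(seeds)  # ['The Revenant']
for fact in facts:
    print(fact)
```

Vector search alone surfaces only "The Revenant"; three hops of graph expansion reach Christopher Nolan, the fact the multi-hop question actually needs.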

When to Use GraphRAG


GraphRAG is particularly well-suited for scenarios where:

- Complex Queries: Users require answers that involve multiple hops of reasoning or intricate relationships between entities.

- Factual Accuracy: High precision and recall are essential, as GraphRAG can reduce hallucinations by grounding responses in factual knowledge.

- Rich Contextual Understanding: Deep understanding of the underlying data and its connections is required for effective response generation.

- Large-Scale Knowledge Bases: Handling vast amounts of information and complex relationships efficiently is crucial.

- Dynamic Information: The underlying data is constantly evolving, necessitating a flexible knowledge representation.

Specific GraphRAG Use Cases

- Financial Analysis and Reporting: Understanding complex financial relationships and generating insights.

- Legal Document Review and Contract Analysis: Extracting key information and identifying potential risks or opportunities.

- Life Sciences and Healthcare: Analyzing complex biological and medical data to support research and drug discovery.

- Customer Service: Providing accurate and informative answers to complex customer inquiries.

Essentially, GraphRAG is a powerful tool for domains that require a deep understanding of the underlying data and the ability to reason over complex relationships.

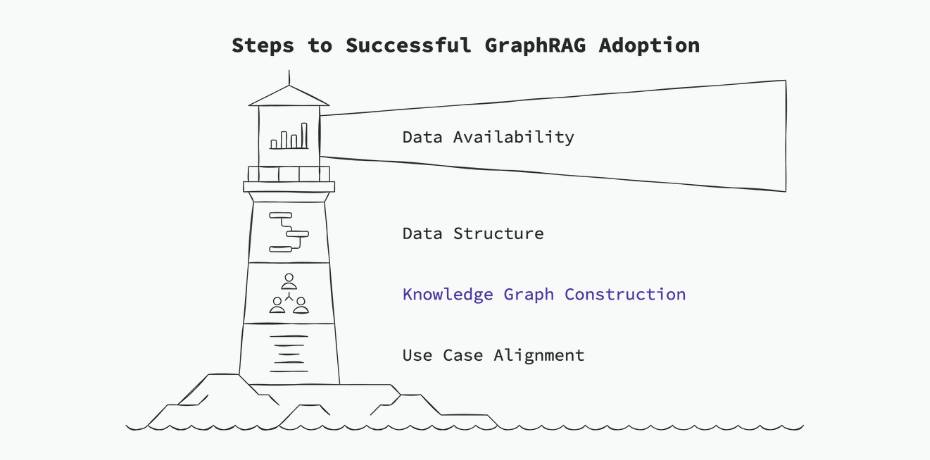

Factors to Consider for GraphRAG Adoption

Successful GraphRAG implementation hinges on data quality, computational resources, expertise, and cost-benefit analysis.

- Data Availability: Sufficient and high-quality data is essential for building a robust knowledge graph.

- Data Structure: Domains rich in structured information, such as finance, healthcare, or supply chain, are prime candidates for GraphRAG.

- Knowledge Graph Construction: The ability to efficiently extract entities and relationships from data using LLMs or other tools is crucial.

- Use Case Alignment: GraphRAG excels in scenarios demanding complex reasoning and deep semantic understanding.

Research Trends

The research around GraphRAG can evolve in several promising directions:

- Enhanced Knowledge Graph Construction: Developing more efficient and accurate methods for creating knowledge graphs, including techniques for handling noisy and unstructured data.

- Multimodal GraphRAG: Expanding GraphRAG to incorporate multimodal data, such as images, videos, and audio, to enrich the knowledge graph and improve response quality.

- Explainable GraphRAG: Developing techniques to make the reasoning process of GraphRAG more transparent and understandable to users, such as graph visualization.

- Large-Scale GraphRAG: Scaling GraphRAG to handle massive knowledge graphs and real-world applications.

- GraphRAG for Specific Domains: Tailoring GraphRAG to specific domains, such as programming, healthcare, finance, or legal, to achieve optimal performance.

GraphRAG represents a significant advancement in how we build LLM-powered applications. By integrating knowledge graphs, GraphRAG overcomes many limitations of traditional RAG systems, enabling more accurate, informative, and explainable outputs. As research progresses, we can anticipate even more sophisticated and impactful applications of GraphRAG across various domains. The future of information retrieval and question-answering lies in the convergence of knowledge graphs and language models.

If you are ready to experience the power of GraphRAG, try building your own GraphRAG solution with FalkorDB and GraphRAG-SDK today.

FAQ

Editor’s note: The following FAQ has been updated to reflect current trends and best practices in August 2025.

What sources of data can be used to construct or enhance knowledge graphs?

At the heart of an effective GraphRAG system lies the quality and diversity of its knowledge graph. Building this foundation means drawing from a variety of information sources to ensure rich, accurate, and meaningful connections.

Graph Enrichment Sources: Improve your knowledge graphs by computing new relationships or attributes. This includes techniques like topic clustering, similarity scoring, and graph algorithms. Such enhancements can help surface hidden patterns and improve response relevance.

What are the three key phases of RAG?

The three phases of RAG: Retrieval, Augmentation, and Generation.

Every RAG system consists of three essential phases: retrieval, augmentation, and generation. Understanding these steps is crucial before diving deeper into RAG.

Retrieval:

The process begins with seeking out relevant information in response to a user query. This typically involves searching databases, document collections, or knowledge bases using techniques such as keyword search, semantic similarity, or vector-based retrieval. The aim is to find the most pertinent snippets of data that can help answer the question at hand.

Augmentation:

Once the relevant information has been gathered, the next step is to enhance the original user query with this supporting data. The retrieved content is thoughtfully blended with the question, often accompanied by additional instructions or context. This forms a detailed prompt that gives the language model a focused set of facts to work with, steering the response generation toward accuracy and relevance.

Generation:

With the context-rich prompt in hand, the language model gets to work. Instead of relying solely on its built-in pretraining, it crafts a response grounded in the freshly provided information. This step ensures the output remains tethered to external, up-to-date knowledge, rather than regurgitating stale or generic answers. The generated response can also include citations or metadata for added transparency.

What is agentic traversal?

Agentic traversal is an approach to information retrieval where a large language model (LLM) acts much like a seasoned detective, charting out a plan and strategically deciding which tools or “retrievers” to use for each information need.

Rather than relying on a single search or method, the LLM maps out a sequence of steps, choosing from multiple retrieval methods (such as keyword search, graph queries, or semantic similarity lookups) and executing each as needed.

This process unfolds dynamically: the LLM determines which retrievers might yield the most relevant pieces at different stages, coordinates their use in a logical order, and aggregates the discoveries to build a complete answer.
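A toy sketch of that loop follows. The planner is a hard-coded, rule-based stand-in for the LLM (in a real system the model would produce the plan, often through tool or function calling), and the graph and keyword retrievers are hypothetical functions over in-memory data. It shows only the control flow: plan, run each retriever in order, feed each step's results into the next, and keep a trace of everything retrieved.

```python
from typing import Callable

# Hypothetical toy data sources
GRAPH = {
    ("The Revenant", "LEAD_ACTOR"): ["Leonardo DiCaprio"],
    ("Leonardo DiCaprio", "ACTED_IN"): ["The Revenant", "Inception"],
    ("The Revenant", "DIRECTED_BY"): ["Alejandro G. Iñárritu"],
    ("Inception", "DIRECTED_BY"): ["Christopher Nolan"],
}
DOCS = {
    "The Revenant": "A frontier survival drama set in the 1820s.",
    "Inception": "A sci-fi heist film about entering dreams.",
}

def graph_hop(items: list[str], relation: str) -> list[str]:
    """Graph retriever: follow `relation` edges from every item."""
    return [n for item in items for n in GRAPH.get((item, relation), [])]

def keyword_filter(items: list[str], term: str) -> list[str]:
    """Keyword retriever: keep items whose document mentions `term`."""
    return [item for item in items if term.lower() in DOCS.get(item, "").lower()]

RETRIEVERS: dict[str, Callable[[list[str], str], list[str]]] = {
    "graph": graph_hop,
    "keyword": keyword_filter,
}

def plan(question: str) -> tuple[list[str], list[tuple[str, str]]]:
    """Stand-in for the LLM planner: start entities plus ordered (retriever, argument) steps."""
    # A real agent would derive this plan from `question`; it is hard-coded here.
    return ["The Revenant"], [
        ("graph", "LEAD_ACTOR"),
        ("graph", "ACTED_IN"),
        ("keyword", "sci-fi"),
        ("graph", "DIRECTED_BY"),
    ]

def agentic_traverse(question: str) -> tuple[list[str], list[tuple[str, str, list[str]]]]:
    items, steps = plan(question)
    trace = []  # evidence gathered at each step, useful for explaining the answer
    for retriever_name, argument in steps:
        items = RETRIEVERS[retriever_name](items, argument)
        trace.append((retriever_name, argument, items))
    return items, trace

answer, trace = agentic_traverse("Who directed the sci-fi movie where the lead actor was also in The Revenant?")
print(answer)  # ['Christopher Nolan']
```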